
Feature Article

The Cedars Project: Implementing a Model for Distributed Digital Archives

Kelly Russell

Cedars Project Manager

K.L.russell@library.leeds.ac.uk

and

Derek Sergeant

Cedars Project Officer

D.M.sergeant@library.leeds.ac.uk

Introduction

As part of the UK Electronic Libraries Programme (eLib), the Cedars project has been funded to explore issues and develop practical exemplars for the long-term preservation of digital materials. The Consortium of University Research Libraries (CURL) represents the major national, academic, and research libraries in England, Wales, Scotland, and Northern Ireland, and is therefore well placed to lead in this relatively new and increasingly urgent area. Cedars stands for "CURL Exemplars in Digital Archives," and the project focuses on strategic, methodological, and practical issues for libraries in preserving digital materials into the future. The 3-year project, now in its second year, is led by the universities of Oxford, Cambridge, and Leeds. The UK Office of Library Networking is also a partner in the project, with a particular focus on metadata issues for long-term preservation.

One of the first challenges in establishing the project was to implement an agreed model or standard for archiving materials. If the demonstrator projects are to illustrate a scaleable distributed digital archive, technical development and implementation across all three sites must converge. This paper provides an overview of the Cedars distributed archive architecture, which is based on an implementation of the Open Archival Information System (OAIS) reference model currently being developed through the International Organization for Standardization (ISO). (1) It also touches briefly on the evolution of a preservation metadata specification, a key component of an effective digital archive. Cedars is also investigating a number of other areas related to digital preservation, including technical approaches to preservation, intellectual property rights, and the copyright implications of preserving digital materials. These areas are beyond the scope of this paper; information about them can be found at the Cedars Web site.

Terminology

For the sake of clarity, it is important to define some of the terms that will recur in this paper. For the purposes of the project, Cedars has defined digital preservation as "storage, maintenance, and access to a digital object over the long term, usually as a consequence of applying one or more digital preservation strategies including technology preservation, technology emulation, or data migration." (2)

A digital object, in this sense, is defined simply as any resource that can be stored or rendered (i.e., made meaningful) by a computer. This broad definition includes both digitized resources and objects that are "born digital." It is important to understand that digital preservation is not concerned only (or even primarily) with retrospectively digitized resources. In the short to medium term, academic libraries and archives may continue to focus attention on digital imaging; in the long term, however, preserving "digital only" resources presents the most complex challenges, because for born-digital materials there may be no alternative to relying on the digital object itself. "Long term" in this context simply means long enough to be concerned with the impact of changing technologies; for the purposes of this article, it covers timescales of years, decades, or even centuries.

Digital Archiving - A Distributed Model

The UK currently has a system for the legal deposit of most non-digital material published in the UK. Deposit is limited to six specific libraries: the British Library, the National Libraries of Scotland and Wales, Cambridge University, Oxford University, and Trinity College Dublin. As with the system for print materials, future legislation for digital resources will almost certainly be based on a distributed system composed of several repositories. The adoption of standard approaches to managing a digital archive is critical, even more so than for archiving non-digital resources. In a distributed digital archive, for instance, only one copy of an object may be deposited, with a single repository, and the archive as a whole relies on interoperability and the sharing of resources among its member repositories. Convergent development and implementation across the repositories is therefore paramount.
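To make the single-copy arrangement concrete, here is a minimal Python sketch of how members of a distributed archive might resolve an object that is held at only one site. The shared registry, the class and method names, and the use of the three Cedars sites as repository names are illustrative assumptions, not part of any Cedars or legal deposit specification.

```python
from dataclasses import dataclass, field


@dataclass
class Repository:
    """One member repository in a hypothetical distributed archive."""
    name: str
    holdings: dict[str, bytes] = field(default_factory=dict)


class DistributedArchive:
    """Hypothetical shared registry mapping each object ID to the one
    repository that holds the deposited copy."""

    def __init__(self) -> None:
        self.repositories: dict[str, Repository] = {}
        self.registry: dict[str, str] = {}

    def add_repository(self, repo: Repository) -> None:
        self.repositories[repo.name] = repo

    def deposit(self, object_id: str, data: bytes, repo_name: str) -> None:
        # Only one copy is deposited, so a second deposit is rejected.
        if object_id in self.registry:
            raise ValueError(f"{object_id} already held at {self.registry[object_id]}")
        self.repositories[repo_name].holdings[object_id] = data
        self.registry[object_id] = repo_name

    def retrieve(self, object_id: str) -> tuple[str, bytes]:
        # Any member can resolve the object through the shared registry,
        # even though only one repository actually stores it.
        repo_name = self.registry[object_id]
        return repo_name, self.repositories[repo_name].holdings[object_id]
```

The design choice to illustrate is that the registry, not each repository, must be shared: without an agreed way to locate the single copy, the other sites cannot serve it, which is why convergent implementation matters.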

The Open Archival Information System (OAIS)

ISO has been collaborating with the Consultative Committee for Space Data Systems on the development of a standard framework for implementing a digital archive. The OAIS reference model includes the complete range of functions within any archive (whether digital or not): acquisition (referred to hereafter as "ingest"), data storage, data management, administration, and access (referred to as "dissemination"). The OAIS model provides a useful framework, including concepts and vocabulary that facilitate clear and meaningful discussion and implementation of archival repositories. Although developed initially for archiving geographical information systems and satellite data, OAIS is intended to be applicable to any archive. Cedars has been testing the applicability of the OAIS model within the context of a distributed digital archive of library materials.

Before describing the Cedars Architecture, which implements the OAIS model, it is worth noting that Cedars is also addressing issues external to the OAIS model. The OAIS model does not focus on the environment external to the archive, which includes key stakeholders such as producers, consumers, and management. In a Cedars context, these external factors must also be considered. For example, within Cedars certain "pre-ingest" activities (occurring before material is taken into the archive) have been recognized, such as negotiating with producers and copyright holders to agree on terms regulating the dissemination of digital objects. Similarly, various library management issues are also being addressed, such as criteria for selecting materials for archiving and other organizational questions about how digital preservation fits within the framework of library and information management. The details of these external factors are beyond the scope of this paper.

For the purpose of this discussion, the Cedars Architecture can be seen as a framework that underpins a workable archive based on the OAIS model. This framework supports three different "information packages," each based on specific functions within the archive itself. Each information package (discussed in detail below) exists only in relation to an archival function: submission, archiving, or dissemination. It contains two elements:

Metadata that describes the software and systems necessary to make the data usable is referred to as "representation information," while metadata that describes an object's preservation status (e.g., its provenance and context) is referred to as "preservation description information." Making a clear distinction between these two types of metadata within the OAIS model is not always easy. Within Cedars, there are ongoing discussions about which metadata constitutes representation information and which is included in the preservation metadata. Indeed, this may vary depending on the nature of the object to be preserved. The distinction may be clearer for GIS data, which constitute the basis for the OAIS model; for the complex multimedia materials found in library collections, however, it can be blurry. Cedars plans to use its practical exemplars, based on specific digital library resources, to better understand this distinction.
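As a rough illustration of the two kinds of metadata just described, the following Python sketch models an information package as a byte stream plus representation information and preservation description information. The field names, and the choice of provenance, context, and a fixity digest as preservation fields, are assumptions made for the example; as noted above, where the line between the two metadata types falls is still under discussion within Cedars.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class RepresentationInformation:
    """Metadata needed to make the byte stream usable (hypothetical fields)."""
    format_name: str
    software: list[str] = field(default_factory=list)
    hardware: list[str] = field(default_factory=list)


@dataclass
class PreservationDescription:
    """Metadata describing the object's preservation status (hypothetical fields)."""
    provenance: list[str] = field(default_factory=list)
    context: str = ""
    fixity_sha256: str = ""


@dataclass
class InformationPackage:
    """A byte stream packaged with both kinds of metadata."""
    object_id: str
    data: bytes
    representation: RepresentationInformation
    preservation: PreservationDescription

    def __post_init__(self) -> None:
        # Record a fixity digest at packaging time if none was supplied.
        if not self.preservation.fixity_sha256:
            self.preservation.fixity_sha256 = hashlib.sha256(self.data).hexdigest()


pkg = InformationPackage(
    object_id="example-pdf",
    data=b"%PDF-1.3 ...",
    representation=RepresentationInformation("PDF 1.3", software=["PDF viewer"]),
    preservation=PreservationDescription(provenance=["deposited by publisher"]),
)
print(pkg.representation.format_name, pkg.preservation.fixity_sha256[:12])
```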

Archival Information Packages (AIPs)

AIPs encapsulate preserved digital objects and are physically stored in the archive. For ease of media independence, an AIP is handled as a byte stream; however, the metadata elements can be stored by reference rather than being placed within the AIP (i.e., each digital object is stored as a byte stream along with pointers to the metadata that allow it to be rendered and that describe its preservation process). In the case of representation information, which provides a rendering scheme for the digital object, a whole network of references may be used to link each AIP with the relevant resources. For example, a PDF file would require storage of the byte stream itself as well as a "rendering scheme" that records the version of PDF and information about the required software and hardware. By keeping the rendering tools separate from the digital object itself, the archive can update the representation network without trawling through every AIP to incorporate the change. This allows the archive to improve over time, as new tools can be plugged in as they become available. For example, if an archive previously relied on 286 machines (early PCs based on the Intel 80286 processor) to render some of its content, these might be replaced by a 286 emulator once one becomes available. Because the rendering tools are stored separately from the digital objects, the emulator can be seamlessly inserted into the representation network without altering the AIP itself.
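The benefit of storing representation information by reference can be shown in a few lines of Python. This is a minimal sketch, assuming a simple in-memory mapping stands in for the representation network; the reference key and tool names are hypothetical. The AIPs are immutable, yet all of them pick up the emulator once the single shared entry is updated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIP:
    """Archival package: the byte stream plus a reference to its rendering scheme."""
    object_id: str
    byte_stream: bytes
    representation_ref: str  # key into the shared representation network


class RepresentationNetwork:
    """Shared, updatable map from rendering-scheme keys to rendering tools."""

    def __init__(self) -> None:
        self._tools: dict[str, str] = {}

    def register(self, ref: str, tool: str) -> None:
        # Replacing an entry re-points every AIP that uses this key,
        # without rewriting any AIP.
        self._tools[ref] = tool

    def renderer_for(self, aip: AIP) -> str:
        return self._tools[aip.representation_ref]


network = RepresentationNetwork()
network.register("dos-app-on-286", "physical 286 PC")
aips = [AIP(f"obj-{i}", b"\x00" * 4, "dos-app-on-286") for i in range(3)]

# Later, a 286 emulator becomes available: update one network entry only.
network.register("dos-app-on-286", "286 emulator")
print({a.object_id: network.renderer_for(a) for a in aips})
# {'obj-0': '286 emulator', 'obj-1': '286 emulator', 'obj-2': '286 emulator'}
```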

Submission Information Packages (SIPs)

The OAIS model suggests that to usefully preserve a digital object, that object should be fully understood. That is, without meaningful retrieval or rendering, there is no preservation. When a digital object is submitted to an archive, sufficient information about it needs to be included in the SIP during the ingest phase. Cedars proposes that "the level of abstraction" of the digital object should be identified to improve understanding of the object. This is a particularly useful approach for complex, multi-layered objects. The first resource Cedars has taken through the process, a CD-ROM release of Chaucer's The Wife of Bath, has been provided by Cambridge University Press, one of the project participants. For this CD-ROM, the underlying digital object could be seen either as an SGML document, or as a piece of PC-based software that runs using the DynaText viewer included on the same CD-ROM. Alternatively, taking the abstraction further, the underlying layer can be seen as a set of pages of text that can be navigated in a well-defined manner. Identifying the level of abstraction also allows the formulation of recommendations about the "best" or "most suitable" choices for an object's preservation and management within the OAIS.
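One way to record the "level of abstraction" at ingest is to let the SIP list the candidate views of an object and require one to be chosen before ingest proceeds. The Python sketch below does this for the Wife of Bath CD-ROM; the class names, the ingest rule, and the representation needs listed for each level are illustrative assumptions rather than the Cedars specification.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AbstractionLevel:
    description: str
    representation_needed: list[str] = field(default_factory=list)


@dataclass
class SubmissionPackage:
    object_id: str
    candidate_levels: list[AbstractionLevel]
    chosen_level: Optional[int] = None  # index into candidate_levels

    def ready_for_ingest(self) -> bool:
        # Hypothetical rule: do not ingest until a level of abstraction is chosen.
        return (self.chosen_level is not None
                and 0 <= self.chosen_level < len(self.candidate_levels))

    def required_representation(self) -> list[str]:
        """Representation information the archive must hold for the chosen level."""
        if not self.ready_for_ingest():
            raise ValueError("no level of abstraction chosen")
        return self.candidate_levels[self.chosen_level].representation_needed


sip = SubmissionPackage(
    "wife-of-bath-cdrom",
    [
        AbstractionLevel("SGML document", ["SGML DTD", "SGML parser"]),
        AbstractionLevel("PC software run with the bundled DynaText viewer",
                         ["DynaText viewer", "PC-compatible platform"]),
        AbstractionLevel("navigable pages of text",
                         ["page text", "navigation structure"]),
    ],
)
```

Choosing the SGML level, for instance, commits the archive to keeping a DTD and parser usable, whereas choosing the software level commits it to the viewer and a PC platform (or an emulator of one).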

Dissemination Information Packages (DIPs)

The OAIS model includes an access component, which relies on a system of metadata that Cedars may include as part of the PDI (preservation description information) for resource discovery, or for searching and retrieving material from the archive. A DIP contains the resulting digital object delivered in response to a user's request based on the search and retrieval metadata. It carries only those metadata elements that are relevant to retrieving and rendering the digital object on the requested platform. Implementation decisions made about the AIP and SIP heavily influence how DIPs are used. For example, if a set of image files has been digitized and stored as uncompressed TIFF images, the archive might choose to store enough information to also deliver those images as JPEGs via a Web browser. The DIP would then be the images themselves rendered as JPEG files. Metadata elements may need to be tailored to the preservation approach (migration or emulation) taken to render the DIP. In the case of image files, migration is likely to be the preferred preservation strategy, and this will have an ongoing impact on the options for dissemination. DIP usage restrictions will adhere to the access terms negotiated with the rights holder when the material is taken into the archive.
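The TIFF-to-JPEG example can be expressed as a small dissemination step. In this minimal Python sketch, the table of available migrations, the platform names, and the `AccessTerms` structure are all hypothetical; the point is only that the DIP is derived from what the AIP stores, and that the access terms agreed at ingest are checked before anything is delivered. The image conversion itself is not performed here.

```python
from dataclasses import dataclass

# Hypothetical table: (stored format, requesting platform) -> delivered format.
MIGRATIONS = {
    ("TIFF", "web-browser"): "JPEG",
    ("TIFF", "archival-workstation"): "TIFF",
}


@dataclass
class AccessTerms:
    """Terms negotiated with the rights holder at ingest (illustrative field)."""
    allowed_platforms: frozenset[str]


@dataclass
class DIP:
    object_id: str
    delivered_format: str
    platform: str


def make_dip(object_id: str, stored_format: str, platform: str,
             terms: AccessTerms) -> DIP:
    # Enforce the negotiated access terms before building the package.
    if platform not in terms.allowed_platforms:
        raise PermissionError(f"{platform} not permitted for {object_id}")
    try:
        delivered = MIGRATIONS[(stored_format, platform)]
    except KeyError:
        raise ValueError(f"no rendering of {stored_format} for {platform}") from None
    return DIP(object_id, delivered, platform)
```

For example, `make_dip("img-001", "TIFF", "web-browser", AccessTerms(frozenset({"web-browser"})))` yields a DIP whose delivered format is JPEG, while a request from a platform outside the agreed terms is refused.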

Archive Administration and Data Management

With the three information packages described, the remaining functions of the OAIS model concern data management and the overall administration of the archive. It is critical to ensure that digital object integrity is adequately maintained, through activities ranging from controlling the objects within the archive (security) to running a validation and media refreshment program. An archive might also wish to manage system configuration (audits, performance, and usage monitoring).
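Validation and media refreshment both rest on fixity checking: recording a digest for each object and recomputing it later. The following Python sketch uses SHA-256 from the standard library, chosen here only as an example; the in-memory dictionaries standing in for storage media, and the function names, are assumptions for illustration.

```python
import hashlib


def digest(data: bytes) -> str:
    """SHA-256 fixity value for a byte stream."""
    return hashlib.sha256(data).hexdigest()


def validate(store: dict[str, bytes], recorded: dict[str, str]) -> list[str]:
    """IDs of objects whose current digest differs from the recorded one."""
    return [oid for oid, data in store.items() if digest(data) != recorded.get(oid)]


def refresh(store: dict[str, bytes], recorded: dict[str, str]) -> dict[str, bytes]:
    """Copy every object to 'new media' (a new dict here), verifying before and after."""
    damaged = validate(store, recorded)
    if damaged:
        raise ValueError(f"refusing to refresh damaged objects: {damaged}")
    new_media = {oid: bytes(data) for oid, data in store.items()}
    if validate(new_media, recorded):
        raise ValueError("copy failed fixity check")
    return new_media
```

Refusing to refresh damaged objects is a deliberate choice in this sketch: copying a corrupted byte stream onto new media would only preserve the damage.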

The diagram below may help to illustrate the flow of materials into and out of the archive as it is envisaged by the Cedars Project. This is based on the OAIS concepts but slightly simplified.

Metadata for Preservation

As has been implied in the discussion of the Cedars architecture and the OAIS model, metadata that describes the archived resources is a critical part of effective preservation. Metadata is necessary not only for resource searching, discovery, and location, but also for describing the technical nature of the resources (and associated systems) and any preservation activities that may have taken place. Until recently, most metadata research has focused on "resource discovery" applications. However, there is a whole host of other metadata elements that can support activities such as rights management and preservation. For Cedars, these are key aspects of implementing a digital archive.

The OAIS reference model and RLG's efforts in defining preservation metadata have provided a springboard for Cedars in developing detailed and scaleable metadata specifications to support the preservation process. Factors that impact the selection and "granularity" of the metadata include:

Cedars will prepare a general specification that will be "cross-walked" through various types of digital resources. Although a general model will prove useful, it is through its practical application to specific digital resources that the Project expects to learn the most.

Conclusions

The OAIS model provides a useful and scaleable framework for the implementation of archives across a number of repositories. An archive built around library collections and resources presents its own complexities and challenges, and the Cedars project is one of the first projects to attempt an implementation of OAIS in this context. The OAIS model makes no assumptions about which preservation strategy will be adopted, yet that choice is a key factor in determining the costs and resources required for archiving. Through the Cedars project, CURL plans to explore questions specific to different preservation strategies. However, as this paper has suggested, implementing a standard approach to the management of a distributed archive is the first and possibly the most important step.

Footnotes

1. The Cedars Architecture, first introduced in a paper by Dr. David Holdsworth, is available from the Cedars Web site at http://www.curl.ac.uk/projects.shtml.

2. Cedars has adopted the definition of "migration" that was offered by the RLG/CPA report on digital preservation (Preserving Digital Information: Report of the Task Force on Archiving of Digital Information, Commission on Preservation and Access and The Research Libraries Group, Inc., May 1, 1996, http://www.rlg.org/ArchTF/). Migration is "...a broader and richer concept than 'refreshing.' ... It is a set of organized tasks designed to achieve the periodic transfer of digital materials from one hardware/software configuration to another, or from one generation of computer technology to a subsequent generation. The purpose of migration is to preserve the integrity of digital objects; and to retain the ability for clients to retrieve, display, and otherwise use them in the face of constantly changing technology."

Technical Feature

Tools and Techniques in Evaluating Digital Imaging Projects

Robert Rieger, Assistant Director, Human-Computer Interaction

Group, Cornell University

rhr1@cornell.edu

and

Geri Gay, Professor, Department of Communication and Director,

Human-Computer Interaction Group, Cornell University

gkg1@cornell.edu

Cultural institutions that are making digital image collections available via the Web consistently report large numbers of users. The New York Public Library, for instance, claims ten million hits on its Web sites each month, as opposed to 50,000 books paged in its reading room at 42nd Street. (1) Although these numbers are impressive, what can one really conclude about the value and utility of these digital image collections? An essential effort of any digital initiative is to evaluate the effectiveness and usability of the delivery system, including its interface, ease of use, and the value of the information provided for users. The purpose of this article is to describe different methodologies and techniques that can be employed in evaluating Web sites that provide access to digitized collections. Assessing the impact of digital image collections can be difficult due to the challenges of gathering, organizing, and sharing useful data. Media- and technology-rich environments, such as cultural Web sites, demand equally rich data collection and analysis tools that are capable of examining human-computer interactions. After providing an overview of the key issues and definitions, this article describes several tools and techniques helpful for collecting and analyzing data within online environments. The information presented is based on the Human-Computer Interaction (HCI) Group's (formerly Interactive Media Group) involvement in the evaluation stage of a number of digital imaging initiatives, including Making of America I, Making of America II, Museum Education Site License Project, Global Digital Museum, and the Art Museum Image Consortium (AMICO) University Testbed (2). For additional information on evaluation and social science research methods, the following Web sites offer excellent primers: Professor Bill Trochim's Center for Social Research Methods and the American Evaluation Association. 
A set of evaluation guidelines for a specific library evaluation project is available from The Tavistock Institute.

1. Evaluation Framework

Evaluators can no longer consider technological devices in isolation, but must develop a functional understanding of how the technology is used within a particular context, such as the use of an image database to support a curriculum. Thus, current approaches to the evaluation of interactive systems are broadening to include multifaceted methods of data collection and analysis. This approach requires considering the interactions among the various groups that are working to define and develop digital environments.(3) The authors encourage a framework for evaluation based on a "social construction of technology" (SCOT) model, which considers the multiple social perspectives surrounding the development of new technologies.(4) From this perspective, simple measurements of technological performance (e.g., number of logins) are inadequate, especially when isolated from data about the social structure within which the systems are designed or for which they are planned. Digital imaging evaluation projects at the HCI Group rely on different kinds of data from a variety of sources, such as usage statistics, interviews with experts, and user focus groups. This multifaceted approach is called "triangulation," and findings based on it tend to be richer, better substantiated, and more useful to readers.(5)

Another important principle that guides the work of the HCI Group is to provide "thick description," that is, a very detailed representation of the information-seeking process, including, for example, the work context of users.(6)

The HCI Group also strives to use technology to evaluate technology. Researchers and evaluators have experimented with and relied upon computers in evaluations of multimedia environments for many years. Early computer tracking systems allowed inquirers to record system usage, alert developers to problem areas, and save a record of the interaction for later use. Today's approaches serve similar functions, while also taking advantage of the intersection of multimedia, networked, and participatory environments. They not only allow evaluators to gather data from Web sites as they are being used, but also make it possible to integrate other data, such as observations, interviews, documents produced, and video and audio recordings. The technologies also support evaluators as they analyze the data and develop their interpretations.

2. Evaluation Choices

Although all successful evaluations involve a mixed-methods approach, the authors have found it useful to characterize their approach in terms of the dimensions presented in Table 1 and elaborated below. A general rule is to plan an overall evaluation strategy that, at one point or another, pulls from both ends of each dimension.

Table 1

| Dimension | | |
|---|---|---|
| Types of Measurement | Observing user interactions | Collecting user opinions |
| Test Audiences | Expert developers | End-users |
| Presence of Evaluator | Obtrusive | Unobtrusive |
| Timing of Data Collection | Synchronous | Asynchronous |
| Type of Data | Quantitative | Qualitative |
| Timing of Analysis & Reporting | Synchronous | Asynchronous |

Types of Measurement: The two basic types of measurement are 1) observing user interactions with the system, and 2) collecting user opinions about the system. User interactions can be tracked via a computer, recorded using videotape or screen recorders, or charted by an observer. User opinions can be gathered by a variety of means, including prepared surveys (administered by computer or on paper), focus groups, and one-on-one interviews. A hybrid set-up involves the audio or videotaping of testers as they provide a verbal description of both their actions and opinions. The Group frequently uses this approach in both evaluation and research studies, such as a recent one involving user reactions to visual search tools. The measurements are covered more thoroughly in separate sections below.

Test Audiences: Audiences can be generalized as 1) experts or 2) end users. When evaluating an image Web site, experts include those professionals who are engaged in creating systems, such as programmers, system designers, librarians, archivists, and curators. End-users can be either members of the actual audience (users) for whom the system is designed, or "surrogates" who are demographically similar and share common motivations with end-users.

Presence of Evaluators: An obtrusive presence implies that the evaluator is directly noticed by the test user, such as when the two sit beside each other during a talk-aloud test. An unobtrusive presence implies the opposite: testers are not constantly aware of the evaluator. Generally, the less obtrusive the evaluator, the more natural the test is considered.

Timing of Data Collection: Information and feedback can be collected either during the test of the system (synchronous) or afterwards (asynchronous). Synchronous data collection has the advantage of being more immediate and reflexive, while asynchronous collection provides testers a more typical usage environment and allows more time for reflection.

Types of Data: Data are usually thought of as quantitative or qualitative, and include numbers, text, recordings, documents, etc. In the Global Digital Museum test project, for instance, the HCI Group relied on interviews, focus group sessions, user surveys, and usage data for evaluating user experiences.

Timing of Data Analysis & Reporting: Thanks to new technologies, data analysis and reporting can now take place in a more dynamic and synchronous fashion. Traditional methods of analyzing and reporting after all data have been collected are considered asynchronous. As discussed below, the HCI Group increasingly relies on a synchronous approach that integrates data collection with analysis and reporting.

3. Observing User Interactions: Tracking Tools and Other Background Measurements

This section explores the goals and implementation strategies for using "naturally generated" or "tracking" data and other unobtrusive measures from the computer as part of a program to assess online environments. One of the great advantages of computers is that the system leaves behind large amounts of documentation and performance evidence. These tracks require no effort on the part of the test user and minimal attention from the inquirer.

User-tracking refers to monitoring a user or group of users according to how they function in an electronic environment. Investigators can choose to run integrated or companion systems in the background that record the many interactions made by users. These systems can meticulously record the number of keystrokes, content items seen by the user, navigation strategies, and paths constructed through the program. For example, today's popular Web servers generate log files containing vast amounts of data about user navigation that evaluators can access either directly through a text editor or through one of the many commercially available log file analysis packages (e.g., Surf Report, Web Manage (Net Intellect), WWW Stat, and Logdoor). Many server software packages also include some type of log analysis tool. The number and origin of site visitors, the most frequently requested pages or graphics files, server activity by time or date, and the number of errors are among the many measurements available through the log file. By measuring the length of time between selected links, the amount of time spent on individual pages can be estimated. Another common analysis lists the most requested pages together with the average time spent on each. An example of a usage report generated by WebTrends Log Analyzer can be found at the Zoom Project Web site.
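For readers who want to see what the simplest log-file measurements involve, here is a minimal Python sketch that reads lines in the Common Log Format written by many Web servers and reports distinct client hosts, error responses, and the most requested paths. It is not a reconstruction of any of the packages named above; the sample lines are invented, and a count of distinct hosts only approximates the number of visitors, since proxies and shared machines blur the two.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [time] "request" status bytes
CLF = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def summarize(lines):
    pages = Counter()
    hosts = set()
    errors = 0
    for line in lines:
        m = CLF.match(line)
        if not m:
            continue  # skip malformed lines
        hosts.add(m["host"])
        if int(m["status"]) >= 400:
            errors += 1  # client or server error
        else:
            pages[m["path"]] += 1
    return {"visitors": len(hosts), "errors": errors,
            "top_pages": pages.most_common(3)}


log = [
    '10.0.0.1 - - [10/Oct/2000:13:55:36 -0500] "GET /index.html HTTP/1.0" 200 2326',
    '10.0.0.1 - - [10/Oct/2000:13:56:10 -0500] "GET /images/moa.html HTTP/1.0" 200 5120',
    '10.0.0.2 - - [10/Oct/2000:14:01:02 -0500] "GET /index.html HTTP/1.0" 200 2326',
    '10.0.0.2 - - [10/Oct/2000:14:01:40 -0500] "GET /missing.gif HTTP/1.0" 404 0',
]
print(summarize(log))
# {'visitors': 2, 'errors': 1, 'top_pages': [('/index.html', 2), ('/images/moa.html', 1)]}
```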

Although the data are usually aggregated to show general trends, log files can also record information about individual users, such as their navigation patterns through a Web site. Using commercial analysis software, investigators can specify an individual IP address to zero in on a particular user or computer. Another simple method for tracking individual users involves hiding "on-load" commands within an HTML file so that each time an action takes place, a report of some kind is written to an external file. This is useful for Web sites that contain dynamic HTML or JavaScript applications, whose activity does not necessarily appear in log or other record-keeping files. Similarly, investigators with access to users' hardware can view history (.hst) files or cache files to monitor Web site usage. The HCI Group uses this method in controlled lab studies in which it is important to track every move made within a site. A recent study, for example, measured long- and short-term memory of banner advertisements on Web sites, which required recording every link testers chose within a system.
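The per-user view and the time-on-page estimate mentioned above can be sketched in the same way. This Python function assumes log records have already been parsed into (host, timestamp, path) tuples with Common Log Format timestamps; it groups them by host, orders each host's requests, and estimates time on a page as the gap before that host's next request. Both steps are approximations: one IP address may represent several people, and the gap includes any time the user spent away from the screen. The final page of each path gets no estimate.

```python
from collections import defaultdict
from datetime import datetime

TIME_FMT = "%d/%b/%Y:%H:%M:%S %z"  # timestamp layout used in Common Log Format


def navigation_paths(records):
    """Map each host to its ordered list of (path, estimated seconds on page)."""
    by_host = defaultdict(list)
    for host, stamp, path in records:
        by_host[host].append((datetime.strptime(stamp, TIME_FMT), path))

    paths = {}
    for host, hits in by_host.items():
        hits.sort()  # chronological order for this host
        steps = []
        for i, (when, path) in enumerate(hits):
            nxt = hits[i + 1][0] if i + 1 < len(hits) else None
            seconds = (nxt - when).total_seconds() if nxt is not None else None
            steps.append((path, seconds))
        paths[host] = steps
    return paths


records = [
    ("10.0.0.1", "10/Oct/2000:13:56:10 -0500", "/images/moa.html"),
    ("10.0.0.1", "10/Oct/2000:13:55:36 -0500", "/index.html"),
    ("10.0.0.2", "10/Oct/2000:14:01:02 -0500", "/index.html"),
]
print(navigation_paths(records))
```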

Other background measurements refer more directly to the successes and failures of users' interactions. Performance can be measured, for example, by the number of correct hits a user gets from a digital image collection Web site, or how efficiently a user recognizes the most logical path within a hyperlinked environment. User artifacts, such as postings to a networked bulletin board or communications with a site Webmaster, can be analyzed over time to reveal trends.

In addition to recording user sessions by audio and video means, investigators can rely on a computer-based screen recorder such as ScreenCam or SnagIt. Such recordings can be considered both a way to track users and, if users are encouraged to comment as they move about the system, an approach to gathering user feedback. Video and audio records can be time-consuming to review; however, short clips can be powerful illustrations of user interactions. Web streaming technologies now allow sample clips to be delivered to evaluators alongside other data reports.

As previously noted, tracking data are most effectively used in tandem with other evaluation approaches. For example, a user's navigation pattern can be recreated as a visual "player piano" that can be used during an interview or focus group as a conversation stimulus. Alternatively, Web site usage statistics can be posted to a shared evaluation space, giving research collaborators baseline data from which to expand or focus their investigation.

4. Gathering User Feedback

This section focuses on gathering feedback from users and expert developers of digital image collections. The "tried and true" methods of obtaining direct input include survey questionnaires, personal interviews, and focus groups. The Internet now allows the migration of these traditional methods to a networked environment. Using the networked approach allows investigators to gather information from a distributed community of users, and to promote dialogue among users by modifying traditional focus group techniques.

Expert developers are usually best recruited through existing contacts and asked for their opinions through email, phone interviews, site visits, or focus group sessions. There are also fee-based review services available, such as Sitescreen, SiteInspector, and IBRSystem, which offer a fast and efficient way of getting feedback. Experts can also participate in the same evaluation activities as users; however, their opinions are usually analyzed separately.

End users are best recruited for testing using a variety of overlapping appeals, such as a general announcement and news story to the intended audience followed by a direct appeal to individuals through email or personal letters, or through a gatekeeper such as a teacher. An incentive to participate, such as extra credit, free printing, or a cash or gift drawing, no matter how small, is essential.

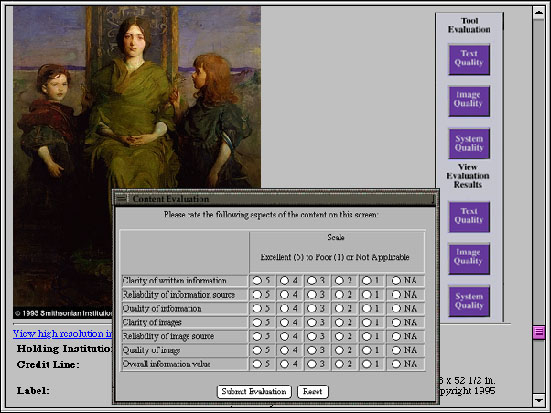

Using JavaScript and dynamic HTML, evaluators can create unique response methods designed to ease the feedback process for users and to create a more naturalistic environment for users to view media. For example, "onmouseover" commands in HTML allow inquirers to embed survey questions in a Web document, with their appearance in a floating window triggered by one or a combination of mouse or timing actions. If a user drags the mouse over a particular graphic element or other object, this may trigger the opening of a question box. The trigger elements may or may not be visible to the user. Alternatively, a question box can be set to appear on a timed basis, when a user "mouses over" a certain section of the page, or after data have been entered. A sample dialog box designed by the HCI Group to solicit user ratings of a prototype image database is presented as Figure 2. Solicitations for open-ended feedback could also be incorporated using a similar dialog box. The authors occasionally use this type of tool in lab research on user interfaces.

There are also several Web annotation software packages, such as Hot Off the Web, that allow users to make annotations directly on Web-like documents. Any URL can be accessed via the custom browser. The program then presents the page as a single snapshot; hyperlinks and other HTML or JavaScript code become inactive. Reviewers are then able to post comments and other annotations using small "post-it" style notes, stickers, highlight pens, and drawing tools. The marked-up page can then be saved as HTML, with all annotations saved in the same folder. These pages can then be served for distributed review or viewed from the desktop.

5. Integration: A Case for Synchronous Data Collection, Analysis, and Reporting

As previously noted, asynchronous evaluations generally involve gathering users' opinions after the fact. Findings are then compiled and reported in a formal and often time-consuming process. Synchronous approaches make extensive use of networked technologies both to collect data and to report findings. The authors find that this technique offers several advantages over asynchronous methods, including the promise of better integration of the evaluation and design processes. The same tools used to distribute the information can be used to improve the design of that information. Users are able to provide feedback as they use the system, allowing designers to take their evaluations into account in an iterative design process.
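As a rough illustration of reporting findings as responses arrive, the sketch below keeps running per-page results that designers could consult during an iterative design cycle. The `Feedback` record, the 1 to 5 rating scale and the `LiveReport` class are assumptions for illustration. They do not describe any tool the authors used.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Feedback:
    page: str          # page or screen the user was viewing when responding
    rating: int        # hypothetical 1 (poor) to 5 (excellent) scale
    comment: str = ""  # optional open-ended remark


class LiveReport:
    """Running per-page results, available as soon as each response arrives."""

    def __init__(self) -> None:
        self.ratings: dict[str, list[int]] = defaultdict(list)
        self.comments: dict[str, list[str]] = defaultdict(list)

    def submit(self, fb: Feedback) -> None:
        if not 1 <= fb.rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings[fb.page].append(fb.rating)
        if fb.comment:
            self.comments[fb.page].append(fb.comment)

    def summary(self) -> dict[str, tuple[int, float]]:
        """Return {page: (responses, mean rating)}, lowest-rated pages first."""
        rows = {p: (len(r), round(mean(r), 2)) for p, r in self.ratings.items()}
        return dict(sorted(rows.items(), key=lambda item: item[1][1]))


report = LiveReport()
report.submit(Feedback("search", 2, "too many steps to reach an image"))
report.submit(Feedback("search", 3))
report.submit(Feedback("viewer", 5))
print(report.summary())
```

Each response is tagged with the page it was given on, so the summary stays tied to the context of actual use rather than to a later recollection.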

Synchronous efforts attempt to place evaluation in the context of actual usage. Consider, for example, having Web site evaluation activities a click or two away from, or even open alongside, a Web product. Although users can certainly complete a paper questionnaire next to a computer screen, the need to switch between modes may be annoying or may diminish the user's sense of context. Another advantage is that synchronous evaluations can improve response rates because of convenience, respondent motivation, and a greater sense of relevance and context.

Conclusion

Frequently there are as many opinions about the goals of evaluation activities as there are sponsors and developers of a digital imaging project. Evaluations can include one or a combination of components, such as a needs assessment (formative evaluation), a description of the current iteration, or a measurement of success in reaching project objectives (summative evaluation). Regardless of the goal, valid and reliable data collection methods are at the heart of all evaluations and, as previously stressed, no single tool or technique can accurately represent an entire project.

Just as delivery technologies grow more and more sophisticated, so too do technologies for assisting in evaluation. User logging and analysis used to be a monumental effort, even in multimedia systems. Now logging and analysis for basic Web site functions can be handled efficiently using inexpensive software. Difficulties remain, however, in gathering accurate and representative responses from users. Communities of researchers and developers continue to search for better ways to measure user behaviors and opinions.
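Log summaries of the kind produced by inexpensive tools such as WWW Stat start from the Web server's access log. The sketch below assumes logs in the NCSA Common Log Format. It counts successful requests per page and the number of distinct client hosts. `page_hits` is a hypothetical helper and does not describe the behavior of any particular product named in this issue.

```python
import re
from collections import Counter

# NCSA Common Log Format: host ident authuser [date] "request" status bytes
CLF = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def page_hits(lines):
    """Count successful (2xx) requests per path and the distinct hosts making them."""
    hits = Counter()
    hosts = set()
    for line in lines:
        m = CLF.match(line)
        if m is None:
            continue  # skip malformed or non-CLF lines
        if m["status"].startswith("2"):
            hits[m["path"]] += 1
            hosts.add(m["host"])
    return hits, len(hosts)


sample = [
    '1.2.3.4 - - [10/Jun/1999:10:00:00 -0400] "GET /index.html HTTP/1.0" 200 1043',
    '5.6.7.8 - - [10/Jun/1999:10:01:09 -0400] "GET /missing.html HTTP/1.0" 404 -',
]
hits, visitors = page_hits(sample)
print(hits.most_common(1), visitors)  # [('/index.html', 1)] 1
```

Counts like these show what users did. As the paragraph above notes, they say nothing about why, which is where surveys and other response-gathering methods remain necessary.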

1. Darnton, Robert. "The New Age of the Book," The New York Review of Books, March 18, 1999, http://www.nybooks.com/nyrev/index.html.

2. For examples of the digital imaging initiative evaluation study reports written by the HCI Group, see:

Gay, G.; Rieger, R.; Sturgill, A. "Findings of the MESL Casual User Survey," http://www.ahip.getty.edu/mesl/reports/mesl_ddi_98/p5_05.1_rieg.html

Kilker, J.; Gay, G. "The Social Construction of a Digital Library: A Case Study Examining Implications for Evaluation," Information Technology and Libraries, 1998, vol. 17, no. 2, pp. 60-69.

Takahashi, J., et al. "Global Digital Museum: Multimedia Information Access and Creation on the Internet," Proceedings of ACM Digital Libraries '98, Pittsburgh, PA, 1998, pp. 244-253.

3. Marchionini, Gary; Plaisant, Catherine. User Interface for the National Digital Library Program: Needs Assessment Report, College Park, Maryland: Human-Computer Interaction Laboratory, University of Maryland at College Park, 1996.

4. Kilker, J.; Gay, G. "The Social Construction of a Digital Library: A Case Study Examining Implications for Evaluation," Information Technology and Libraries, 1998, vol. 17, no. 2, pp. 60-69.

5. Greene, J.C.; McClintock, C. "Triangulation in Evaluation: Design and Analysis Issues," Evaluation Review, 1985, vol. 9, pp. 523-545.

6. Geertz, C. "Thick Description: Toward an Interpretative Theory of Culture," in C. Geertz, The Interpretation of Cultures. New York: Basic Books, 1973, pp. 3-30.


Highlighted Web Site


Calendar of Events

Fourth ACM Digital Libraries Conference (DL '99)

August 11-14, 1999

This forum on digital libraries, sponsored by the Association for Computing Machinery (ACM), will be held in Berkeley, CA. It will feature an expanded program for the presentation of new research results, the discussion of policy issues, and the demonstration of new systems and techniques.

Preserving Photographs in a Digital World

August 14-19, 1999

This annual seminar, held in Rochester, NY, is sponsored by the Image Permanence Institute and the George Eastman House/International Museum of Photography and Film. The seminar focuses on all aspects of photographic conservation, from traditional photo collection preservation techniques to the digital imaging of photographs. Participants will learn about the basics of digital imaging and explore various image capture, storage, display, and output strategies.

Creating Electronic Texts and Images

August 15-20, 1999

This workshop will be held at the University of New Brunswick, Canada, and offers a practical exploration of the research, preservation, and pedagogical uses of electronic texts and images in the humanities. The instructor is David Seaman from the University of Virginia.

Rethinking Cultural Publications: Digital, Multimedia, and Other 21st Century Strategies

September 15-17, 1999

This conference is presented by the Northeast Document Conservation Center (NEDCC) and sponsored by the National Park Service Museum Management Program. It will be held in Washington, D.C. Attendees will learn the basics of how to prepare digital, multimedia, and paper publications on cultural collections for museums, archives, libraries, centers, and other historic preservation resources.

Digitisation of European Cultural Heritage: Products-Principles-Techniques

October 21-23, 1999

This symposium is organized by the Institute for Information Science, Utrecht, The Netherlands, and will focus on successful digitisation projects from various European countries. The morning sessions will be devoted to plenary papers. In the afternoon sessions, participants will have a chance to see demonstrations of various European projects and to try the applications themselves.

Announcements

Consortium Formed for the Maintenance of the Text Encoding Initiative

A new consortium has been formed to continue the work of the Text Encoding Initiative (TEI). The TEI is an international project focused on developing guidelines for the encoding of textual material in electronic form for research purposes. Four universities have agreed to serve as hosts, and the three organizations that founded and supported the TEI have agreed to transfer responsibility for maintaining and revising the TEI Guidelines to the new consortium. The host institutions are: University of Bergen, Norway (Humanities Information Technologies Research Programme); University of Virginia (Electronic Text Center and Institute for Advanced Technology in the Humanities); Oxford University (Computing Services); and Brown University (Scholarly Technology Group).

National Initiative for a Networked Cultural Heritage (NINCH): Request For Proposals

The National Initiative for a Networked Cultural Heritage is undertaking a project to review and evaluate current practice in the digital networking of cultural heritage resources in order to publish a Guide to Good Practice in the Digital Representation and Management of Cultural Heritage Materials. The Working Group has issued an RFP to hire a consultant to conduct the survey and to write the guide.

Grant Winners Announced for Final Library of Congress-Ameritech Competition

Grants for the final year of the competition have been awarded to six institutions. Information about the winners and the funded projects is available on the Web site.

Digitising History: A Guide to Creating Digital Resources from Historical Documents

This Web publication has been commissioned by the History Data Service (HDS) as part of the Arts and Humanities Data Service (AHDS) publication series, Guides to Good Practice in the Creation and Use of Digital Resources. The guide includes a glossary, a bibliography of recommended readings, and guidance on choosing appropriate data formats and on ensuring that a digital resource can be preserved without significant information loss.

Archaeology Data Service: Survey of User Needs of Digital Data

The United Kingdom's Archaeology Data Service has released a report on its survey of the needs of users of digital data in archaeology. The report includes a section on digital archiving, as well as training recommendations that have implications for digital preservation.

The Higher Education Digitisation Service (HEDS): Feasibility Study for the JISC Image Digitisation Initiative

The Joint Information Systems Committee (JISC) Image Digitisation Initiative is digitizing sixteen different image collections as a step towards building, with other JISC-funded digital image libraries, a coherent digital image resource for Higher Education in the United Kingdom. This report describes solutions to a variety of digitization challenges and offers guidance to those planning image digitization projects.

The Virginia Digital Library Program

The Library of Virginia launched the Virginia Digital Library Program (VDLP), a grant program, in 1998 to provide consulting services, funding, and implementation support for local Virginia libraries to develop and disseminate digitization projects. These projects represent more than 1,000 pages of text, 12,000 photographic images, 60,000 card images, and 20,000 database records, and include local newspaper indexes, maps, indexes to cemetery interment records, indexes to diaries and journals, ancestor charts, and numerous local photograph collections. It is anticipated that the results of Phase I will be available on the Web site in the late summer. (Clarification note added on 6/17/99: original text stated the report was available on 6/15/99.)

FAQs

Question:

I have heard of a consortium activity that attempts to develop metadata standards for digital images. Do you know of such an initiative?

Answer:

You may be referring to the Technical Metadata Elements for Image Files Workshop that was held on April 18-19, 1999, in Washington, DC. This one-day invitational workshop was sponsored by the National Information Standards Organization (NISO), the Council on Library and Information Resources (CLIR), and the Research Libraries Group, Inc. (RLG). The primary goal of the meeting was to discuss a comprehensive set of technical metadata elements that should be required in the documentation of digital images. The workshop concentrated on defining the range of technical information needed to manage and use digital still images that reproduce a variety of pictures, documents, and artifacts, with a focus on raster images. The workshop participants divided into three groups for concurrent breakout sessions to discuss and define specific areas of technical metadata.

The workshop Web site includes further information about the goals of this meeting, and provides links to other image metadata-related initiatives and resources. The image workshop report summarizing the discussion that took place during the workshop is also available on this site.
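The element set discussed at the workshop is not reproduced here. As a hedged illustration of what "technical metadata for raster images" can mean in practice, the sketch below reads a few basic parameters from the IHDR header chunk of a PNG file: pixel dimensions, bit depth, color type and interlacing. It uses only Python's `struct` module. The field names in the returned dictionary are hypothetical and are not the workshop's element names.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# PNG color type codes as defined in the PNG specification
COLOR_TYPES = {0: "grayscale", 2: "RGB", 3: "indexed", 4: "grayscale+alpha", 6: "RGBA"}


def png_technical_metadata(data: bytes) -> dict:
    """Read basic technical metadata from the IHDR chunk at the start of a PNG file.

    The returned field names are hypothetical, chosen for illustration only.
    """
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    # Every chunk starts with a 4-byte big-endian length and a 4-byte type.
    length, chunk_type = struct.unpack(">I4s", data[8:16])
    if chunk_type != b"IHDR" or length != 13:
        raise ValueError("IHDR chunk missing or malformed")
    width, height, bit_depth, color_type, _compression, _filter, interlace = (
        struct.unpack(">IIBBBBB", data[16:29])
    )
    return {
        "format": "PNG",
        "width_px": width,
        "height_px": height,
        "bits_per_sample": bit_depth,  # bits per palette index for indexed images
        "color_type": COLOR_TYPES.get(color_type, f"unknown ({color_type})"),
        "interlaced": interlace == 1,  # 1 = Adam7 interlacing
    }


# A synthetic header for a 640 x 480, 8-bit RGB image (CRC bytes are placeholders).
ihdr = struct.pack(">IIBBBBB", 640, 480, 8, 2, 0, 0, 0)
header = PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + ihdr + b"\x00" * 4
print(png_technical_metadata(header))
```

Values like these can be captured automatically at scan time. Capture-related details, such as the scanner used or its settings, generally cannot be read back from the file and must be recorded separately.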

RLG News

Aspects of Digital Preservation and Archiving Forum

"Aspects of Digital Preservation and Archiving," the RLG Forum hosted by the Emory University Libraries, took place on May 20-21 with seventy-five attendees. The forum provided an opportunity for RLG members to learn how institutions address issues such as unstable digital media, format obsolescence, migration and back-up, policies, access, proprietary software, and digital preservation requirements. A more detailed write-up will appear in the August 1999 issue of RLG Focus.

Words of advice, wisdom, revelations, and warnings from the speakers and attendees:

Speakers included: Jimmy Adair, ATLA Center for Electronic Texts in Religion; Robin Dale, RLG; Billy E. Frye, Emory University; Edward Gaynor, University of Virginia; Joan Gotwals, Emory University Libraries; Martin Halbert, Emory University Libraries; Alice Hickcox, Emory University Libraries; Erich Kesse, University of Florida; Paul Mangiafico, Duke University; Sue Marsh, RLG; Janice Mohlhenrich, Emory University Libraries; Patrick Moriarty, Emory University Library; Naomi Nelson, Emory University Libraries; Linda Tadic, University of Georgia.

e18, the Eighteenth Century Digitization Project

RLG has joined with the British Library, the English Short Title Catalogue (ESTC) North America, and Primary Source Media (PSM) to develop "e18," the Eighteenth Century Digitization Project.

On the e18 project site, sample ESTC records from several collections, including Eighteenth Century Broadsides, the Gothic Novel, and Women Writers of the Eighteenth Century, are linked to digitized page images.

A unique feature of the Eighteenth Century Digitization Project is its online user survey. Survey responses from ESTC users who visit the demonstration site will be used to guide the further development of the project. Through the online survey, ESTC users can request digitization of sets of materials currently available in Primary Source Media's "Eighteenth Century" microfilm project.

ESTC users can access the Eighteenth Century Digitization Project demonstration and survey through RLG's Eureka on the Web or through the e18 site.

Hotlinks Included in This Issue

Feature Article

Cedars: http://www.leeds.ac.uk/cedars/

eLib: http://www.ukoln.ac.uk/services/elib/

OAIS reference model: http://ssdoo.gsfc.nasa.gov/nost/isoas/ref_model.html

Technical Feature

American Evaluation Association: http://www.eval.org/

Center for Social Research Methods: http://trochim.human.cornell.edu

Hot Off the Web: http://www.hotofftheweb.com/

Human-Computer Interaction (HCI) Group: http://www.hci.cornell.edu/

IBRSystem: http://www.ibrsystem.com/

Logdoor: http://www2.opendoor.com/logdoor/

ScreenCam: http://www.lotus.com/home.nsf/welcome/screencam

SiteInspector: http://siteinspector.linkexchange.com/

Sitescreen: http://www.sitescreen.com/

SnagIt: http://www.snagit.com/

Surf Report: http://www.bienlogic.com/SurfReport/

The Tavistock Institute: http://www.ukoln.ac.uk/services/elib/papers/tavistock/evaluation-guide/

Web Manage (Net Intellect): http://www.netintellect.com/netintellect30.html

WWW Stat: http://www.ics.uci.edu/pub/websoft/wwwstat/

Zoom Project: http://now.cs.berkeley.edu/Td/October/COMPLETE.HTM

Highlighted Web Sites

Lyson Ink Longevity Chart from Wilhelm Research on IAFADP Web Site: http://www.iafadp.org/technical/inklife.html

Calendar of Events

Creating Electronic Texts and Images: http://www.hil.unb.ca/Texts/SGML_course/Aug99/

Digitisation of European Cultural Heritage: Products-Principles-Techniques: http://candl.let.uu.nl/events/dech/dech-main.htm

Fourth ACM Digital Libraries Conference (DL '99): http://fox.cs.vt.edu/DL99/

Preserving Photographs in a Digital World: http://www.rit.edu/~661www1/FRAMESET.html

Rethinking Cultural Publications: Digital, Multimedia, and Other 21st Century Strategies: http://www.nedcc.org/rethink.htm

Announcements

Archaeology Data Service: Survey of User Needs of Digital Data: http://ads.ahds.ac.uk/project/strategies/

Consortium Formed for the Maintenance of the Text Encoding Initiative: http://www.tei-c.org/

Digitising History: A Guide to Creating Digital Resources from Historical Documents: http://hds.essex.ac.uk/g2gp/digitising_history/index.html

Grant Winners Announced for Final Library of Congress-Ameritech Competition: http://memory.loc.gov/ammem/award/99award/award99.html

The Higher Education Digitisation Service (HEDS): Feasibility Study for the JISC Image Digitisation Initiative: http://heds.herts.ac.uk/Guidance/JIDI_fs.html

National Initiative for a Networked Cultural Heritage (NINCH): Request For Proposals: http://www.ninch.org/PROJECTS/practice/rfprfp.html

The Virginia Digital Library Program: http://image.vtls.com

FAQs

Technical Metadata Elements for Image Files Workshop: http://www.niso.org/image.html

RLG News

British Library: http://www.bl.uk/collections/epc/hoshort.html

e18 site: http://www.e18.psmedia.com/index.html

English Short Title Catalogue (ESTC) North America: http://cbsr26.ucr.edu/estcmain.html

Eureka on the Web: http://www.rlg.org/eurekaweb.html

Primary Source Media (PSM): http://www.psmedia.com/site/index.html


Publishing Information

RLG DigiNews (ISSN 1093-5371) is a newsletter conceived by the members of the Research Libraries Group's PRESERV community. Funded in part by the Council on Library and Information Resources (CLIR), it is available internationally via the RLG PRESERV Web site (http://www.rlg.org/preserv/). It will be published six times in 1999. Materials contained in RLG DigiNews are subject to copyright and other proprietary rights. Permission is hereby given for the material in RLG DigiNews to be used for research purposes or private study. RLG asks that you observe the following conditions: Please cite the individual author and RLG DigiNews (please cite the URL of the article) when using the material; please contact Jennifer Hartzell (jlh@notes.rlg.org), RLG Corporate Communications, when citing RLG DigiNews.

Any use other than for research or private study of these materials requires prior written authorization from RLG, Inc. and/or the author of the article.

RLG DigiNews is produced for the Research Libraries Group, Inc. (RLG) by the staff of the Department of Preservation and Conservation, Cornell University Library. Co-Editors, Anne R. Kenney and Oya Y. Rieger; Production Editor, Barbara Berger; Associate Editor, Robin Dale (RLG); Technical Support, Allen Quirk.

All links in this issue were confirmed accurate as of June 10, 1999.

Please send your comments and questions to preservation@cornell.edu.
