
Editors' Note

In this issue we present Steve Puglia's feature article on costs of digitization and also announce the availability of three new reports from the U.K. and Canada that present cost figures associated with digital collection building. As you will see, actual and estimated expenses can vary dramatically from one activity to the next and between projects and programs. Obviously these studies do not answer conclusively the question of how much digitization will cost, but they do provide an initial assessment of the information currently available. For instance, there is growing evidence that the scanning step may represent a relatively small percentage of the overall costs associated with digital programs. We hope these reports will encourage others to confirm, challenge, or offer their own cost information, and to take a more critical look at the financial reality behind digitization initiatives. We welcome suggestions for future articles - or letters to the editors - on this topic.

Feature Article

The Costs of Digital Imaging Projects (1)

By Steven Puglia, National Archives and Records Administration

steven.puglia@arch2.nara.gov

Introduction

When planning and budgeting for a digital imaging project, there are many components that need to be considered, including the following:

The last category - long-term maintenance of the digital images and associated metadata - is often not considered part of project costs, but falls to institutions to absorb, so it is best to plan for the ongoing costs from the beginning of the project. A publication that is helpful in planning and budgeting for the various costs of digital imaging projects is the "RLG Worksheet for Estimating Digital Reformatting Costs." (2) A recent cost study that provides insight into a number of cost issues associated with digital imaging projects is The Cost of Digital Image Distribution: The Social and Economic Implications of the Production, Distribution and Usage of Image Data, by Howard Besser and Robert Yamashita. This Museum Educational Site Licensing (MESL) project report covers costs associated with creating and distributing digital images for art sources, but many of its points about costs are likely to apply to other types of digital imaging projects. The authors are skeptical that the costs of developing and maintaining digital image collections will decrease over time, and conclude that digital access is not likely to be cost effective anytime soon.

Analyzing Digitization Costs

What are digital imaging projects really going to cost? Each project will be different, but we are starting to gather data that can be used as a reference to determine whether specific project costs are "in the ballpark." Tables 1-5 present both estimated and actual costs and percentages from a number of different projects.

Note: The average overall costs, the individual average costs, and the individual average percentages were calculated from slightly different data sets, as not all costs were available for every project. Therefore, the individual average costs add up to more than the average total costs, and the individual percentages add up to more than 100%. The "adjusted averages" exclude individual project costs that significantly exceeded the average and fell outside the normal distribution on a frequency histogram. The costs shown in brackets, [$ ], are the totals of the individual average costs. The percentages shown in brackets, [ %], are normalized to 100%. Overall and adjusted average figures are derived from a number of sources, including the Library of Congress' National Digital Library/Ameritech Competition, Round One (1996) and Round Three (1999); the National Archives and Records Administration's Electronic Access Project (NARA-EAP), completed in April 1999; and other projects and published sources.
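The Note says only that the bracketed percentages are "normalized to 100%"; it does not spell out the procedure. Proportional rescaling is one straightforward reading, sketched below in Python. The process names come from the tables, but the input percentages are hypothetical and are not the article's figures.

```python
def normalize_to_100(percentages):
    """Rescale percentages proportionally so that they sum to exactly 100."""
    total = sum(percentages)
    if total <= 0:
        raise ValueError("percentages must sum to a positive value")
    return [p * 100.0 / total for p in percentages]

# Hypothetical averages drawn from different project subsets, so they sum to
# more than 100 (illustrative numbers, not the article's figures).
averages = {"Digitizing": 40.0, "Metadata Creation": 35.0, "Other": 45.0}
normalized = normalize_to_100(list(averages.values()))
for name, value in zip(averages, normalized):
    print(f"{name}: [{value:.1f}%]")
```

Each value keeps its share of the original total, so the relative weight of each process is preserved while the column sums to 100%.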

[Table not reproduced: average costs by process (Digitizing, Metadata Creation, Other (4), Totals) and average Images/Day (5).]

Although the actual range of numbers can vary significantly, it appears that, in general, production figures break down into the following:

Some published estimated costs for digitizing textual records as 1-bit files (most appropriate for documents with clean, printed type) include: (6)

The cost of digitizing and of ongoing maintenance is proportional to the amount of data, so larger image files will cost more to produce and to maintain. The following compares file size to digitizing costs:

[Table not reproduced: costs by process (Digitizing, Metadata Creation, Other, Totals) for material priced per item (7), per page (8), per photo, and per page.]

[Table not reproduced: figures by process (Digitizing, Metadata Creation, Other, Totals) for material priced per item, per page, per photo, and per page.]

In addition to creating digital images, a number of text-based digitization projects are producing searchable text, either by re-keying the information or by using Optical Character Recognition (OCR) software (average OCR costs include a mix of projects using both raw OCR and corrected data). Table 4 presents reported costs for the two approaches.

[Table 4 not reproduced: reported per-page figures for re-keyed text and OCR text by process (Digitizing (9), Metadata Creation, Other, Totals).]

Average cost figures may be helpful to institutions in evaluating cost projections, but very few real projects will match the average, and costs at the extremes of the ranges may be legitimate. Table 5 presents the cost ranges reported by a number of institutions for the various steps in the digitization process. As can be seen, actual and estimated costs vary considerably.

Table 5. Reported cost ranges by digitization category

| Digitization Category | Digitizing | Metadata Creation | Other | Overall Costs |
|---|---|---|---|---|
| Overall Projections | $0.25-$19.80 | $0.75-$34.65 | $0.45-$50.20 | $1.85-$96.45 |
| Adjusted Projections | $0.25-$16.65 | $0.75-$17.25 | $0.45-$28.15 | $1.85-$42.45 |
| Mixed Collections | $3.45-$16.50 | $2.85-$17.25 | $4.50-$21.55 | $3.25-$40.50 |
| Single Items | $1.90-$8.00 | $5.75-$12.85 | $7.60-$28.15 | $23.10-$35.80 |
| Photographs | $2.30-$16.65 | $4.85-$6.45 | $3.35-$24.65 | $5.20-$42.45 |
| Books/Pamphlets | $2.10-$6.10 | $1.50-$11.10 | $1.35-$6.90 | $4.60-$14.40 |
| Re-keyed Text | $2.55-$5.00 | $2.35-$5.70 | Limited Data | Limited Data |
| OCR | $0.25-$3.60 | $0.75-$2.40 | $0.40-$2.10 | $1.85-$7.65 |
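To use Table 5 the way the article suggests, as a check on whether a project estimate is "in the ballpark," one could encode the Overall Costs column and test a figure against it. This is a minimal Python sketch; the function name `in_ballpark` is hypothetical, and Re-keyed Text is omitted because the table reports "Limited Data" for its overall costs.

```python
# Overall cost ranges (low, high) in US dollars per unit, from Table 5.
OVERALL_COST_RANGES = {
    "Overall Projections": (1.85, 96.45),
    "Adjusted Projections": (1.85, 42.45),
    "Mixed Collections": (3.25, 40.50),
    "Single Items": (23.10, 35.80),
    "Photographs": (5.20, 42.45),
    "Books/Pamphlets": (4.60, 14.40),
    "OCR": (1.85, 7.65),
}

def in_ballpark(category, cost):
    """True if a per-unit cost estimate falls inside the reported overall range."""
    low, high = OVERALL_COST_RANGES[category]
    return low <= cost <= high

print(in_ballpark("Photographs", 12.00))      # True
print(in_ballpark("Books/Pamphlets", 20.00))  # False
```

A figure outside the range is not necessarily wrong, since the article notes that costs at the extremes may be legitimate; it is simply a prompt to look more closely.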

Table 6 presents an overview of the relative cost ratings per category for digitization for various types of material, including the creation of searchable text files. Simon Tanner and Joanne Lomax Smith of the Higher Education Digitization Service (HEDS) in the UK have taken a similar approach to cost assessment using different parameters in a paper for the 1999 Digital Resources for the Humanities Conference. The authors created "The HEDS Matrix of Potential Cost Factors," (10) which compares relative costs for various aspects of digitization from scanning specifications, to steps in the digitization process, to resulting file sizes.

Table 6. Relative cost ratings by digitization category

| Digitization Category | Digitizing | Metadata Creation | Other | Overall Costs |
|---|---|---|---|---|
| Mixed Collections | Higher | Slightly Higher | Lower | Slightly Higher |
| Single Items | Lower | Slightly Higher | Higher | Higher |
| Photographs | Slightly Higher | Lower | Average | Lower |
| Books/Pamphlets | Lower | Lower | Lower | Lower |
| Re-keyed Text | Lower | Lower | Lower | Lower |
| OCR | Very Low | Very Low | Very Low | Very Low |

Ongoing and Maintenance Costs

Only a few models for estimating the costs of maintaining digital images and data have been published, and they vary considerably. In 1996, Charles Lowry and Denise Troll estimated that digital files would be sixteen times more expensive to maintain and access than their paper counterparts. (11) At least one Federal government study, from the Environmental Protection Agency, indicated that the cost to install, staff, and maintain network infrastructure and the digital data for the first 10 years is up to five times the initial investment. (12)

Two years ago, the cost to maintain the master image files (off-line) and access files (on-line) for NARA-EAP during the first ten years was estimated at 50% to 100% of initial investment. The budget covered the following:

| Budget item | Cost |
|---|---|
| Digitizing | $940,000 |
| Network Upgrades | $800,000 |
| Custom database development | $2.5 million |

The database development involved custom programming and cost more than 2.5 times as much as digitizing the images, and upgrading the file servers to accommodate the on-line images cost almost as much as the digitizing. Major IT infrastructure costs are often budgeted separately from digitizing projects, so the network upgrade and database development costs were not factored into the estimates for long-term maintenance of the digital images. If the infrastructure costs were included, the estimates cited below would be substantially higher.
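The comparisons in the paragraph above follow directly from the three NARA-EAP budget figures. A quick Python check; the dictionary layout is only illustrative.

```python
# NARA-EAP budget items as listed in the text (US dollars).
budget = {
    "Digitizing": 940_000,
    "Network Upgrades": 800_000,
    "Custom database development": 2_500_000,
}

digitizing = budget["Digitizing"]
for item, cost in budget.items():
    # Express each item as a multiple of the digitizing cost.
    print(f"{item}: {cost / digitizing:.2f} x digitizing")
```

The database line comes to about 2.66 times the digitizing cost and the network upgrades to about 0.85 times, matching "more than 2.5 times" and "almost as much."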

A 1999 estimate for maintaining the EAP images for the next ten years was predicated on the following:

These two costs together represent $1.70 to $4.70 per image for the first 10 years, or 14% to 38% of the initial cost per image ($211,000 to $583,000).

A second approach would be to use the cost model presented in the Cornell report on computer output microfilm, which reports maintenance estimates from several studies in the range of $0.10-$0.11/Mb per year. The estimate to maintain the NARA-EAP files would be $9.65 to $10.62 per image for the first 10 years, for a total of $1.2 million - $1.3 million. This would represent 55% to 60% of the initial cost per image.
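The Cornell-style model is a flat rate per unit of data per year, so the per-image figure is just size x rate x years. The article does not state the file size per image; this Python sketch assumes about 9.65 units ("Mb" in the text) per image, which is the size implied by the stated $9.65 over ten years at $0.10. The function name is hypothetical.

```python
def maintenance_cost_per_image(size_per_image, rate_per_unit_per_year, years=10):
    """Flat-rate storage maintenance: size x annual rate x number of years."""
    return size_per_image * rate_per_unit_per_year * years

# Assumption: about 9.65 units of data ("Mb" in the text) per image, the size
# implied by the stated $9.65 over ten years at $0.10 per unit per year.
SIZE_PER_IMAGE = 9.65

low = maintenance_cost_per_image(SIZE_PER_IMAGE, 0.10)
high = maintenance_cost_per_image(SIZE_PER_IMAGE, 0.11)
print(f"${low:.2f} to ${high:.2f} per image for the first ten years")
```

Because the model is linear in file size, doubling the data per image doubles the maintenance estimate, which is the proportionality noted earlier in the article.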

A third approach would be to pay a commercial service to maintain the digital images and associated metadata. One proposed business model for a commercial file-maintenance service breaks the cost down as follows:

| Cost component | Share of cost |
|---|---|
| Back-up storage | 62% |
| Amortization of disk storage | 27% |
| Hardware maintenance | 11% |

This assumes maintenance of multiple copies of files for security and data recovery. The largest percentage of the maintenance cost will be labor. So even though digital storage costs will continue to decrease (at an estimated rate of 37.5% per year), the overall costs will continue to increase. Using these percentages, we can derive a third estimate, based on use of a commercial service, of $13.60 to $39.40 per image for the first ten years, representing 77% to 224% of the initial cost per image ($1.7 million to $4.9 million). If digital storage costs did not decline, the figures would be $48.30 to $140.00 per image for the first ten years, or 275% to 796% of the initial cost per image ($6 million to $17.4 million).
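A steady annual price decline compounds quickly. The Python sketch below sums a geometric series to show how much ten years of a cost that falls 37.5% per year adds up to, relative to its first-year value. It covers only a single declining cost component and is not a reconstruction of the figures above, whose underlying assumptions (which components decline, and from what starting prices) are not given in the text.

```python
def ten_year_multiplier(annual_decline, years=10):
    """Total cost over `years` relative to the first year's cost, when the
    yearly cost falls by a fixed fraction each year (a geometric series)."""
    ratio = 1.0 - annual_decline
    return sum(ratio ** year for year in range(years))

flat = ten_year_multiplier(0.0)          # prices never fall: 10 x first-year cost
declining = ten_year_multiplier(0.375)   # prices fall 37.5% per year
print(flat, round(declining, 2))         # 10.0 2.64
```

Under this simple model, a declining component costs roughly a quarter of what it would at constant prices over ten years, which is why the no-decline figures are several times higher.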

Considering the wide disparity in cost projections for maintaining digital images and associated data, planning for 50% to 100% of initial cost per image for maintenance for the first ten years still seems reasonable since it is in the middle of the range of projections. This works out to 5% to 10% of initial cost per image per year, although file copying and conversion are not done on a yearly basis.

A compelling case can be made for digital imaging to facilitate access and improve business processes, but it may not be the most appropriate approach for long-term retention of information. In a 1998 review by the Association for Information and Image Management (AIIM) of ten technologies that will affect document management over the next two years, number five was the "replacement of optical disk systems with COM microfiche" by companies that are finding the cost of digital maintenance prohibitive. (13)

Conclusion

This presentation of cost findings from digital imaging projects leads to the following conclusions:

Additional research and data analysis needs to be done, including the following:

1. Adapted from a paper presented at the Electronic Media Group meeting during the American Institute for Conservation Annual Meeting in St. Louis, MO, June 1999. The author acknowledges the contributions of Dan Jansen, Carl Fleischhauer, and Steve Chapman.

2. "RLG Worksheet for Estimating Digital Reformatting Costs," http://www.rlg.org/preserv/RLGWorksheet.pdf.

3. Image acquisition costs included creation of the master, access, and thumbnail files, file header and tracking data, and storage on primary and backup media.

4. Includes identifying and preparing materials, monitoring, quality control, and project management.

5. Average number of images per day over the life of the project. Certain aspects of the project, such as digitizing, are probably done in a shorter time period with a higher production rate.

6. From Anne R. Kenney, Digital to Microfilm Conversion: A Demonstration Project, 1994-1996, http://www.library.cornell.edu/preservation/com/comfin.html.

7. Includes a mix of text, photographs, and other materials; costs per item.

8. Individual items such as manuscript collections.

9. For re-keying, digitization is the re-keying of text; for Optical Character Recognition (OCR), digitization includes scanning images first and then OCR.

10. Simon Tanner and Joanne Lomax Smith, "Digitisation: How Much Does it Really Cost?" September 1999, p.7. http://heds.herts.ac.uk/HEDCinfo/Papers/drh99.pdf.

11. Charles Lowry and Denise Troll, "Virtual Library Project," Serials Librarian, "NASIG Proceedings: Tradition, Technology, and Transformation," Part 1, Vol. 28, Nos. 1/2, 1996.

12. "EPA Superfund Document Management System Concept," 1991.

13. "Put It Here- AIIM 1999: Storage Summary," INFORM, Vol. 13, Issue 6, June 1999.

Technical Feature

Image Capture Beyond 24-Bit RGB

Donald S. Brown, Imaging Systems Engineer, Imaging Science Division, Eastman Kodak Company

dbrown@kodak.com

Introduction

The human visual system can distinguish roughly 10 million colors. A 24-bit color image (8 bits per color) can encode over 16 million color values. Why would it ever be necessary to capture an image using 30-, 36-, or 48-bit color? And why use more than three color channels? The answer is found in the details of how images are captured, displayed, and viewed.

Any practical color-reproduction system (photography, television, lithography) relies on the trichromatic nature of the human visual system (HVS). The eye has three kinds of color sensors, called cones. As a result, only three primary colors (red, green, and blue) are needed to excite these cones in different combinations to create those 10 or so million colors. However, having only three receptors means the HVS cannot sense the individual contribution that each wavelength of a color stimulus makes, only the aggregate effect. As a consequence, two colors made of physically different materials, and thus having different spectral compositions, can look identical, depending on the illumination and viewing conditions. (1)

Three color channels (RGB) are sufficient to encode all hues, but are the 256 levels (8 bits) in each channel of a 24-bit image adequate? Theoretical calculations and practical systems suggest the right 256 levels can create very high-quality reproductions, depending on the quality of those 8 bits and the type of output. (2) Greater bit depth is unquestionably needed at capture to achieve 256 useful levels in each color channel, for reasons described below.

Digital Image Capture

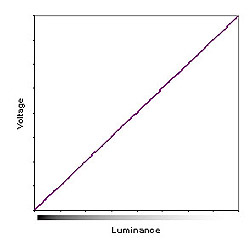

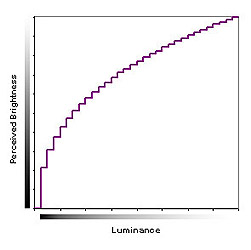

For simplicity, Figure 1 illustrates a monochrome grayscale; however,

the same concept applies to each channel in an RGB image. Most

digital images are captured using a CCD (charge-coupled device). CCDs

are actually analog devices, in that the voltage output for each

picture element on a CCD varies continuously in proportion to the

intensity of the light striking it (Figure 1a).

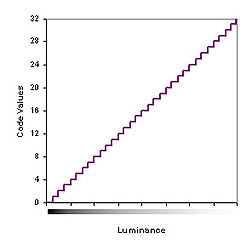

(3) The digitization happens in the

analog-to-digital (A/D) converter. A/D converters take the CCD-output

voltage range and divide it into a discrete number of levels (e.g., a

5-bit A/D converter has 32 levels or code values). The conversion

from voltage to code value is also proportional, or linear (Figure

1b). Therefore, the difference from one code value to the next

represents an equal increment in the light intensity as seen by the

sensor.
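The linear conversion described above can be sketched as an idealized A/D converter in Python. Noise, offsets, and converter nonlinearity are ignored, and the function name is illustrative.

```python
def adc_code(voltage, full_scale, bits):
    """Idealized linear A/D conversion: equal voltage steps become equal code steps."""
    levels = 2 ** bits
    fraction = min(max(voltage / full_scale, 0.0), 1.0)
    # Scale to the code range; a full-scale input lands on the top code (levels - 1).
    return min(int(fraction * levels), levels - 1)

# A 5-bit converter has 32 code values (0-31).
for v in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(v, adc_code(v, full_scale=1.0, bits=5))
```

Each code step corresponds to 1/32 of the full-scale voltage, whether it falls in the shadows or the highlights.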


Here is the catch. The human visual system does not see equal changes in light intensity as equal changes in perceived brightness. The response is nonlinear, something more like a power function as shown in Figure 1c. Figure 2 illustrates an image as seen by the sensor (top) and by the HVS (bottom).

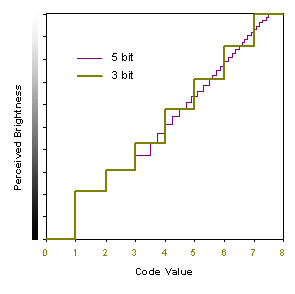

In visual terms, the A/D converter has assigned too many code values to light tones and possibly too few to dark ones, as evidenced by the loss of detail in the shadows (Figure 2). Scanners and digital cameras solve this problem by capturing more bits than will ultimately be kept, so that the dark areas receive enough levels; this leaves a surplus of values in the light areas. Keeping all the visually redundant code values would require more memory, larger file sizes, and slower image processing. Typically, capture systems reduce the bit depth (from 12 bits to 8 bits, for example) by choosing fewer levels, but levels that represent more visually equal increments. Figure 3 demonstrates the idea by reducing the 5-bit (32-level) linear data to 3-bit (8-level) data with roughly equal visual increments. This step picks the best 8 values from the 32 levels available. If too few bits are used to begin with, the resulting image may show quantization artifacts (posterization), which appear as visible tonal steps (Figure 4).
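Figure 3's reduction can be mimicked in code. The article does not give the curve behind Figure 3, so this Python sketch uses the CIE 1976 L* lightness formula as a stand-in for the power-like visual response: it asks for eight levels equally spaced in L* and looks up the nearest 5-bit linear code for each. The function names are illustrative.

```python
def lstar_to_linear(lstar):
    """Inverse CIE 1976 L*: lightness (0-100) to relative luminance (0-1)."""
    if lstar > 8.0:
        return ((lstar + 16.0) / 116.0) ** 3
    return lstar / 903.3  # linear segment near black

def pick_levels(in_bits, out_bits):
    """Choose 2**out_bits linear input codes roughly equally spaced in lightness."""
    max_in = 2 ** in_bits - 1
    n_out = 2 ** out_bits
    return [
        round(lstar_to_linear(100.0 * k / (n_out - 1)) * max_in)
        for k in range(n_out)
    ]

print(pick_levels(5, 3))  # [0, 1, 2, 4, 8, 13, 21, 31]
```

The gaps between the chosen codes grow from 1 in the shadows to 10 in the highlights: nearly every dark code is kept, while many light codes are skipped.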


High-end scanners and digital cameras use 12-, 14-, or 16-bit A/D converters, and 12 bits per record (36-bit color) is probably the minimum for high-quality work. (4) Within the scanner software, the linear data can be modified by a variety of automatic and manual adjustments, which will include a reshaping of the data to a more visually acceptable scale. The data is then delivered as 8 bits per channel, ready for display, printing, etc. It is more common now for scanners to provide access to the higher bit-depth data directly. (5) And, improved 16-bit-per-color capabilities in Adobe Photoshop software version 5.0 make this data easier to manipulate. Also, the International Color Consortium (ICC) color management system is compatible with 12- and 16-bit-per-color images. It is always an advantage to store higher bit-depth data to ensure the greatest flexibility in subsequent processing for various purposes; however, more storage resources are required.
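Reusing the same L*-based stand-in for visual response (an assumption, not the article's model), one can count how many of 256 perceptually spaced output levels land on distinct linear input codes. This Python sketch illustrates why high-bit capture matters; it does not derive the 12-bit recommendation.

```python
def lstar_to_linear(lstar):
    """Inverse CIE 1976 L*: lightness (0-100) to relative luminance (0-1)."""
    if lstar > 8.0:
        return ((lstar + 16.0) / 116.0) ** 3
    return lstar / 903.3

def distinct_output_levels(in_bits, out_bits=8):
    """Count perceptually spaced output levels that map to distinct linear input codes."""
    max_in = 2 ** in_bits - 1
    n_out = 2 ** out_bits
    codes = {
        round(lstar_to_linear(100.0 * k / (n_out - 1)) * max_in)
        for k in range(n_out)
    }
    return len(codes)

for bits in (8, 10, 12):
    print(f"{bits}-bit linear capture: {distinct_output_levels(bits)} of 256 levels")
```

Under this model, 8- and 10-bit linear data collapse several dark output levels onto the same input code, while 12-bit data supplies all 256, which is consistent with 12 bits per channel being a practical minimum.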

Bit depth is only part of the picture. The number of bits per color used in a particular scanner or digital camera says nothing about dynamic range or noise. The recorded range from lightest white to darkest black of any two scanners can vary greatly, even though both might use the same number of bits per channel. And, simply putting a 16-bit A/D converter in a scanner does not prevent noise from reducing the useful number of levels to 12 bits or fewer. To decrease noise, increase dynamic range, and improve image quality, there is no substitute for high-quality components and for techniques such as cooling the sensor and time delay and integration (TDI). Published scanner specifications should be read like the marketing documents they are. Even clear-cut specifications such as bit depth have been subject to questionable interpretation. (6) Scanner and digital camera specifications are an indication of quality, but the full story is known only when real images are captured and evaluated.
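A common rough way to see how noise eats into bit depth is to treat one RMS noise step as the smallest level that can be reliably told apart, giving about n - log2(noise) usable bits. This Python sketch applies that rule of thumb; it is a simplification, not how scanner makers specify performance, and the noise values are hypothetical.

```python
import math

def effective_bits(adc_bits, noise_rms_codes):
    """Rough usable bit depth when random noise of the given RMS size (in code values) is present."""
    # Noise below one code value costs nothing in this simple model.
    return adc_bits - math.log2(max(noise_rms_codes, 1.0))

print(effective_bits(16, 16.0))  # 12.0
print(effective_bits(12, 0.5))   # 12.0
```

In this model, a 16-bit converter with 16 code values of noise performs no better than a clean 12-bit one, which is the point made above about noise reducing the useful number of levels.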

Multispectral Imaging

Obviously, capturing good data in each color channel is very important, but since the HVS is trichromatic, what is the motivation for multispectral imaging, which is based on capturing more than three channels? The answer is more accurate color.

It should be noted that multispectral imaging also includes systems that obtain information from parts of the spectrum beyond the visual region. For instance, remote-sensing systems have infrared channels to supplement or enhance data captured in the visual spectrum. Only systems in pursuit of more faithful color capture are discussed here.

Given identical viewing conditions, a colorimetric scanner, such as the IBM Pro/3000, would essentially see the world as the HVS does. Commercial scanners generally do not have such responses because of practical design considerations. Instead, a color-correction step involving look-up tables, matrices, or 3-D look-up tables is applied to the scanner data to improve color-matching performance. Unfortunately, color correction, or calibration, provides only a partial solution. The correction is only valid for the particular light source and media type for which it was derived. In many cases, this is perfectly acceptable. However, even small changes, such as dye-set differences from one generation of color film or paper to another, technically require different color corrections.
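A 3 x 3 matrix is the simplest of the correction methods listed above. This Python sketch applies one to a single RGB triple, assuming linear scanner values. The coefficients are hypothetical; a real matrix is fitted for one particular scanner, light source, and media type, which is exactly the limitation just described. Look-up tables are not shown.

```python
def apply_matrix(matrix, rgb):
    """Multiply a 3 x 3 correction matrix by one (R, G, B) triple."""
    return tuple(
        sum(matrix[row][col] * rgb[col] for col in range(3)) for row in range(3)
    )

# Hypothetical coefficients; each row sums to 1 so neutral grays stay neutral.
CORRECTION = [
    [1.20, -0.15, -0.05],
    [-0.10, 1.15, -0.05],
    [0.00, -0.20, 1.20],
]

print(apply_matrix(CORRECTION, (0.5, 0.5, 0.5)))  # approximately (0.5, 0.5, 0.5)
print(apply_matrix(CORRECTION, (0.8, 0.4, 0.2)))
```

Because the same nine numbers are applied to every pixel, a matrix fitted on one film's dyes can mis-correct another film's dyes even when both look alike to the eye.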

For high-fidelity color, three-channel capture is limited, especially in the case of original artwork created with multiple pigments. It is not surprising, then, that multispectral capture has been applied in the direct capture of fine artwork. Of particular note is the VASARI project (Visual Arts System for Archiving and Retrieval of Images) (7), which has produced systems in association with The National Gallery, London and The Uffizi Gallery, Florence. Multispectral systems in these applications are designed to more accurately estimate CIE tristimulus values (which characterize the HVS trichromatic response) or, better yet, estimate an entire spectral reconstruction for each pixel. Full spectral reconstruction has the advantage of allowing differences in illumination between the capture and display to be corrected. Calibration of such systems will be much less dependent on using the same or similar materials in both the object and the calibration target. Systems have been constructed or proposed that use from five to sixteen separate channels. (8) The number of channels and their spectral characteristics, as well as methods to regress spectral or colorimetric data, are topics of current research.
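The article mentions methods to regress colorimetric data from more than three channels as a research topic without naming one. Ordinary linear least squares is one simple option, sketched here in Python with NumPy on synthetic data: six hypothetical channels, a set of calibration patches, and XYZ reference values generated from a made-up linear relation so that the fit can be checked. Real systems use measured patches and may fit nonlinear models or reconstruct full spectra instead.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n_patches, n_channels = 24, 6

# Synthetic calibration data: camera responses for each patch in six hypothetical channels.
responses = rng.random((n_patches, n_channels))
# Made-up linear relation used only to generate reference XYZ values for the demo.
true_map = rng.random((n_channels, 3))
xyz_reference = responses @ true_map

# Fit a 6 x 3 matrix mapping channel responses to XYZ by linear least squares.
fitted_map, residuals, rank, singular_values = np.linalg.lstsq(
    responses, xyz_reference, rcond=None
)

# Apply the fitted matrix to a new pixel's six channel values.
pixel = rng.random(n_channels)
print("estimated XYZ:", pixel @ fitted_map)
```

With noise-free synthetic data the fit recovers the generating matrix; with real measurements, the residuals indicate how well the chosen channels can predict tristimulus values.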

Multispectral systems are very computationally intensive because of the greater amount of information to process and the algorithmic conversions to spectral or colorimetric data. It should be noted that the same concerns about bit depth in three-color imaging apply, and that there seems to be a consensus that 12-bit-per-channel capture is sufficient. (9) Though spectrally reconstructed data is more complete, the files are very large. Colorimetric data, on the other hand, can be stored in three 8-bit channels. To retain greater color precision, a 32-bit image (10 bits L*, 11 bits a*, 11 bits b*) has been used.
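The 32-bit L*a*b* layout mentioned above (10 bits for L*, 11 for a*, 11 for b*) fits exactly into one 32-bit word. The article does not specify the bit order or value ranges, so this Python sketch assumes L* in 0-100, a* and b* in -128 to +128, and L* in the high bits; the function names are hypothetical.

```python
def _quantize(value, lo, hi, bits):
    """Map value in [lo, hi] to an integer code in [0, 2**bits - 1]."""
    max_code = (1 << bits) - 1
    code = round((value - lo) / (hi - lo) * max_code)
    return min(max(code, 0), max_code)

def _dequantize(code, lo, hi, bits):
    """Inverse of _quantize, up to the quantization step."""
    max_code = (1 << bits) - 1
    return lo + code / max_code * (hi - lo)

def pack_lab32(l_star, a_star, b_star):
    """Pack L* (10 bits), a* (11 bits), b* (11 bits) into one 32-bit integer."""
    l_code = _quantize(l_star, 0.0, 100.0, 10)
    a_code = _quantize(a_star, -128.0, 128.0, 11)
    b_code = _quantize(b_star, -128.0, 128.0, 11)
    return (l_code << 22) | (a_code << 11) | b_code

def unpack_lab32(word):
    """Recover approximate (L*, a*, b*) from a packed 32-bit word."""
    l_code = (word >> 22) & 0x3FF
    a_code = (word >> 11) & 0x7FF
    b_code = word & 0x7FF
    return (
        _dequantize(l_code, 0.0, 100.0, 10),
        _dequantize(a_code, -128.0, 128.0, 11),
        _dequantize(b_code, -128.0, 128.0, 11),
    )

word = pack_lab32(53.2, -20.5, 40.1)
print(hex(word), unpack_lab32(word))
```

With 10 bits, one L* step is about 0.1 unit, compared with about 0.39 unit when L* is stored in 8 bits, at a cost of one extra byte per pixel.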

Conclusion

Greater bit depth (12 bits or more per channel) is necessary to achieve an appropriate 8-bit-per-channel image for display. However, different 24-bit images are required for different output options, so it is desirable to retain the higher bit-depth image for multiple purposes. Of course, retaining high bit-depth images puts a significant burden on storage resources. Additionally, there are still few file formats and compression options for images with more than 8 bits per channel.

Three-color capture in almost all cases requires a color-correction step to estimate colorimetric values. The validity of such a color correction is limited to the specific set of colorants for which it was derived. Multispectral imaging is a way to overcome these limitations. Colorimetric fidelity, however, does not guarantee perfect reproductions, because gamut limitations of the output media may, in some cases, cause greater harm than in-gamut color errors.

By their nature, multispectral imaging systems process a greater amount of data than an RGB system, and currently require custom tools and less-common file formats. Images produced by multispectral systems are generally not directly viewable; rather, they serve as master images from which derivatives are produced for display or printing. The same is often true for higher bit-depth images. Multispectral systems for precise color capture are still in the realm of custom, research-oriented devices. As digital imaging becomes more powerful and less expensive, multispectral systems may become more common.

Footnotes

1. The effect of viewing environment, including flare and viewer adaptation, can be very significant. An excellent explanation of this topic is given in: Edward J. Giorgianni and Thomas E. Madden, Digital Color Management (Reading, MA: Addison-Wesley, 1998), 14.

2. R.W.G. Hunt, "Bits, Bytes, and Square Meals in Digital Imaging," Proceedings of IS&T/SID Fifth Color Imaging Conference: Color Science, Systems, and Applications. (1997): 1-5; Shin Ohno, "Color in Digital Photography, Color Quality of Digital Photography Prints," Proceedings of IS&T/SID Fifth Color Imaging Conference: Color Science, Systems, and Applications. (1997): 100-104; Fred Mintzer, "Developing Digital Libraries of Cultural Content for Internet Access," IEEE Communications Magazine v37 (Jan 1999): 72-78.

3. Gerald C. Holst, CCD Arrays, Cameras and Displays, 2nd ed. (Bellingham, WA: SPIE Optical Engineering Press, 1998), 78.

4. John Cupitt, David Saunders, and Kirk Martinez, "Digital Imaging in European Museums," Proceedings of SPIE vol. 3025, Very High Resolution and Quality Imaging II (1997): 144-151; Franziska Frey and Sabine Süsstrunk, "Image Quality Requirements for the Digitization of Photographic Collections," Proceedings of SPIE vol. 2663, Very High Resolution and Quality Imaging (1996): 2-7.

5. Andrew Rodney, "Correcting at the Scan Stage," PEI Magazine (Jan 1999): 18-26; "Product Watch: Colorful Scanning," Publish (Aug 1998).

6. "News Beat: Court Rules Umax Scanners a Bit Shy," Publish (March 1999).

7. A. Abrardo, et al., "Art-Works Colour Calibration by Using the VASARI Scanner," Proceedings of IS&T/SID Fourth Color Imaging Conference: Color Science, Systems, and Applications (1996): 94-97; K. Martinez, "High Quality Digital Imaging of Art in Europe," Proceedings of SPIE vol. 2663, Very High Resolution and Quality Imaging (1996): 69-75; Kirk Martinez, John Cupitt, and David Saunders, "High Resolution Colorimetric Imaging of Paintings," Proceedings of SPIE vol. 1901, Cameras, Scanners, and Image Acquisition Systems (1993): 25-36.

8. Y. Miyake et al., "Development of Multiband Color Imaging Systems for Recordings of Art Paintings," Proceedings of SPIE vol. 3648, IS&T/SPIE Conference on Color Imaging: Device-Independent Color, Color Hardcopy, and Graphic Arts IV, (1999): 218-225; Bernhard Hill, "Multispectral Color Technology: A Way toward High Definition Color Image Scanning and Encoding," Proceedings of SPIE vol. 3409, EUROPTO Conference on Electronic Imaging: Processing, Printing, and Publishing in Color, (1998): 2-13; Shoji Tominaga, "Spectral Imaging by a Multi-Channel Camera," Proceedings of SPIE vol. 3648, IS&T/SPIE Conference on Color Imaging: Device-Independent Color, Color Hardcopy, and Graphic Arts IV, (1999): 38-47; Roy S. Berns et al., "Multi-Spectral-Based Color Reproduction Research at the Munsell Color Science Laboratory," Proceedings of SPIE vol. 3409, EUROPTO Conference on Electronic Imaging: Processing, Printing, and Publishing in Color (1998): 14-25.

9. Hill, "Multispectral Color Technology," 11; Friedhelm König and Werner Praefcke, "The Practice of Multispectral Image Acquisition," Proceedings of SPIE vol. 3409, EUROPTO Conference on Electronic Imaging: Processing, Printing, and Publishing in Color, (1998): 34-41; Henri Maître et al., "Spectrometric Image Analysis of Fine Art Paintings," Proceedings of IS&T/SID Fourth Color Imaging Conference: Color Science, Systems, and Applications. (1996): 50-53; David Saunders and Anthony Hamber, "From Pigments to Pixels: Measurement and Display of the Colour Gamut of Paintings," Proceedings of SPIE vol. 1250, Perceiving, Measuring, and Using Color (1990): 90-102.


FAQs

Question:

My monitor offers a 32-bit color display setting. I am confused about this option, as 32 is not divisible by three. How does this work in an RGB display that distributes the total number of bits among red, green, and blue channels?

Answer:

We asked Don Brown, the author of this issue's Technical Feature, to respond to the question:

CRT (cathode ray tube) monitors are analog devices. The computer's video board converts digital RGB code values to voltages controlling the red, green, and blue electron guns in the CRT, which in turn cause the appropriate phosphors on the screen to glow. The video board determines the color bit-depth of the display. It also relieves the computer's CPU and memory of the computational burden of constantly recalculating and updating the value for each pixel on the screen. This is an especially complex task for 3D graphics, usually requiring a graphics accelerator board.

Some video boards offer a 32-bit color option. Don't be fooled. This simply means 24-bit color plus an 8-bit alpha channel used to control the transparency of each pixel. The alpha channel is not about improving color precision; it is all about efficiency in rendering complex graphics involving transparency, blending, and overlays. This is a big concern in certain applications, the most demanding of which are computer games. Depending on the specific video/graphics board, more VRAM may be needed to support 32-bit display than 24-bit display at the same screen resolution, although some boards move the data around in 32-bit blocks regardless, so there is no impact on VRAM. If your only concern is high-quality display of still images, choose 24-bit (or 32-bit if that is the only true-color option) and buy yourself some higher screen resolution.
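The VRAM point is simple arithmetic: one screen image needs width x height x bits-per-pixel / 8 bytes. A minimal Python sketch; the 1024 x 768 resolution is just an example, and real boards may need more memory for padding, double buffering, or textures.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to hold one screen image at the given color depth."""
    return width * height * bits_per_pixel // 8

for bpp in (16, 24, 32):
    mb = framebuffer_bytes(1024, 768, bpp) / 2 ** 20
    print(f"{bpp}-bit: {mb:.2f} MB")
```

At 1024 x 768, moving from 24-bit to 32-bit raises the frame buffer from 2.25 MB to 3.00 MB, unless the board already stores 24-bit pixels in 32-bit blocks.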

Lower color bit-depths can also support an alpha channel. A 16-bit color setting allocates 5 bits each to red and blue and 6 bits to green (65,536 colors). A 15-bit color setting still uses 16 bits per pixel; however, the allocation is 5 bits each for red, green, and blue, and 1 bit for an alpha (overlay) channel. This cuts the number of displayable colors in half (32,768).
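The bit allocations described above can be shown by packing component values into 16- and 32-bit words. The bit positions in this Python sketch (red in the high bits, the 1-bit overlay flag in the top bit of the 15-bit format, alpha in the top byte of the 32-bit format) are common conventions but are assumptions here; actual layouts vary by video board.

```python
def pack_rgb565(r, g, b):
    """16-bit color: 5 bits red, 6 bits green, 5 bits blue."""
    return ((r & 0x1F) << 11) | ((g & 0x3F) << 5) | (b & 0x1F)

def pack_argb1555(a, r, g, b):
    """15-bit color in a 16-bit word: 1 alpha/overlay bit, then 5 bits each for R, G, B."""
    return ((a & 0x1) << 15) | ((r & 0x1F) << 10) | ((g & 0x1F) << 5) | (b & 0x1F)

def pack_argb8888(a, r, g, b):
    """32-bit color: 8-bit alpha plus 24-bit RGB (8 bits per channel)."""
    return ((a & 0xFF) << 24) | ((r & 0xFF) << 16) | ((g & 0xFF) << 8) | (b & 0xFF)

print(2 ** (5 + 6 + 5))  # 65536 colors for 16-bit
print(2 ** (5 + 5 + 5))  # 32768 colors for 15-bit
print(hex(pack_argb8888(255, 255, 128, 0)))  # 0xffff8000
```

In the 32-bit case the color itself still occupies only 24 bits; the top byte carries alpha, which is why 32-bit display adds no color precision.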

Calendar of Events

The Library and Information Commission Call for Proposals

Submission Deadline: October 29, 1999

The Library and Information Commission of the United Kingdom is offering funding for proposals on research into digital libraries. Proposals should address a research question, such as user needs and behavior or studies of how people use information in the digital environment.

LITA National Forum

November 5-7, 1999

The Library and Information Technology Association (LITA) is sponsoring a preconference on Metadata for Digital Libraries and a subsequent forum, to be held in Raleigh, North Carolina, that will address topics including digital preservation and copyright law.

The Second Asian Digital Libraries Conference

November 8-9, 1999

To be held at National Taiwan University in Taipei, Taiwan, the Asian Digital Libraries Conference will bring together researchers and developers from academia, industry, and government.

Going Digital Seminar: Issues for Managers or Why Digitise?

November 16, 1999

To be held at the Exeter Central Library, Exeter, United Kingdom, this seminar is for library managers who are contemplating digitising their collections. There will be sessions on planning, implementation, and maintenance.

Announcements

Digitisation: How Much Does it Really Cost? Discussion Paper Now Available

Presented at the Digital Resources for the Humanities Conference in September 1999 by Simon Tanner and Joanne Lomax Smith, this paper looks at the factors which influence the cost of undertaking a digitisation project.

The Economics of Digital Access: The Early Canadiana Online Project Report

This report examines the economics of digital, microfiche, and print access for the Early Canadiana Online Project. It includes the costs associated with archiving, access, and copying.

Scoping the Future of the University of Oxford's Digital Library Collections

Funded by the Andrew W. Mellon Foundation, this study of digital library collections at the University of Oxford focuses on the current strategy for digitization, analyses costs, and proposes the establishment of the Oxford Digital Library Services.

Announcing LOOKSEE: Resources for Image-Based Humanities Computing

LOOKSEE is intended as a community focal point for discussion and development of next-generation image-based humanities computing projects. At present, LOOKSEE consists of Web materials and a listserv discussion forum. The LOOKSEE Web site will expand to include source code, demos, and documentation.

Documenting the American South

The University of North Carolina at Chapel Hill has released a Web site entitled Documenting the American South, a digitized and encoded full-text collection of works on Southern history and culture. Available are projects such as North American Slave Narratives and The Southern Homefront, 1861-65.

Institute of Museum and Library Services Announces 1999 Grant Recipients

The Institute of Museum and Library Services has announced funding for a number of projects that will focus on digitization. They include: the University of Virginia Health Sciences Library's project to digitize manuscript and photographic materials; the University of Georgia's project to digitize documents from collections on the local Native American population; and the Amistad Research Center in New Orleans, La., which plans to digitize documents about the Amistad incident.

Preservation Management of Digital Materials Study

The Arts and Humanities Data Service has been funded to study the Preservation Management of Digital Materials. The study is based on the premise that current and proposed digitisation programs would benefit substantially from defined best practice and guidelines for digital preservation, and that such guidelines would assist in developing a range of preservation services. For further information contact: Maggie Jones, info@ahds.ac.uk.

Hotlinks Included in This Issue

Feature Articles

The Cost of Digital Image Distribution: The Social and Economic Implications of the Production, Distribution and Usage of Image Data: http://sunsite.berkeley.edu/Imaging/Databases/1998mellon/

Highlighted Web Sites

Kodak Digital Learning Center: Color Theory: http://www.kodak.com/US/en/digital/dlc/book3/chapter2/index.shtml

Calendar of Events

The Library and Information Commission Call for Proposals: http://www.lic.gov.uk/research/digital/digicall.html

LITA National Forum: http://www.lita.org/forum99/index.htm

Going Digital Seminar: Issues for Managers or Why Digitise?: http://ahds.ac.uk/users/laswbprog.html

The Second Asian Digital Libraries Conference: http://www.lis.ntu.edu.tw/adl99/

Announcements

Announcing LOOKSEE: Resources for Image-Based Humanities Computing: http://www.rch.uky.edu/~mgk/looksee/

Digitisation: How Much Does it Really Cost? Discussion Paper Now Available: http://heds.herts.ac.uk/HEDCinfo/Papers.html

Documenting the American South: http://metalab.unc.edu/docsouth/

The Economics of Digital Access: The Early Canadiana Online Project Draft Report: http://www.albany.edu/~bk797/research.htm

Institute of Museum and Library Services Announces 1999 Grant Recipients: http://www.imls.gov/

Scoping the Future of the University of Oxford's Digital Library Collections: http://www.bodley.ox.ac.uk/scoping/


Publishing Information

RLG DigiNews (ISSN 1093-5371) is a newsletter conceived by the members of the Research Libraries Group's PRESERV community. Funded in part by the Council on Library and Information Resources (CLIR), it is available internationally via the RLG PRESERV Web site (http://www.rlg.org/preserv/). It will be published six times in 1999. Materials contained in RLG DigiNews are subject to copyright and other proprietary rights. Permission is hereby given for the material in RLG DigiNews to be used for research purposes or private study. RLG asks that you observe the following conditions: Please cite the individual author and RLG DigiNews (please cite URL of the article) when using the material; please contact Jennifer Hartzell at bl.jlh@rlg.org, RLG Corporate Communications, when citing RLG DigiNews.

Any use other than for research or private study of these materials requires prior written authorization from RLG, Inc. and/or the author of the article.

RLG DigiNews is produced for the Research Libraries Group, Inc. (RLG) by the staff of the Department of Preservation and Conservation, Cornell University Library. Co-Editors, Anne R. Kenney and Oya Y. Rieger; Production Editor, Barbara Berger; Associate Editor, Robin Dale (RLG); Technical Support, Allen Quirk.

All links in this issue were confirmed accurate as of October 12, 1999.

Please send your comments and questions to preservation@cornell.edu.
