Feature Article

Digitisation of Newspaper Clippings: The LAURIN Project

Günter Mühlberger, University of Innsbruck, Austria

guenter.muehlberger@uibk.ac.at

Introduction

To continue the tradition of collecting and providing access to newspaper clippings in the new networked environment, the European Commission embarked upon the LAURIN project in 1998. Seventeen partners from seven European countries are co-operating in developing a model that addresses several issues, including shared indexing, shared and local access, new business models, and copyright. One of the main outputs of the project is image processing software that replaces scissors, glue, and print files for maintaining newspaper clipping collections. Other publicly available products include a multilingual thesaurus and a prototype of a network of clipping archives. A first release of the network will be available in spring 2000.

Image Processing of Newspaper Articles

Within the scope of the LAURIN project, several specialised image processing applications and tools have been developed for the digital reorganisation of the clipping activity. This article highlights the image processing software libClip, which is based on standard software available on the market. Software developers and librarians have collaborated in designing the tool and customising it for local use. This cooperation has been very beneficial for both sides. Librarians have been able to express their requirements concerning the functionality, handling, design, and workflow of the module, while the developers have benefited from the librarians' expertise in capturing metadata and in designing the module. libClip was released as a beta version in October 1999 and will be available as a product in early 2000. Its technical specifications, user guidelines, and a full trial version can be downloaded from the LAURIN homepage.

News Clippings - Workflow

The electronic reorganisation of a clipping archive must provide the same functions as an analogue archive. Articles have to be selected, clipped, sometimes rearranged, filed, and distributed to users as daily or weekly press reviews. The work of clippers and archivists using the LAURIN workflow will eventually take the following form:

Selection: Professional readers decide whether to select an article or not. They go through large numbers of newspapers and mark relevant articles. In many cases, the reader will do the final subject indexing of the articles as well.

Scanning: Selected articles are scanned. The essential data, such as the name of the newspaper, the edition, the page number, and the publication date, have to be recorded.

Clipping and Bibliographic Indexing: Articles are electronically clipped from the source page and are reconstructed on a standard page. Additional bibliographic information (e.g., title, subtitle, and author) is recorded at this point.

OCR Processing, OCR Correction, and Database Creation: These procedures are mainly done in the background as a batch job.

Final Indexing: The final indexing requires high-level skills and includes content or subject indexing based on a controlled vocabulary.
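The workflow above can be read as a sequence of stages, each of which may only be entered once the data recorded in the previous step exists. The sketch below models that idea; the stage names and metadata field names are hypothetical and do not describe libClip's internal data model.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Stage(Enum):
    SELECTED = auto()
    SCANNED = auto()
    CLIPPED = auto()
    OCR_DONE = auto()
    INDEXED = auto()


ORDER = list(Stage)  # workflow order, as in the list above

# Metadata that must exist before a clipping may ENTER a stage.
# Field names are hypothetical; the data items come from the workflow text.
REQUIRED_TO_ENTER = {
    Stage.CLIPPED: ("newspaper", "edition", "page", "date"),  # recorded at scanning
    Stage.OCR_DONE: ("title",),                                # bibliographic indexing
    Stage.INDEXED: ("subjects",),                              # final subject indexing
}


@dataclass
class Clipping:
    stage: Stage = Stage.SELECTED
    meta: dict = field(default_factory=dict)

    def advance(self) -> Stage:
        """Move to the next stage, refusing if required metadata is missing."""
        idx = ORDER.index(self.stage)
        if idx + 1 == len(ORDER):
            raise ValueError("clipping is already fully indexed")
        nxt = ORDER[idx + 1]
        missing = [k for k in REQUIRED_TO_ENTER.get(nxt, ()) if not self.meta.get(k)]
        if missing:
            raise ValueError(f"cannot enter {nxt.name}: missing {missing}")
        self.stage = nxt
        return nxt
```

Checking requirements on entry rather than on exit keeps each rule next to the stage whose data it protects.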

Scanning

libClip supports a wide range of scanners and interfaces (SCSI, TWAIN, KOFAX) and can process bitonal and greyscale images in the 300-600 dpi range. Recently published newspapers are collected and catalogued in several libraries. They are usually microfilmed and are therefore easily accessible. If certain articles are needed for special kinds of reuse (e.g., facsimile printing in a book), they can easily be identified and reproduced from the original. For recent newspapers (even those with limited colour), the recommended scanning setting is 300-400 dpi at 1 bit (bitonal); for older newspapers it is 300-400 dpi at 8 bits (greyscale). These settings ensure high-quality images that support printing, display, and OCR processing.
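To make the trade-off between these settings concrete, the following sketch computes raw image sizes. The 11 x 17 inch page is a hypothetical example, not a LAURIN figure, and the calculation ignores compression and per-row padding.

```python
def uncompressed_bytes(width_in: float, height_in: float, dpi: int, bits_per_pixel: int) -> int:
    """Raw size in bytes of a scanned page, before any compression.

    Row padding (e.g. rounding 1-bit rows up to whole bytes) is ignored."""
    pixels = round(width_in * dpi) * round(height_in * dpi)
    return pixels * bits_per_pixel // 8


# Hypothetical 11 x 17 inch page at the recommended settings, plus 24-bit colour.
for dpi, bits in [(300, 1), (400, 1), (300, 8), (400, 8), (300, 24)]:
    print(dpi, "dpi,", bits, "bit:", uncompressed_bytes(11, 17, dpi, bits), "bytes")
```

For that page, 300 dpi bitonal comes to about 2.1 MB, 400 dpi greyscale to about 29.9 MB, and 300 dpi 24-bit colour to about 50.5 MB, which illustrates why colour pages place much heavier demands on processing systems.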

Clipping and Indexing

The most interesting feature of libClip is its support for clipping, rearranging, and semi-automatic indexing of articles. After the newspaper page has been scanned, an automatic layout analysis recognises the standard elements of an article, such as headlines, subtitles, leads, columns, pictures, captions, and author names. The software also analyses the order of these elements. After the librarian has selected an article by clicking on one of these elements, libClip displays the result of its internal layout analysis, using different colours for different elements, as shown in Figure 1. Articles with unusual layouts may be analysed incorrectly; however, such errors can be corrected manually.
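The article does not say how libClip determines the order of layout elements. As an illustration only, the sketch below applies a naive heuristic: title material first, then body blocks column by column and top to bottom. The element kinds come from the text above; the coordinates, column width, and ordering rule are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    kind: str  # e.g. "headline", "subtitle", "lead", "column", "picture", "caption", "author"
    x: int     # left edge in pixels
    y: int     # top edge in pixels


# Hypothetical priority for title material, which is read before the body.
FRONT_MATTER = {"headline": 0, "subtitle": 1, "lead": 2}


def reading_order(blocks, column_width):
    """Naive reading order: headline, subtitle, lead, then the remaining
    blocks column by column (left to right), top to bottom within a column."""
    front = sorted((b for b in blocks if b.kind in FRONT_MATTER),
                   key=lambda b: FRONT_MATTER[b.kind])
    body = sorted((b for b in blocks if b.kind not in FRONT_MATTER),
                  key=lambda b: (b.x // column_width, b.y))
    return front + body
```

Articles with unusual layouts (e.g. a headline set below a picture) break simple rules like this one, which is why manual correction of the layout analysis remains necessary.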


After a librarian has confirmed the layout analysis, the OCR processing of the bibliographic parts of the article and the rearranging of the clipping on a standard page are carried out simultaneously. libClip uses Xerox OCR (TextBridge) as its default OCR engine; however, other engines that better fit the specific needs of particular newspaper archives can be used as well. The OCR results are usually satisfactory, at least for headlines and subtitles. If the system suspects an error, a dialog window is presented so that the text can be corrected immediately. In the second step, the article is reassembled on a standard page. In addition to pasting the article in its original layout, libClip offers several modes for rearranging it. Figure 2 shows a typical target page.
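How libClip decides that it "suspects an error" is not described. A common approach is to flag words whose recognition confidence falls below a threshold; the sketch below assumes the OCR engine returns (word, confidence) pairs, which is a hypothetical input format and not the TextBridge API. The 0.8 threshold is likewise illustrative.

```python
def suspicious_words(ocr_words, threshold=0.8):
    """Return indices of words whose confidence (0..1) is below threshold.

    ocr_words: list of (text, confidence) pairs (hypothetical format)."""
    return [i for i, (_, conf) in enumerate(ocr_words) if conf < threshold]


def apply_corrections(ocr_words, corrections):
    """Rebuild the text, replacing flagged words with operator input {index: text}."""
    return " ".join(corrections.get(i, word) for i, (word, _) in enumerate(ocr_words))


headline = [("Election", 0.97), ("resu1ts", 0.55), ("announced", 0.93)]
flagged = suspicious_words(headline)          # shown to the operator in a dialog
print(apply_corrections(headline, {1: "results"}))
```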


To save time, several jobs within the workflow are batch-oriented. For example, the OCR processing and the final data export are completely automated. The full-text output of OCR processing is not delivered to users, since its main purpose is to enable full-text searching. The text conversion therefore aims not at high OCR accuracy but at producing a file that is adequate for full-text searching.
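Why imperfect OCR can still be "satisfactory for full-text searching" is easiest to see with an inverted index: an article is found as long as the query terms were recognised somewhere in its text, even if other words contain errors. The sketch below is a generic boolean-AND index, not the LAURIN search engine; the article ids and texts are made up.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-cased word tokens (Unicode-aware, so umlauts are kept)."""
    return re.findall(r"\w+", text.lower())


def build_index(docs):
    """Map each token to the set of article ids whose OCR text contains it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for tok in tokenize(text):
            index[tok].add(doc_id)
    return index


def search(index, query):
    """Return ids of articles containing every query term (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {"a1": "Parliament debates budget",
        "a2": "Budget cuts hit schoo1s; parliament silent",  # OCR error in "schools"
        "a3": "Sports results"}
idx = build_index(docs)
print(search(idx, "budget parliament"))  # a2 is still found despite its OCR error
```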

Further Processing of the Metadata

For each article, three outputs are produced: the image(s) of the clipping, the full text of the article, and the associated metadata. The image and full-text files are stored in a file system; the metadata are maintained in an Oracle database management system (DBMS). While scanning, clipping, and bibliographic indexing are relatively simple and automated, the final subject indexing of the article according to an archive's internal guidelines has to be done by specialists. This is why the last step of the acquisition workflow is carried out with a separate software tool.
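The split between file system and DBMS can be sketched as follows. Python's built-in `sqlite3` stands in for Oracle here, and the table layout, directory scheme, and `.tif`/`.txt` extensions are assumptions made for illustration.

```python
import sqlite3
from pathlib import PurePosixPath

SCHEMA = """
CREATE TABLE IF NOT EXISTS article (
    id         TEXT PRIMARY KEY,
    title      TEXT,
    newspaper  TEXT,
    pub_date   TEXT,
    image_path TEXT,   -- clipping image lives in the file system
    text_path  TEXT    -- OCR full text lives in the file system
)"""


def store_article(conn, article_id, title, newspaper, pub_date, root="/archive"):
    """Insert one article's metadata and return the (image, text) file paths
    it points to. Writing the files themselves is omitted in this sketch."""
    image_path = str(PurePosixPath(root, pub_date, f"{article_id}.tif"))
    text_path = str(PurePosixPath(root, pub_date, f"{article_id}.txt"))
    conn.execute(
        "INSERT INTO article (id, title, newspaper, pub_date, image_path, text_path)"
        " VALUES (?, ?, ?, ?, ?, ?)",
        (article_id, title, newspaper, pub_date, image_path, text_path),
    )
    return image_path, text_path


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
print(store_article(conn, "a1", "Budget passed", "Example Daily", "1999-10-01"))
```

Keeping large binary files outside the database and storing only their paths keeps the DBMS small and leaves the subject-indexing tool free to query metadata alone.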

Advanced Indexing

Advanced indexing is indispensable for the future of clipping archives, and it will be one of the distinctive differences between full-text newspaper databases and clipping archives. Barbara P. Semonche writes: "Some additional intellectual effort and news article analysis are necessary to link appropriately all the myriad, fleeting and unstated associations among a never-ending stream of stories about people, events and institutions." (1) The intellectual input must be as high as possible, but at the same time the activity must be as efficient and automated as possible. A main prerequisite of advanced indexing is a controlled vocabulary that meets the special requirements of the collection. In the case of LAURIN, we were faced with the fact that the initiative involves archives from all over Europe, whose material is printed in six different languages. The proposed solution is a complex system in which the main functions, such as the maintenance of the vocabulary including its default structure, thesaurus relations, and thesaurus categories, are handled centrally. Other functions, such as the maintenance of a free-text vocabulary, are carried out locally by the LAURIN participants. (2)
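The division between a centrally maintained multilingual thesaurus and locally maintained free-text terms might be modelled as below. This is a minimal sketch with hypothetical class and method names; the actual LAURIN thesaurus structure is documented separately (see note 2).

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    labels: dict                                  # language code -> preferred term
    broader: set = field(default_factory=set)     # ids of broader concepts


class Thesaurus:
    """Central multilingual vocabulary plus a local free-text term list."""

    def __init__(self):
        self.concepts = {}        # maintained centrally, shared by all archives
        self.local_terms = set()  # maintained by each participating archive

    def add(self, cid, labels, broader=()):
        self.concepts[cid] = Concept(dict(labels), set(broader))

    def label(self, cid, lang, fallback="en"):
        """Preferred term in the requested language, else the fallback language."""
        labels = self.concepts[cid].labels
        return labels.get(lang, labels.get(fallback))

    def ancestors(self, cid):
        """All broader concepts, transitively: the hierarchy shown to an indexer."""
        seen, stack = set(), list(self.concepts[cid].broader)
        while stack:
            c = stack.pop()
            if c not in seen:
                seen.add(c)
                stack.extend(self.concepts[c].broader)
        return seen
```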

The project team identified three more features that are used by clipping archives:

The indexing interface reflects these considerations and offers all the functions described above. The indexer receives the image of the article, the full text, and the already processed metadata, and then decides whether to apply the optional functions of the advanced indexing process, such as normalising author names or writing an abstract of the article, or to concentrate directly on the mandatory functions: the text-type classification (3) is done with a shortcut, and thesaurus entries are accessed either by searching or by browsing. The hierarchical structure and additional information are shown to the indexer, who confirms the selected subject headings for the article.
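The six main text types listed in note 3 lend themselves to a fixed enumeration with one-key shortcuts. The sketch below checks that the two mandatory functions (text type and subject headings) have been completed; the shortcut keys and record field names are hypothetical, and the 30-odd subtypes are not modelled.

```python
from enum import Enum


class TextType(Enum):
    NEWS = "news"
    OPINION = "opinion"
    REVIEW = "review"
    VERBATIM = "verbatim"
    BIOGRAPHIC = "biographic"
    COMMERCIAL = "commercial"


# Hypothetical one-key shortcuts: the first letters happen to be unique.
SHORTCUTS = {t.value[0]: t for t in TextType}


def check_mandatory(record):
    """Return the mandatory fields still missing from an indexing record.

    Author normalisation and abstracts are optional and not checked."""
    missing = []
    if not isinstance(record.get("text_type"), TextType):
        missing.append("text_type")
    if not record.get("subjects"):
        missing.append("subjects")
    return missing
```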

Future Efforts

Colour Processing

One of the next steps will be to update libClip to process colour pages, which entail their own set of problems. These images are usually quite large, so processing them requires high-performance systems that can handle complex layout analysis. At present, the OCR results from colour documents are not yet satisfactory.

Retro-Digitisation of Clipping Collections

Internet-based newspaper clipping archives are valued by a large group of users, including historians, journalists, and ordinary citizens. The image of the article guarantees a level of authenticity that plain full text does not reach. Many users value the image as an added benefit and will therefore not want to do without it. Apart from their cultural value, newspaper clippings are good candidates for retro-digitisation for the following reasons:

In principle, libClip is already able to process old clippings, but the predefined workflow focuses on the daily acquisition of current newspapers. To cope with the challenge of digitising millions of backfile clippings, separate workflow models have to be developed. Furthermore, the different methods used in the past for filing clippings, such as keeping the original cutting in an envelope, gluing the cuttings into books, or microfilming the articles, have to be taken into account. The retro-digitisation of newspaper clippings will be a major work area and a follow-up to LAURIN, which will be proposed to the Commission of the European Community during 2000.

Other Application Areas

The most promising feature of libClip is the connection between automatic layout analysis and the semi-automatic creation of metadata, as can be seen in Figure 3.


There are other library and archival applications where this feature might be of further interest. As an example, consider the structural layout of an old book. The title page or contents page of a 19th-century book is structured in a common way, with typical elements such as title, subtitle, author, publisher, and publication date. The chances of recognising them correctly in an automatic process are therefore quite high. Other structural elements, such as section headings, footnotes, graphs, prose vs. poetic text passages, direct speech, letters, and citations, can also be considered. Once these elements are recognised, the information can be used to mark up the raw OCR text automatically according to XML or TEI specifications. The same idea can be applied to the complete scanning of old newspapers: features such as browsing the list of articles in a given issue would become possible without any additional effort.
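Turning recognised title-page elements into markup could look like the sketch below. The input labels are a hypothetical layout-analysis output; the element names follow TEI's title-page vocabulary (`titlePage`, `titlePart`, `docAuthor`, `docImprint`, `docDate`), but the result is a simplified illustration that has not been validated against the TEI schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical layout-analysis labels mapped to TEI title-page elements.
TEI_TAGS = {"title": "titlePart", "subtitle": "titlePart", "author": "docAuthor",
            "publisher": "docImprint", "date": "docDate"}


def title_page_to_tei(elements):
    """elements: list of (label, text) in reading order -> TEI-style <titlePage> string."""
    page = ET.Element("titlePage")
    for label, text in elements:
        tag = TEI_TAGS.get(label)
        if tag is None:
            continue  # unrecognised region: left for manual mark-up
        el = ET.SubElement(page, tag)
        if label in ("title", "subtitle"):
            el.set("type", "main" if label == "title" else "sub")
        el.text = text
    return ET.tostring(page, encoding="unicode")


print(title_page_to_tei([("title", "A History of the Parish"),
                         ("subtitle", "With Maps and Plates"),
                         ("author", "J. Smith"), ("date", "1872")]))
```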

(1) Semonche, Barbara P. "Newspaper Indexing Policies and Procedures." In News Media Libraries: A Management Handbook, ed. Barbara P. Semonche. Westport, CT: Greenwood Press, 1993.

(2) A detailed description of the LAURIN thesaurus structure will be available on the LAURIN homepage in early 2000.

(3) The classification system is built on the basis of the guidelines of IPTC (International Press Telecommunications Council; http://www.iptc.org/iptc/) and of HWWA (Institut für Wirtschaftsforschung - Hamburg). The main types are news, opinion, review, verbatim, biographic and commercial (advertising) text. Currently there are more than 30 subtypes.

Technical Feature

The Digital Atheneum - Restoring Damaged Manuscripts

W. Brent Seales, Department of Computer Science, University of Kentucky

seales@dcs.uky.edu

James Griffioen, Department of Computer Science, University of Kentucky

griff@dcs.uky.edu

and

Kevin Kiernan, English Department, University of Kentucky

kiernan@pop.uky.edu

Introduction

Historical disasters such as fires, floods, mildew, bookworms, bookbinders, zealots, reformers, Vikings, censors, acidic paper, and modern restorations have deprived us of many cultural treasures. The residue of these disasters is nonetheless carefully preserved by repositories throughout the world, even when it is seemingly unusable because of its fragile or illegible state. The Digital Atheneum Project (1) is developing new methods to restore and make accessible previously lost writings. Our work focuses on recovering manuscripts from the famous Cottonian Library collection in the British Library.

Many of the Cotton Library manuscripts were badly damaged by a 1731 fire, and its more disastrous aftereffects, including the water used to put it out. The manuscripts further deteriorated over the next one hundred years as a result of neglect, misuse, and various methods of conservation. In the mid-nineteenth century, a comprehensive effort to restore the manuscripts by inlaying each damaged vellum leaf in separate paper frames, and then rebinding these frames in book-form, led to other forms of damage and illegibility. For example, some of the frames were made of acidic paper, which is now discoloring and obscuring the text it was meant to preserve; and all of the paper frames cover parts of text along the damaged edges.

Even with the best digitization techniques, the remaining text of some severely burned manuscripts is difficult or impossible to decipher. Often only parts of letters survive, distorted beyond recognition. Creating a digital library of such images that users can search for specific letters or phrases is a daunting task. There are no exact matches even with clear handwriting, but with damaged manuscripts a search engine must be devised to accommodate partial and distorted forms, jagged edges, and charring that might be mistaken for penstrokes.

The focus of this project is on extracting previously hidden text from such badly damaged manuscripts. The computer science research involves the development of novel approaches to three technically challenging problems: (1) new digitization techniques to illuminate all possible information from the original manuscript; (2) restoration algorithms to attempt to fill in the most likely missing information; and (3) complex, data-specific, content search techniques to identify the imperfect representations found in severely damaged manuscripts.

Using Illumination to Enhance Digitization

The initial step in creating a digital library of damaged manuscripts is to obtain exceptionally high-quality digital images of the manuscripts. When analyzing and reconstructing damaged manuscripts, minute details are crucial. The images must be obtained using extremely high-resolution cameras. Many parts of the damaged documents are invisible without special lighting techniques. The manuscripts are, moreover, rarely completely flat or planar. Even small contours in a document may change the appearance of the vestiges of text when digitized with a two-dimensional (2-D) camera. To analyze or search the resulting document, it is important to start with images that convey extremely accurate data in a geometric layout as close to the original as possible.

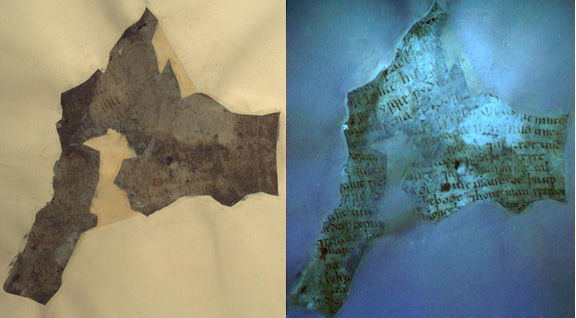

To illuminate information otherwise hidden to both camera and naked eye, we employ fiber-optic lighting techniques. The page is secured vertically with clamps, and the digital camera is set on a tripod facing it. Fiber-optic light (a cold, bright light source) behind the paper frame reveals the covered letters, and the camera digitizes them. A related method based on ultraviolet (UV) lighting is particularly useful for recovering erased or faded text. Conventional ultraviolet photography requires long exposure times and is prohibitively expensive, time-consuming, and destructive. However, we have found that a digital camera can quickly capture the effects caused by ultraviolet light at its normal scan rate, without the need for long exposures. Contrast enhancement techniques then produce images that often clearly reveal formerly invisible text. Many of the manuscripts obscured by fire damage, in particular, benefit from UV. Figure 1 illustrates the technique, showing a document as viewed by the human eye on the left and the same fragment enhanced by UV on the right.

Figure 1: A manuscript fragment as seen by the naked eye (left) and with hidden marking revealed by UV digitization (right).
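The article does not name the contrast enhancement it applies to UV captures. One widely used option is a percentile-based linear stretch, sketched below on an 8-bit greyscale image stored as a list of rows; the choice of technique and the 1st/99th percentile defaults are assumptions, not the project's method.

```python
def contrast_stretch(img, low_pct=1.0, high_pct=99.0):
    """Linearly map the [low_pct, high_pct] percentile range of an 8-bit
    greyscale image (list of rows) onto 0-255, clipping values outside it."""
    values = sorted(v for row in img for v in row)
    n = len(values)
    lo = values[int(low_pct / 100 * (n - 1))]
    hi = values[int(high_pct / 100 * (n - 1))]
    if hi == lo:
        return [row[:] for row in img]  # flat image: nothing to stretch
    scale = 255 / (hi - lo)
    return [[min(255, max(0, round((v - lo) * scale))) for v in row] for row in img]


# Faint UV response: ink only slightly darker than the surrounding vellum.
faint = [[100, 102], [104, 110]]
print(contrast_stretch(faint, 0, 100))
```

Clipping a small percentage at each end prevents a few very bright or very dark pixels (for example, charred spots) from dictating the stretch.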

To capture the contours present in the document, we are exploring a technique that uses a single camera in conjunction with a white light source. The positions of the camera and the light source are known and calibrated, allowing us to obtain depth information from the document. The details of the depth extraction mechanism are described in the section "Enhancements from 3-D Representations." The depth information allows us to determine how the surface has warped or wrinkled from extreme heat and what effects the deformation has had on the text.

Content-Based Searching

Because damaged data must be processed before it can be effectively searched, the selection of image processing techniques used to enhance, restore, or model the objects in the image is a critical step toward content-based searching. To allow editors to control the way in which processing is applied, we are developing a toolset that editors can use to create data-specific content-based retrieval systems. The toolset will allow editors to tailor the processing to bring out the desired features of the data. The toolset itself is designed using a new semantic object-oriented processing language and interface called MOODS. MOODS objects extend the abstraction of conventional objects to include the concept of data semantics, which record what is known about the data. The data semantics both guide the construction of processing sequences and are used by the content-based search engine.
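The idea of data semantics guiding the construction of processing sequences can be illustrated with objects that carry a set of known facts, and operations that declare which facts they need and which they add. This is a toy sketch with hypothetical names; it is not the MOODS language or its interface.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class SemanticData:
    """A payload plus its 'data semantics': facts known about it."""
    payload: Any
    semantics: frozenset = frozenset()


@dataclass
class Operation:
    """A processing step declaring which semantics it requires and produces."""
    name: str
    requires: frozenset
    produces: frozenset
    func: Callable[[Any], Any]

    def apply(self, data: SemanticData) -> SemanticData:
        missing = self.requires - data.semantics
        if missing:
            raise ValueError(f"{self.name} needs {sorted(missing)}")
        return SemanticData(self.func(data.payload), data.semantics | self.produces)


# Toy pipeline: ink counting is only meaningful on a bitonal image.
binarise = Operation("binarise", frozenset(), frozenset({"bitonal"}),
                     lambda rows: [[int(v < 128) for v in r] for r in rows])
count_ink = Operation("count_ink", frozenset({"bitonal"}), frozenset({"ink_counted"}),
                      lambda rows: sum(map(sum, rows)))
```

Because each result records what has been done to it, a search engine could later restrict queries to, say, images whose semantics include a particular enhancement.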

Although a wide range of processing techniques could be of use to editors, we are currently working on two techniques of special value to those working with severely damaged manuscripts. One technique is designed to aid in linking transcriptions to the original manuscript; the other is meant to aid in the analysis of damaged or warped letterforms. The two processing operations are related in that they require similar functionality. The basic idea behind both techniques is to identify the location of a letterform in the manuscript, which is often fragmentary or otherwise deformed, and then to classify it. The letterforms can typically be found by identifying the "lines" and/or the spacing between lines. Combining a transcription with the output from the line analysis allows us not only to identify letterforms more accurately but also to link the transcription automatically to the original document for future searching. In some cases the transcription may be incomplete or inaccurate because the letterforms were so badly damaged. We are also exploring methods to allow an editor to create a model of all possible letterforms, regardless of scale and rotation. Using the model and statistical pattern matching techniques, the system would attempt to classify otherwise hard-to-label letterforms, helping, with expert intervention, to fill in missing parts of the transcription or to correct errors and omissions in it.
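A standard way to find text lines and the gaps between them is a horizontal projection profile: count dark pixels per row and treat runs of inked rows as lines. The sketch below uses that textbook technique as a stand-in; the project's own line analysis is not described, and real fire-damaged leaves would also need deskewing, smoothing, and handling of charring that looks like ink.

```python
def find_text_lines(img, ink_threshold=128, min_ink=1):
    """Return (start_row, end_row) pairs for bands of rows containing ink.

    img: list of rows of 8-bit grey values (dark ink on light vellum).
    A row counts as text if at least `min_ink` pixels are darker than
    `ink_threshold`; consecutive text rows form one line."""
    profile = [sum(1 for v in row if v < ink_threshold) for row in img]
    lines, start = [], None
    for y, count in enumerate(profile):
        if count >= min_ink and start is None:
            start = y                      # entering a line
        elif count < min_ink and start is not None:
            lines.append((start, y - 1))   # leaving a line at an inter-line gap
            start = None
    if start is not None:                  # text runs to the bottom edge
        lines.append((start, len(profile) - 1))
    return lines
```

Raising `min_ink` makes the detector ignore isolated specks, at the cost of missing very faint lines.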

Reconstructing Damaged Content

A central part of this work is to digitally enhance and recover the content of damaged manuscripts in the collection. We have chosen to develop new algorithms for digital enhancements that include a measure of the surface shape of a manuscript. Since manuscript pages of vellum rarely lie flat and in extreme cases are severely distorted, we are developing new digitization methods to recover the shape of manuscript pages, while registering these shapes very precisely along with the more traditional digital color information. Obtaining the depth dimension creates several possibilities for enhancement algorithms that are difficult or impossible without it.

Obtaining Manuscript Shape

We are also developing a low-light, relatively fast and accurate

digitization system for obtaining the surface shape of manuscript

pages. Our system uses a technology called structured light,

and operates with standard digital cameras and a low-impact

controlled light source such as a VGA computer-controlled projector.

There are several advantages of such a system over a laser-based

depth recovery system. First, ordinary projectors are less bright and

therefore less destructive than even standard lights used for

illumination, and are considerably less invasive than most laser

systems. Second, the depth values recovered with such a projection

system are immediately and accurately registered with the color

imagery of the manuscript, which is not the case in laser-based

systems. Third, the system is designed to scale, incorporating

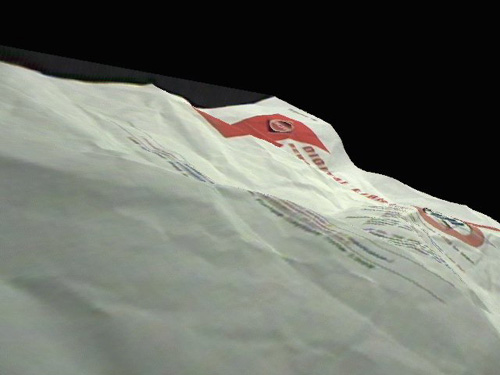

information from as many cameras as are desired or available. Figure

2 shows an example of the reconstruction of a wrinkled poster using

our depth recovery system.

Figure 2: Reconstruction showing registered depth measurements and color for a wrinkled poster.
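The article does not specify which light patterns the system projects. A common structured-light scheme projects binary Gray-code stripes: each camera pixel reads one bit per pattern, the bits decode to the projector column that lit it, and depth follows by triangulation. The sketch assumes Gray-code patterns and a rectified camera/projector pair with a shared focal length; all parameter values are hypothetical.

```python
def gray_to_binary(bits):
    """Decode Gray-code bits (most significant first) to an integer."""
    value, bit = 0, 0
    for g in bits:
        bit ^= g                      # binary bit = previous binary bit XOR Gray bit
        value = (value << 1) | bit
    return value


def depth_mm(x_cam, x_proj, focal_px, baseline_mm):
    """Triangulated depth for a rectified camera/projector pair: Z = f * b / d.

    x_cam, x_proj: column coordinates (pixels) relative to each device's
    principal point; focal_px: shared focal length in pixels."""
    disparity = x_cam - x_proj
    if disparity <= 0:
        raise ValueError("non-positive disparity; point cannot be triangulated")
    return focal_px * baseline_mm / disparity
```

In use, a pixel that reads bright/bright/dark across three patterns has code [1, 1, 0], which decodes to projector column 4; after subtracting the principal point from both coordinates, `depth_mm` gives its distance. Because depth is computed per camera pixel, it is automatically registered with that camera's color image, which matches the second advantage described above.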

Enhancements from 3-D Representations

We believe that the visualization of a manuscript, seal, or other artifact can be greatly enhanced by providing an accurate characterization of the 3-D shape of the item. Our efforts include algorithms that will allow 3-D representations to be manipulated and visualized, turned on or off, and developed over time from the recovered shape into other potential surface shapes. Figures 3a and 3b show a reconstruction of a cast tablet. Figure 4 illustrates the kind of wax seals we expect to digitize as part of the testing of the depth recovery system.

Figure 3: (a) This image of a cast of an aspirin demonstrates … (b) This image shows only the underlying …

Figure 4: This image from a high-resolution scan of a wax seal illustrates the kind of artifact (in addition to manuscripts) that will be enhanced with depth information recovered with our scanning system.
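One way a recovered depth map can enhance visualization, for example of a worn seal, is to re-light it from any direction. The sketch below uses generic Lambertian shading with normals from finite differences; it is an illustrative technique chosen for this example, not the project's rendering method, and it assumes unit grid spacing.

```python
import math


def shade(depth, light=(1.0, 1.0, 1.0)):
    """Lambertian shading of a depth map (list of rows, unit grid spacing).

    Normals come from central differences (one-sided at the borders);
    returns brightness values in [0, 1]."""
    lx, ly, lz = light
    norm = math.sqrt(lx * lx + ly * ly + lz * lz)
    lx, ly, lz = lx / norm, ly / norm, lz / norm
    h, w = len(depth), len(depth[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            # Surface normal of z = f(x, y) is (-dz/dx, -dz/dy, 1), then normalised.
            nx, ny, nz = -dzdx, -dzdy, 1.0
            n = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append(max(0.0, (nx * lx + ny * ly + nz * lz) / n))
        out.append(row)
    return out
```

Moving the virtual light to a grazing angle exaggerates shallow relief, much as raking light does for a physical seal.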

3-D Mosaicing

Depth information can help solve the problem of accurately

reuniting physically separate fragments. In particular, depth values

aid the process of bending different parts of a surface back to a

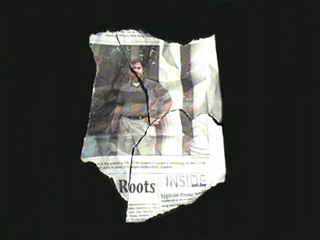

common plane. The problem is illustrated by Fig 5, which shows an

example with 3 fragments that cannot be digitally fit together

without depth enhancements. Using depth, we hope to create

transformations that seamlessly fit fragments together, providing a

new digital mechanism for enhancing manuscript pieces that have been

torn apart.

Figure 5: (a) This example shows physically disjointed … (b) This side view of the depth variation … (c) This attempt at mosaicing does not use the …
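Why fragments fail to fit without depth can be seen in one dimension: a curled strip's flat, camera-projected width is shorter than its true length along the surface. The sketch "bends back to a common plane" a single cross-section by cumulative arc length. It is a simplified illustration under that 1-D assumption; the project's 2-D mosaicing transformations are not described in the article.

```python
import math


def unroll_profile(xs, zs):
    """Flatten a 1-D surface cross-section given as sampled points (x, z).

    Each point is mapped to its cumulative arc length along the surface,
    so a curled strip is laid flat without changing its true length."""
    flat = [0.0]
    for i in range(1, len(xs)):
        flat.append(flat[-1] + math.hypot(xs[i] - xs[i - 1], zs[i] - zs[i - 1]))
    return flat


# A strip buckled into a ridge: the camera sees a width of 6, the vellum is 10 long.
print(unroll_profile([0, 3, 6], [0, 4, 0]))
```

Two fragments whose edges are compared only in projected coordinates would leave a gap of this size; comparing them in unrolled coordinates removes it.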

Conclusion

The goal of our research in computer science is to preserve and restore badly damaged manuscripts using custom lighting and new digital restoration techniques. By putting advanced and focused computer tools for restoration and manipulation into the hands of expert editors, we hope to facilitate access to high-quality digitally restored manuscripts and to the lost treasures they still preserve.

1. The Digital Atheneum - Restoring Damaged Manuscripts, effort sponsored in part by the National Science Foundation under agreement number IIS-9817483. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of NSF or the U.S. Government.

Conference Report

Digital Preservation: A Report From the Roundtable Held in Munich, 10-11 October 1999

Neil Beagrie, Arts and Humanities Data Service,

neil.beagrie@ahds.ac.uk

and

Nancy Elkington, Research Libraries Group,

nancy.elkington@notes.rlg.org

Introduction

The long-term preservation of digital materials is an issue of increasing concern to libraries and archives around the world. Recognising that there is much to be gained from sharing existing knowledge and experience internationally, the Bayerische Staatsbibliothek (Bavarian State Library - BSB) and Die Deutsche Bibliothek (the German National Library - DDB) recently drew representatives from German archives and libraries together with selected speakers from other countries. Hosted by Dr. Hermann Leskien, Director of the BSB, the meeting provided both a summary of current research and programmes for digital preservation and an overview of the situation within Germany itself.

The Situation within Germany

There is strong decentralisation within Germany, with individual states and municipal authorities exercising considerable autonomy. The DDB is legally responsible for preserving all print material published in Germany, and the current national deposit law extends the DDB's remit to stand-alone digital publications but not to those published online. Representatives from the DDB and five publishers are currently drafting new German legal deposit legislation to include all electronic materials. They are discussing issues such as licensing and access and hope to obtain the support of other German publishers and libraries. Because each state within Germany has its own legal deposit law and library, there is no consensus on who has ultimate responsibility for preservation, or on whether holdings need to be duplicated or coordinated between the state and national libraries.

A similar situation exists in the archive sector with the important exception that the Federal Archives Law already includes digital records. Although there is no German National Sound Archive, radio and television broadcasters have formed the Deutsches Rundfunk Archiv (DRA) to safeguard audio-visual materials that increasingly are produced in digital form.

The Deutsche Forschungsgemeinschaft (DFG), the national research funding agency, has assumed an important role in preserving non-German publications by funding a number of national specialist libraries and developing virtual subject libraries for electronic publications and online gateways in areas such as history and psychology. The DFG is also funding two national digitisation centres and a number of research projects into digital preservation, including the DDB's participation in NEDLIB (Networked European Deposit Library) and the efforts at the BSB. Funding to support other international projects is under development.

Current Technical Approaches

Presentations covered three digital preservation strategies: emulation, migration, and digital salvage. Jeff Rothenberg of Rand Corporation outlined the arguments he has advanced for a greater role for emulation in the long-term preservation of digital publications. He concluded with a call for greater research into encapsulation techniques, including specifications for building future emulators, and for feasibility tests of emulation for long-term preservation. In a later session, Hans Jensen from the Koninklijke Bibliotheek (National Library of the Netherlands - KB) outlined the emulation test-bed project the KB has commissioned as part of NEDLIB, details of which will shortly appear on the NEDLIB Website.

Werner Bauer compared two major system migrations at the Leibniz Research Centre. The first involved transferring one terabyte of data from highly proprietary software and took six months to accomplish. The second major migration, which occurred two years ago, proceeded more smoothly, with 10 terabytes converted in just four weeks.

The discussion of these presentations was particularly valuable as it drew on practical experience. One participant noted the potential limitations of emulation as a preservation technique for items that need to be interoperable and cross-searched. Overall, a consensus seemed to emerge that both emulation and migration have strengths and weaknesses, and both should have a role in long-term preservation.

Michael Wettengel of the Bundesarchiv (German National Archive) offered the final presentation, on rescuing obsolete data. After reunification, it was discovered that the digital records of many East German institutions were stored in obsolete formats with little or no documentation. The recovery efforts emphatically underscored the critical importance of documentation and showed that short-circuiting this task has significant detrimental effects on long-term preservation.

International Initiatives

Other presenters covered research and activity in North America (Nancy Elkington), the UK (Neil Beagrie), and the Netherlands and Europe (Hans Jensen).

Hans Jensen related the development of the electronic depository system at the KB. The library currently contains about 300,000 electronic articles from 700 titles, occupying some 200 GB of storage. There is no legal deposit law for electronic titles in the Netherlands, but the KB has good coverage through voluntary agreements with publishers. There are agreements with the Dutch government for its publications and with two international publishers, Elsevier and Kluwer (both based in the Netherlands). The library has issued a request for proposals for the new electronic repository system, with a decision expected in March 2000. Initial costs are projected to be in the order of three to four million Euros.

The KB is one of eight national libraries involved in the NEDLIB project. This group is defining a high-level architecture for deposit libraries' digital systems. NEDLIB is one of the growing number of digital library projects seeking to implement the draft ISO Open Archival Information System (OAIS) standard that emerged from the space and earth observation communities. Others include CEDARS in the UK and PANDORA in Australia.

The authors covered more familiar ground for RLG DigiNews readers, reporting on initiatives such as the Arts and Humanities Data Service (AHDS) and CEDARS in the UK, the past RLG and CPA Task Force on the Archiving of Digital Information, and the more recent report and survey of digital preservation needs in RLG member institutions. They also reported significant new initiatives on both sides of the Atlantic. These included proposals to establish a UK Digital Preservation Coalition to coordinate collaboration and digital preservation activity between sectors in the UK and with international partners; collaboration between RLG and the US Digital Library Federation as well as key international partners (CEDARS, AHDS, BSB, and the National Library of Australia, among others); and new projects to explore emulation (CEDARS 2 - CEDARS with the University of Michigan in an NSF/JISC initiative) and to develop tools in support of managerial aspects of digital preservation (AHDS).

Conclusion

As the meeting drew to a close, a key issue for all participants was what should come next. The German participants agreed to form a small working group on digital preservation, with representation from the national library, the university and state libraries, the DFG, and computing professionals. Dr. Leskien acknowledged that, as a member of RLG, he would look to that relationship to help foster greater international collaboration between the German group and partners elsewhere. Other common desires were to begin transforming projects into long-term programmes, to concentrate on developing effective preservation techniques, and, whatever the commercial interests, not to lose sight of providing long-term access as well as long-term preservation. In future years it seems likely that the scale of German activity in digital preservation and international collaboration will increase substantially.

Highlighted Web Site

Open eBook Initiative

FAQs

Now that large (15-18") color flat-panel displays are becoming more affordable, should I be considering buying one for my digital imaging project? Also, are there any other promising new developments in display technology that I should be tracking?

This issue's FAQ is answered by Rich Entlich, Digital Projects Researcher at Cornell University Library, Department of Preservation and Conservation.

Improvements in the affordability and performance of computer monitors have lagged far behind those of other digital imaging components. Vacuum tubes disappeared from nearly all other electronic devices long ago, yet CRT (cathode ray tube) based monitors still account for over 90% of all computer displays in use today. The LCD (liquid crystal display), the most serious challenger to the CRT, has been under development for nearly 30 years. Yet, despite improvements in manufacturing that have brought down the cost of LCDs and allowed them to be produced in larger sizes, a 15" color LCD monitor will still set you back 3-4 times as much as a CRT display with comparable viewing area and higher resolution.
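To make "comparable viewing area" concrete, the short Python sketch below converts a screen's viewable diagonal into a surface area. It is an illustration under stated assumptions, not a vendor formula: it assumes a rectangular 4:3 screen (the usual desktop shape of the period) and treats the quoted diagonal as the viewable size. CRT sizes are often quoted for the whole tube, so a fair comparison would use the CRT's viewable diagonal. The function name `viewable_area` is hypothetical.

```python
import math


def viewable_area(diagonal_in: float, aspect_w: float = 4.0, aspect_h: float = 3.0) -> float:
    """Return the screen area in square inches for a given viewable diagonal.

    Assumption: a rectangular screen with the given aspect ratio (4:3 by default)
    whose quoted diagonal is entirely viewable.
    """
    # Scale the aspect-ratio triangle so that its hypotenuse equals the diagonal.
    hyp = math.hypot(aspect_w, aspect_h)
    width = diagonal_in * aspect_w / hyp
    height = diagonal_in * aspect_h / hyp
    return width * height


if __name__ == "__main__":
    # A 15-inch 4:3 panel: 12 in wide x 9 in high = 108 square inches.
    print(viewable_area(15))  # prints 108.0
```

Because area grows with the square of the diagonal, small differences in viewable size matter: by the same formula, a 16-inch viewable diagonal gives about 122.9 square inches, roughly 14% more than a 15-inch one.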

Nevertheless, plunking down the extra cash may make sense for certain digital imaging applications or environments. The best way to decide may be to consider the strengths and weaknesses of each technology. All LCDs are thinner, lighter, less power-hungry, and cooler-running than CRTs, as well as being free of potentially dangerous low-frequency emissions. The best of the current crop of color LCD monitors also boast higher brightness and better contrast ratios than CRTs, and the complete absence of several common CRT problems, including misconvergence, poor focus, and nonlinearity (e.g., pincushioning). The sharp, bright, flicker-free image (which reduces eyestrain and fatigue), along with reduced emission of heat and electromagnetic radiation, makes top-quality LCD monitors a good choice for long sessions of QC work, or for any work that requires a great deal of time in front of a monitor.

The downside of LCDs, in addition to their high cost, includes:

Before taking the LCD plunge, be careful, since the quality of LCD monitors varies greatly and price is not always the best indicator. Look for an active-matrix or TFT (thin film transistor) LCD monitor. Other LCD technologies, such as dual-scan and STN (super twisted nematic), are less expensive but produce inferior images. Also, look for one with a digital interface card. This adds slightly to the cost and precludes attachment of a CRT, but eliminates the image degradation associated with analog-to-digital conversion. If at all possible, check out the monitor in person, or at least study product reviews before purchasing.

Just as LCDs are finally reaching the technological maturity to compete with CRTs, a dazzling array of new flat panel (1) technologies are appearing on the horizon that may challenge LCDs as a successor to the CRT. Table 1 provides a summary of some technologies that might appear in desktop monitors within the next five years. Other flat panel technologies (e.g., ferroelectric LCD, plasma, plasma-addressed LCD, digital micromirror devices) are currently not slated for desktop monitors due to various limitations in function or manufacture, but may appear in either very small (viewfinders, projection devices) or very large (HDTV screens, advertising displays) applications.

Table 1: Emerging Display Technologies

| Name | Characteristics | Applications | Major Backers | Availability |
|---|---|---|---|---|
| Field Emission Display (FED) | Pros: Thin, lightweight, durable, low power consumption, bright image, wide viewing angle. Cons: As expensive as LCD | Numerous, including many current CRT and LCD uses | Motorola | 1-2 years |
| ThinCRT™ | Similar to FED | Similar to FED | Candescent, | 4-5" units now in prototype |
| Light Emitting Polymers (LEP) | Pros: Very thin, flexible, fast response time, wide viewing angle, very high resolution, low power consumption. Cons: Still experimental, limited color range | Initially, alphanumeric readouts. Later, TV and computer displays | Cambridge Display Technology, Philips, Seiko-Epson, H-P | 5 years |
| Roentgen | Pros: High resolution (200 dpi). Cons: High power consumption, high price | Medical imaging, architectural drawings, digital libraries and archives | IBM | 1-2 years |
| Gyricon | Pros: Extremely thin and flexible, non-volatile image, very high resolution, inexpensive. Cons: Monochromatic | Electronic books and newspapers, portable scanners, portable displays, very large displays, and fold-up displays | Xerox | 2 years or more |
| Electronic Ink (E Ink) | Similar to Gyricon | Initially, price tags, point-of-sale signs, traffic warning signs. Later, electronic books and other flexible displays, up to very large sizes | E Ink, | 0-5 years or more |

General Information on CRTs and LCDs:

The PC Technology Guide, CRT Monitor

The PC Technology Guide, Panel Displays

LCD Monitor Reviews:

ZDNet PC Magazine, "Flat Panels: Worth the Price?"

PC World Online, "LCD Monitors: Light, Slight, and Stylish"

1. Note that "flat panel" is not the same as "flat screen." "Flat screen" usually refers to CRT monitors that have a flat face, helpful in reducing distortion and glare.

Calendar of Events

Digital Documents Track at the Hawaii International Conference on System Sciences

January 4-7, 2000

Maui, Hawaii, is the location for this session on digital document understanding and visualization. Topics addressed include research on how organizations and individual users seek to understand and navigate through information retrieval.

Interdisciplinary Conference on the Impact of Technological Change on the Creation, Dissemination, and Protection of Intellectual Property

February 10-12, 2000

The Ohio State University College of Law, Columbus, Ohio, will sponsor this multidisciplinary conference on intellectual property and digital communications.

VALA Biennial Conference and Exhibition

February 16-18, 2000

The Victorian Association for Library Automation (VALA) is sponsoring this conference, which will be held in Melbourne, Australia. The forum will focus on technologies for the hybrid library, including electronic and digital management of information. Topics include metadata; mark-up languages such as SGML, XML, and RDF; digital publishing; and archiving electronic resources.

The Humanities Advanced Technology and Information Institute: Digitization for Cultural Heritage Professionals

March 5-10, 2000

Having been offered for the past two years in Glasgow, this workshop will now be held in Houston, Texas. Participants in the course will examine the advantages of developing digital collections of heritage materials and investigate issues involved in creating, curating, and managing access to such collections. The lectures will be supplemented by seminars and practical exercises.

Announcements

National Library of Australia: Preserving Access to Digital Information (PADI) Website

The National Library of Australia has redeveloped this site into a comprehensive subject gateway with search capabilities and maintenance processes that will help the Library keep it up-to-date. A new discussion list, padiforum-l, has been set up for the exchange of news and ideas about digital preservation issues.

Digital Imaging for Photographic Collections

Franziska S. Frey and James M. Reilly's Digital Imaging for Photographic Collections provides guidelines for cultural institutions that are converting their photographic collections to digital form. Funded by the National Endowment for the Humanities, this publication is available in print or electronically from the Image Permanence Institute.

Ad*Access

The Rare Book, Manuscript, and Special Collections Library at Duke University has made available Ad*Access, a Website offering an online image database of over 7,000 advertisements printed in U.S. newspapers and magazines between 1911 and 1955. The examples are drawn from five major categories of significant research interest: Beauty and Hygiene; Radio; Television; Transportation; and World War II.

The Hoagy Carmichael Collection

The Indiana University Digital Library Program and Archives of Traditional Music have released the Hoagy Carmichael Collection on the Web. It includes selections of Carmichael's music, images of manuscript and holograph music, letters, photographs, and lyric sheets. The Web site features a virtual tour of the Hoagy Carmichael Room at the Archives of Traditional Music, as well as more in-depth information for scholars, including complete finding aids.

Historic Pittsburgh Digital Collection

The Digital Research Library, a division of the University Library System, University of Pittsburgh, announces the availability of the Historic Pittsburgh digital collection. Digital editions of sixty-one volumes on the history of Western Pennsylvania are currently available and fully searchable. In addition, 19th- and early 20th-century real estate plat maps originally published by the G.M. Hopkins Company have been converted to digital images.

Performing Arts Data Service Report

The Performing Arts Data Service (PADS) has issued a report that focuses on the dissemination of moving image resources over computer networks to users in United Kingdom Higher Education. The project, known as the BFI/BUFVC/JISC Imagination/Universities Networked Moving Images Pilot Project, was initiated jointly by the British Film Institute (BFI), the British Universities Film and Video Council (BUFVC) and the Joint Information Systems Committee (JISC) of the UK Higher Education Funding Councils.

RLG News

Moving Theory Into Practice to be Available in Early 2000

Written by Anne R. Kenney and Oya Y. Rieger, Cornell University, this new publication from RLG is a self-help reference for libraries and archives that choose to retrospectively convert cultural resources to digital form. Moving Theory into Practice advocates an integrated approach to digital imaging programs, from selection to access to preservation, with a heavy emphasis on the intersection of institutional, cultural objectives and practical digital applications. As its title suggests, the aim of this book is to translate theory into practice, offering guidance and recommended practices in a host of areas associated with digital imaging programs in cultural institutions.

The new publication focuses on an interdependent circle of considerations associated with digital imaging programs in cultural institutions. The chapters have been written to foster critical thinking in a technological realm, to support informed decision making and to assist program managers, librarians, archivists, curators, systems staff, and others with the range of issues that must be considered in implementing and sustaining digital imaging initiatives.

Complementing the book's nine chapters, some thirty sidebars highlight major issues, point out pertinent research trends, and identify relevant emerging technologies and techniques. Utilizing this approach, the book offers the intellectual contributions of more than 50 outstanding professionals with special knowledge, experience, and insights. Further details, including the table of contents, publication information, and options for advance ordering, can be found at the new Moving Theory Into Practice web site.

Hotlinks Included in This Issue

Feature Article

The LAURIN Project:

http://laurin.uibk.ac.at/

Technical Feature

Cottonian Library collection:

http://www.bl.uk/diglib/magna-carta/magna-carta.html

Digital Atheneum Project:

http://www.digitalatheneum.org

manuscripts:

http://www.uky.edu/~kiernan/OBx/X42-57v.html

Conference Report

Arts and Humanities Data Service (AHDS):

http://ahds.ac.uk/

CEDARS:

http://www.leeds.ac.uk/cedars/

CEDARS 2:

http://www.leeds.ac.uk/cedars/cedars2/index.htm

Emulation in the Long-Term Preservation of Digital Publications:

http://www.clir.org/pubs/reports/rothenberg/contents.html

Networked European Deposit Library (NEDLIB):

http://www.konbib.nl/nedlib/

Open Archival Information System (OAIS):

http://www.ccsds.org/RP9905/RP9905.html

Report and Survey of Digital Preservation Needs:

http://www.rlg.ac.uk/preserv/digpres.html

RLG and CPA Task Force on the Archiving of Digital Information:

http://www.rlg.ac.uk/ArchTF/

Tools (AHDS):

http://ahds.ac.uk/manage/licstudy.html

Highlighted Web Site

Open eBook Initiative:

http://www.openebook.org/

FAQs

E Ink, Electronic Ink:

http://www.lems.brown.edu/vision/people/leymarie/Busy/Akebia/Eink/QandAGeneral.html

Field Emission Display or FED:

http://www.mot.com/ies/flatpanel/technology.html

Gyricon, Electronic Paper:

http://www.parc.xerox.com/dhl/projects/epaper/

Light Emitting Polymers or LEP:

http://www.cdtltd.co.uk/features.htm

The PC Technology Guide, CRT Monitor:

http://www.pctechguide.com/06crtmon.htm

The PC Technology Guide, Panel Displays:

http://www.pctechguide.com/07panels.htm

PC World Online, "LCD Monitors: Light, Slight, and Stylish":

http://www.pcworld.com/top400/article/0,1361,11534,00.html

Roentgen:

http://www.research.ibm.com/news/detail/factsheet200.html

ThinCRT™:

http://www.candescent.com/Candescent/showcase.htm

ZDNet PC Magazine, "Flat Panels: Worth the Price?":

http://www.zdnet.com/pcmag/stories/reviews/0,6755,2235569,00.html

Calendar of Events

Digital Documents Track at the Hawaii International Conference on System Sciences:

http://www.hicss.hawaii.edu/HICSS_33/ddcfp.htm

The Humanities Advanced Technology and Information Institute: Digitization for Cultural Heritage Professionals:

http://www.rice.edu/Fondren/DCHP00/

Interdisciplinary Conference on the Impact of Technological Change on the Creation, Dissemination, and Protection of Intellectual Property:

http://www.osu.edu/units/law/intellectualproperty.htm

VALA Biennial Conference and Exhibition:

http://www.vicnet.net.au/~vala/conf2000.htm

Announcements

Ad*Access:

http://scriptorium.lib.duke.edu/adaccess/

Digital Imaging for Photographic Collections:

http://www.rit.edu/~661www1/sub_pages/frameset2.html

Historic Pittsburgh Digital Collection:

http://digital.library.pitt.edu/pittsburgh/

The Hoagy Carmichael Collection:

http://www.dlib.indiana.edu/collections/hoagy/

National Library of Australia: Preserving Access to Digital Information (PADI) Website:

http://www.nla.gov.au/padi/

Performing Arts Data Service Report:

http://www.pads.ahds.ac.uk/ImaginationPilotProjectCollection

RLG News

Moving Theory Into Practice:

http://www.rlg.org/preserv/mtip2000.html


Publishing Information

RLG DigiNews (ISSN 1093-5371) is a newsletter conceived by the members of the Research Libraries Group's PRESERV community. Funded in part by the Council on Library and Information Resources (CLIR), it is available internationally via the RLG PRESERV Web site (http://www.rlg.org/preserv/). It will be published six times in 1999. Materials contained in RLG DigiNews are subject to copyright and other proprietary rights. Permission is hereby given for the material in RLG DigiNews to be used for research purposes or private study. RLG asks that you observe the following conditions: Please cite the individual author and RLG DigiNews (please cite URL of the article) when using the material; please contact Jennifer Hartzell at Jennifer.Hartzell@notes.rlg.org, RLG Corporate Communications, when citing RLG DigiNews.

Any use other than for research or private study of these materials requires prior written authorization from RLG, Inc. and/or the author of the article.

RLG DigiNews is produced for the Research Libraries Group, Inc. (RLG) by the staff of the Department of Preservation and Conservation, Cornell University Library. Co-Editors, Anne R. Kenney and Oya Y. Rieger; Production Editor, Barbara Berger Eden; Associate Editor, Robin Dale (RLG); Technical Support, Allen Quirk.

All links in this issue were confirmed accurate as of December 13, 1999.

Please send your comments and questions to preservation@cornell.edu.
