Table of Contents

- Editors' Interview

The National Library of Australia's Digital Preservation Agenda, an Interview with Colin Webb

- Technical Feature

Archiving and Preserving PDF Files, by John Mark Ockerbloom

- Highlighted Web Site - Project NEDLIB (Networked European Deposit Library)

- FAQ - Recordkeeping Metadata Projects

- Calendar of Events

- Announcements

- RLG News

- Hotlinks Included in This Issue

The National Library of Australia's Digital Preservation Agenda, an Interview with Colin Webb

cwebb@nla.gov.au

We are pleased to present to you the second Editors' Interview, a new occasional feature of RLG DigiNews that we introduced in August 2000. Our interviewee is Colin Webb, Director of Preservation, from the National Library of Australia. Webb has sought to bring a strong preservation perspective to the National Library's initiatives and even created the first, and still the only, specialist position in digital preservation in Australia.

Anne R. Kenney and Oya Y. Rieger, Co-Editors

Recognizing that digital resources are a significant part of tomorrow's heritage, the National Library of Australia (NLA) has pioneered the development of a national digital preservation policy. What are the characteristics of the information environment that have allowed such a coordinated effort in Australia?

Many things have made this possible. Of course, the technology that supports remote access also makes it possible to operate distributed archives, and even if it turns out to be more cost effective to centralise digital archives, as noted by Kevin Guthrie of JSTOR in a previous RLG DigiNews Editors' Interview, there will be stakeholders with an interest in approaching this collaboratively.

On the other hand, it's not surprising that it's been easier to envisage a national program in Australia than it might be in some other places. The library community here has a long history of cooperation on many fronts including a national bibliographic database, resource sharing, and preservation. This history, in turn, reflects a number of influences, including distance and the risk of potential isolation; the need to make the best use of adequate but constrained resources to serve a community with high expectations; a natural relationship between government-owned libraries at the federal, state and territory levels; and the influence of generations of individual librarians who could see that we had more to gain by working together than by working alone.

Of course, cooperation doesn't emerge from itself or sustain itself. The National Library's leadership role in the Australian library system specifically includes fostering and enabling cooperation, and it has worked very successfully towards this with a number of networks including the Council of Australian State Libraries (CASL) and the Council of Australian University Librarians (CAUL). These and other formal and informal networks have proved to be effective for communicating and discussing the vision of national action. I can see at least three other factors that have motivated Australian libraries to work together on digital preservation issues:

- The high likelihood that preserving access to the nation's digital information resources is beyond the capacity of any one institution in this country;

- A concern for survival in what looked like an increasingly hostile world for libraries. If libraries can take a leading role in ensuring ongoing access to their information resources, they should have an edge over potential competitors (expressed by one colleague as the race to prove that these apparent dinosaurs can adapt to the post-collision world);

- A commitment by the NLA to learn by doing and thus to develop policy and practice closely together. This approach has allowed action to start long before all questions are answered, and allowed others to play a limited but valuable role while NLA accepts most of the risks and costs of learning.

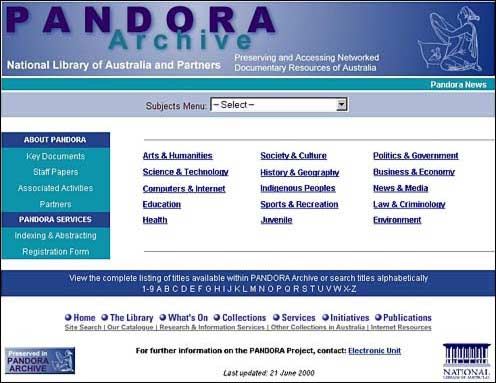

Can you describe the goals of PANDORA? What are the challenges associated with developing such a national archiving and preservation model?

The ultimate goal of PANDORA is to preserve access to a collection of significant Australian digital publications. Through its history some process goals have also been very important: developing a practical model for dealing with the immediate threat of disappearing websites; testing the feasibility of a set of business processes for collecting and managing online digital publications; establishing a workable distributed archive; investigating options for dealing with long-term preservation challenges.

Perhaps another way of putting it is to say that the Library's goals for PANDORA have always been both outcome-oriented (to preserve digital publications), and learning-oriented (to grow in our understanding of how to do it).

It would be nice to only have to work out the goals and then sit back and relax, but as expected there have been many challenges. We could fill this issue of RLG DigiNews with them if we wanted to go into a lot of detail, but these are some of the challenges that have been faced, if not overcome:

- Developing an ambitious but sensible vision, working out whether this is our business and why we are doing it. People don't need to feel guilty about letting this archiving and preservation business go if they don't need to be in it.

- Understanding what we're doing and developing ways of delivering the vision. It's challenging but exciting to be in at the start where no one is able to tell you much about how to do it.

- Working with rights owners; respecting their rights while taking steps to make their creations available to as wide a range of users as possible, over as long a period as possible.

- Actually getting information resources into the haven of the archive before they are lost.

- Working with such a diversity of formats, including things that are just not made to look the same tomorrow as they looked today.

- Recognising and preparing for threats of losing access.

- Defining preservation objectives at all levels right down to: "is it okay if this bit of the file doesn't work?"

- Recognising what will be most cost-effective for dealing with which material in what circumstances, especially when the circumstances haven't happened yet.

- Maintaining credibility while repeatedly saying: "Preservation is critical and must be a top priority, but I can't tell you how we will do it yet."

- In building a distributed archive, reaching arrangements that give all partners a clear understanding of their commitments in an environment of varied expertise, resources, and technical support.

- Seeing how PANDORA fits into a bigger picture, continually drawing boundary circles while having a responsible concern (if not a responsible role) for digital information outside our circle.

- And, getting enough to do the job—enough resources, enough expertise, enough technology horsepower, enough partners, enough management skills, enough brain cells, enough hours in the day.

This very incomplete list looks daunting enough, but we remain optimistically confident PANDORA has a successful future!

PANDORA is built on a selective collection development approach, recognizing that not everything can be saved and archived. How were the selection guidelines developed? Are there any procedures for periodic weeding?

You're right about our selective approach. It's based on a number of premises, only one of which fits the "let ephemera be ephemeral" description. We felt we had to choose between mass harvesting of Web documents without much quality control of the results, or selective collecting with much more control over whether things worked. A selective approach also made it feasible to negotiate for access rights for everything in the archive, something that would be impossible with a mass collecting approach. The Library's decision was to use its resources to underwrite functionality and access, and build a high quality selective archive.

There are some other benefits we get from this selective approach, such as close working relationships with publishers, and an intimate knowledge of what's happening in digital publishing, but the main determinant of our selective approach has been necessity, once we decided we wanted an archive of files that worked and publications that could be used.

I must emphasise that we're also interested in capturing snapshots of the whole Australian Web (while that concept still has some meaning, however blurry), so we are exploring some options for achieving that goal. However, such a step is most likely to be in addition to our high-value selective archiving, not in place of it.

How did we develop our selection principles? Well, your reference to collection development is apt, because our starting point was to recognise our existing collection development policies (CDP) as tools that could tell us what kinds of information we wanted to collect and preserve.

A group of stakeholders across NLA was given the job of determining a set of selection guidelines. The group worked by looking at proposed website documents and deciding whether they had sufficient research merit to be worth saving. At the start, this was a fairly intuitive process informed by our existing CDP for Australian publications. We found we could quite quickly establish principles that would usefully guide us in making later decisions, although we've needed to keep on testing them.

While our underlying selection principles have been quite stable for some years now, we need to keep questioning principles such as excluding publications for which there are equivalent print versions available. When we decided to take this line, it reflected the concern about resources I've already mentioned: why use scarce staff time trying to save electronic versions of things we could save much more easily in paper form? That still makes some kind of sense, but it is challenged by increasing demand to use digital things digitally.

I suspect we are still working through the question of whether selection for PANDORA means forever. While we may well be forced to address the possibility of de-selection, experience in weeding analogue collections suggests that it is often more expensive to identify material and document the de-selection process than it is simply to keep storing the material. The economics of digital preservation may or may not change that.

In a related vein, we have recently introduced internal guidelines for selecting physical format digital publications (diskettes and CD-ROMs) for preservation action, implying that the non-selected items in the collection will be allowed to fail—a kind of de-selection.

On the other hand, de-selection of archived material down the track will challenge one of our fundamental principles, which is to declare our preservation intentions for each item and to reliably deliver on that commitment while it is in our power to do so.

Australia has a strong electronic archiving community with promising metadata initiatives such as the SPIRT Recordkeeping Metadata Project at Monash University. Is the PANDORA metadata architecture informed by these initiatives? What is the relation and interaction between the library and archival communities (particularly electronic archives) in digital preservation efforts?

My answer is a bit "yes, and no."

PANDORA's metadata architecture has been through a number of evolutions, and at each stage it has been informed by the models we were aware of. Because PANDORA was a fairly early starter, its early data modelling did not have many other models to draw on. And because its architecture seems to work well as we rigorously and repeatedly subject it to internal scrutiny, it has perhaps been less shaped by external models than if we had started later.

Consequently, the relationship between PANDORA and a number of what you rightly call promising metadata initiatives coming out of the Australian archives community is not a particularly detailed or close one, although we have used them as benchmarks against which to test PANDORA's metadata.

These initiatives include the Monash University-led Recordkeeping Metadata Project (federally funded under the Strategic Partnerships with Industry—Research and Training, or SPIRT program); the National Archives of Australia's Recordkeeping Metadata Standard for Commonwealth Agencies 1999, developed in tandem with the Monash project; and the Victorian Electronic Records Strategy project led by the Public Record Office of Victoria.

So far, the contact between library and archives communities on digital preservation has been on a more general level, featuring agreement about the key issues, rather than intimate collaboration on operational details.

I suspect that this period of somewhat separate development, within a context of shared understanding and commitment to action, will prove to be highly beneficial. The purposes and nature of publications and records do differ enough to warrant slightly different approaches; we also need to foster diversity as a way of keeping options available that are sometimes lost in a completely standardised world.

However, the main benefit is likely to be the deep understanding we have each developed of the impact of digital technology on our respective business principles. When we come to a period of greater operational convergence, this will surely help us do it better.

Could you describe the goal and the status of the Digital Services Project as it relates to the Library's digital preservation efforts?

The Digital Services Project (DSP) is meant to give the Library a technical infrastructure to help achieve our objectives in building, managing, accessing and preserving our digital collections.

These collections include the distributed PANDORA archive of online publications, our growing collection of "offline" digital publications issued on physical format carriers, our large collection of oral history audio files, the output of our digital imaging projects, and parts of our NLA website that we want to keep, such as PADI.

The project should help us achieve a consistency of approach and an efficiency of operations that we have sought through all of the system planning and development for PANDORA and for the individual digitisation projects we have undertaken.

Such large projects tend to take on a life of their own, so we've had to keep in mind that the DSP is only a means of achieving our objectives, and not an end in itself. However, it has been tremendously useful in helping us refine those objectives while we think about how we might realise them.

This process has also forced us to think about underlying models such as the Open Archival Information System Reference Model (OAIS), which we have used as a "conceptual check" for our practical planning. (In turn, it's been invaluable to have something like PANDORA as a "reality check" when we've looked critically at drafts of the OAIS model.)

The DSP has been through three public stages:

- In late 1998 we released an Information Paper and asked for comments.

- In mid-1999, taking account of feedback on the Information Paper, we released a Request for Quotation for a metadata repository and search system, and a Request for Tender (RFT) for an integrated digital collection management system.

- The failure of this RFT to identify a suitable integrated solution to the Library's needs led to a new round in 2000, in which we sought a new collecting system for PANDORA (to be developed in-house), together with a digital object management system (DOMS) and a digital object storage system (DOSS), both to be procured by a tender process.

The Library is currently nearing the end of its evaluation of tenders for the last two systems, and should announce its procurement/development intentions very soon.

Developments in building a new suite of tools for collecting publications for PANDORA already suggest the DSP will represent a quantum leap for the Library and its PANDORA partners in managing such an archive cost effectively.

However, we are not too starry-eyed about DSP and preservation: it will enable, not fulfil, our preservation goals. There are two very good reasons for this:

- First, we don't know what preservation strategies we will have to use, so it has not been possible to specify them in detail or to evaluate them in any tender response;

- Second, at best DSP will have to be an interim solution, like all current solutions in this field. What it achieves in terms of managing our collections will be significant enough, but like preservation itself, it will have to evolve.

The Library is also keenly watching overseas developments as other national libraries engage in similar infrastructure procurement programs.

There seems to be a great level of interaction and synchronisation among the various divisions of the NLA. For example, we understand that several specialists within the library helped develop the Digital Services Project. What is the strategy of the NLA in developing its digital preservation agenda? What is the role of the Preservation Branch within this effort?

The Library's approach has been very much about ownership of digital management issues right across the organisation, wherever that is an aspect that can be integrated with existing collection management roles.

Sometimes this has meant bringing new expertise in from outside, but more frequently Library staff have been expected to develop a level of expertise that allows them to play at least a significant, and often a leading, role.

Sometimes it has also meant the establishment of specialist sections to look after particular aspects, but these teams are always managed in the context of existing Library organisational structures and are never expected to develop a separate agenda. On issues relevant to digital archiving and preservation, the Library created an Electronic Unit within Technical Services to manage the collecting and processing aspects of PANDORA, a Digital Preservation section within Preservation Services, a manager for the Digital Services Project within IT, and special support teams also within the IT Division. In all cases, the work of these units is guided by steering committees made up of senior staff responsible for delivering the Library's full range of programs.

This approach has paid off in terms of integration, continuity and commitment, even though at various times we've all wished for more efficient ways of doing things, better access to expertise that could solve our problems instantly, fewer matrix teams, fewer meetings, and someone else to blame for delays!

Even after seven or eight years of visible activity in this integrated way, we still think about the question of "who does what?" The Preservation Branch is particularly committed to playing an active role on any issues affecting long-term accessibility of the digital collections. We can't afford the luxury of saying it is none of our business. At the same time, effective action will require skills, equipment, and staff numbers that aren't in place at present. Somehow, we have to make progress—real "make a difference" progress—while also managing our responsibilities for the rest of the collections.

I am enormously encouraged by the history of change in NLA on these issues. My former boss, Maggie Jones, used to worry that Preservation would either be marginalised when it came to preserving the Library's digital collections, or be landed with the lot. At least in part because of her efforts to find a better path, that hasn't happened, and all parts of the Library have done extremely well at working together, drawing on the special skills and perspectives we each have to offer. It's been a fairly remarkable team effort, and I suspect we will just have to keep doing it that way.

Is there a strategic NLA plan for digital preservation? Are there any new digital preservation initiatives brewing at NLA?

NLA managers are expected to get a feeling for just how much strategic planning is needed to guide our strategies, and how much practical experience is needed to guide our strategic thinking.

Many of our road maps are quite public documents, such as the Library's Directions for 2000-2002 or our various Digital Services Project documents. We also have a number of internal planning documents relating specifically to digital preservation. We are only now attempting to put together a policy document that really says what we plan to do. It will contain a lot of uncertainties, because many challenges remain unsolved. However, the document should help us bring together all the apparently disparate strands of activity we need to take.

To give some flavour of where we're going: there are some long-standing projects and needs that are moving to fruition, such as a concern to give useful advice to creators on making their publications archivable (see Safeguarding Australia's Web Resources: Guidelines for Creators and Publishers); working with RLG and OCLC on development of a preservation metadata standard; deciding on a suitable system of persistent identifiers that will work for Australian libraries; formalising our data recovery procedures (especially helpful for manuscript materials on old diskettes); and testing our understanding of both migration and hardware emulation strategies for our collections.

At the same time, we need to take some new steps, including working with some new partners in archiving digital publications, especially universities; building a better map of what digital information is being created and who, if anybody, is looking after it; developing virtual or actual software archives; and improving our ability to work with others in Australia in reaching decisions about our long-term preservation commitments.

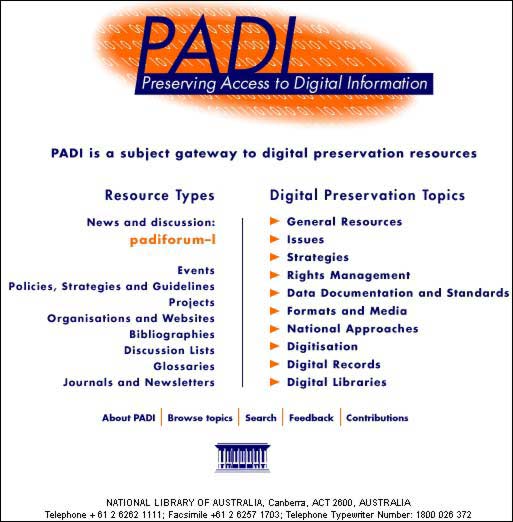

The NLA has been a big supporter of information sharing. For example, PADI was developed by the NLA as an international forum to share information. What are the future plans for this resource?

We're convinced that information sharing is critical for institutions and individuals struggling with digital preservation issues. PADI is just one manifestation of NLA's commitment to open and focused communication in this area.

Ultimately we need to do a lot more than just share information, so a resource like PADI is not meant to be an end in itself, but a way of facilitating well-informed decisions. The vision behind PADI has always been to get the available ideas and approaches lined up together so we can understand and evaluate them, and perhaps see new possibilities.

PADI (which stands for Preserving Access to Digital Information) grew out of an Australian group representing a range of sectors concerned with preserving electronic information resources. By 1998 it had become apparent that PADI needed more focused attention if it was to go forward, so NLA decided to invest solidly in both the human and technical resources supporting it. In improving its presentation, coverage and sustainability, we also decided that we had to take serious steps to improve the international ownership of PADI, inviting a number of prominent experts with proven commitment to digital preservation to form an International Advisory Group (IAG). Members of the IAG have been extremely helpful as a sounding board on strategic issues (like where to set the boundaries of relevance), and as friends actively promoting PADI.

There are three new(ish) directions we are currently developing:

- PADI Update is a new Web-based application that will allow registered users anywhere in the world to remotely maintain and add to the PADI database;

- formalising closer working relationships with a number of overseas programs interested in PADI's goals and willing to take collaborative action with PADI—we hope to announce details in the next few months;

- reaching agreements with owners of selected information resources available through PADI regarding the archiving of those resources.

There are also some areas of weakness in PADI that we especially want to address. The first is the likelihood that users without a background in digital preservation find the Web site dense and hard to understand. This is important to NLA because PADI's intended user group includes people who are just starting to look at digital preservation programs in areas of emerging digital publishing activity. (As a regional centre for the IFLA Preservation and Conservation Program, NLA is especially interested in such users in Australia, the Pacific, and South East Asia, but there must be many such potential users all over the world.)

We are currently working on ways of making PADI more useful to such users early in their thinking.

A second area of weakness is at the heart of PADI's purpose, in encouraging the comparison of approaches, the evaluation of progress, and the identification and filling of gaps in our shared knowledge. Eighteen months ago NLA staff prepared what we hoped would be the first in a series of annual reviews of progress, but we haven't found the time to build on that paper ("The Moving Frontier: Archiving, Preservation and Tomorrow's Digital Heritage," presented to the VALA2000 conference in Melbourne), or been successful in encouraging others to use PADI as the basis for similar papers.

Finally, the public discussion list associated with PADI, padiforum-l, is meant to be a vehicle for much more informal discussion of digital preservation issues and ideas. The list is a very light traffic lane on the information superhighway, and we want to consider whether there are things that would make it a more effective forum.

In the VALA2000 paper you mentioned, co-authored with Hilary Berthon, you strongly agreed with Marc Fresko's statement that "... if digital preservation were an iceberg, the cultural world is concentrating uniquely on the visible parts, ignoring the much bigger problems below the water line." Can you elaborate on this point?

What appealed to us about Marc's iceberg image was the inference of apparently large but photogenic challenges needing to be negotiated, with hidden dimensions just waiting to rip the side out of your boat and send your digital information passengers and your enthusiastic archiving crew to the bottom. The metaphor doesn't work completely, of course—we actually do need to get close to this iceberg, not steer away from it—but it does remind us that the current pressing challenges of establishing and building digital archives are not the end of the story. We have to give more attention to dealing with the threats of losing access over time. Without investing in those longer-term challenges, today's achievements in archive-building will be a very short-term affair. (Anyone like to swap deckchairs?) The metaphor has also become a bit dated in the couple of years since Marc made his statement: attention to the monster below the water line has increased quite a lot.

What are the key research areas that need to be explored to support long-term access to digital information? Are there any areas that are not getting enough attention or are being neglected?

The main areas where I would like to see more progress include:

- Making hardware emulation work. After years of scepticism I have recently been convinced that Jeff Rothenberg's approach has a lot going for it, although it is still a long way from an off-the-shelf, scaled solution that will meet our needs.

- Understanding when format migration works well enough.

- Understanding what needs to be in place for these or other strategies to work. I guess this is about thinking through the implications of applying particular strategies.

- Better indicators for action. Working with a very complex archive of diverse file formats, it's likely that NLA will need substantial lead times if it is to minimise the size of the technology museum it has to maintain for accessibility and the amount of data recovery it has to attempt for things that become inaccessible.

- Even though we don't want to rely on technology preservation or data recovery, they have both been useful. We need to understand how to manage them better.

- The levels of redundancy we need for safety.

- Skills and organisational structures we need.

- Models of accountability for distributed archiving.

- Understanding specific formats, the challenges they present, and how we might deal with them.

There's quite a lot there, even in that incomplete list. While people are working on each one of them, there seems to be a lot to do before their work bears fruit as implemented strategies in this country.

Thanks, Anne and Oya, for the chance to air some of these issues from an NLA perspective in RLG DigiNews.

Archiving and Preserving PDF Files, by John Mark Ockerbloom

Digital Library Architect and Planner, University of Pennsylvania

ockerblo@pobox.upenn.edu

Since its release in mid-1993, Adobe Portable Document Format (PDF) has become a widely used standard for distributing electronic documents in institutional settings worldwide. Much of its popularity comes from its ability to faithfully encode both the text and the visual appearance of source documents, preserving their fonts, formatting, colors, and graphics. PDF files can be viewed, navigated, and printed with the free Adobe Acrobat Reader, available on all major computing platforms. PDF has many applications and is commonly used to publish government, public, and academic documents. Many of the electronic journals and other digital resources acquired by libraries are published in PDF format.

As libraries grow more dependent on electronic resources, they need to consider how they can preserve these resources for the long term. Many libraries retain back runs of print journals that are over 100 years old, and which are still consulted by researchers. No digital technology has lasted nearly that long, and many data formats have already become obsolete and hard to read in a much shorter time period. This article discusses ways that libraries can plan for the preservation of electronic journals and other digital resources in PDF format. After a brief discussion of the file specifications and the future plans for PDF, the article focuses on issues related to preservation of PDF files.

Description of PDF

PDF is a platform-independent document format developed by Adobe as a follow-up to its PostScript language (which is now used almost universally in graphics-capable printers). Although PostScript was designed specifically for printing, PDF is meant to support on-line, secure, interactive use, as well as printing.

PDF combines attributes of structured text formats and traditional image formats, so that a document can be used both in terms of its text and in terms of its "look" on-screen or on the page. This allows PDF readers not only to display and print documents, but also to carry out text-oriented operations such as searching for text strings, or cutting-and-pasting. (However, as we will see below, it is also possible for a PDF document to consist only of uninterpreted page images—that is, pictures of the text but no machine-readable encoding of the text itself.)

Like PostScript, PDF is, at its heart, a page description language. However, unlike PostScript, it is more of a declarative than a procedural language (which is to say, the data is not wrapped up in program code as it is in PostScript). This makes PDF easier to manipulate and analyze than PostScript.

As with PostScript, the specification of PDF is openly and freely published (1), but Adobe controls its development. Three revisions of PDF have been published to date, with PDF 1.3 the latest version for which specifications are published. (Adobe Illustrator 9, released in 2000, outputs files in PDF 1.4, a version whose specification has not yet been published.) So far, all of the revisions for which specifications have been published are backward-compatible (that is, if you can understand PDF 1.3, you can also understand PDF 1.1 and 1.2). Because the basic standard is public, Adobe does not have a monopoly on PDF tools, and third parties have developed tools that also work with PDF. However, because the standard is complex, and changes from time to time, much of the support for the format, as well as the tools that are considered to set the standard for PDF use, comes from Adobe.
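For an archive, the version a file declares is worth recording at ingest. A PDF file opens with a header comment of the form `%PDF-1.3`, so the declared version can be read without a full parser. The Python sketch below is illustrative only: the function names `pdf_header_version` and `reader_can_open` are invented for this example, and the compatibility check simply encodes the backward compatibility described above, on the assumption that it continues to hold.

```python
import re
from typing import Optional, Tuple

# A PDF file opens with a header comment such as "%PDF-1.3".
_HEADER = re.compile(rb"%PDF-(\d+)\.(\d+)")


def pdf_header_version(data: bytes) -> Optional[Tuple[int, int]]:
    """Return (major, minor) from the header, or None if no header is found."""
    # Search a small window in case a few stray bytes precede the header.
    match = _HEADER.search(data[:1024])
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def reader_can_open(reader: Tuple[int, int], file_version: Tuple[int, int]) -> bool:
    """Assumption: a reader handles its own version and every earlier one."""
    return file_version <= reader


# Hypothetical file contents, for illustration only.
sample = b"%PDF-1.2\n1 0 obj\n<< /Type /Catalog >>\nendobj\n"
version = pdf_header_version(sample)
print(version, reader_can_open((1, 3), version))
```

The header records only what the producing tool claims, so a check like this is a first filter rather than a validation of the file's contents.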

A typical PDF document consists of a sequence of pages, each of which includes data that indicates what should be "drawn" on a particular page. This data includes text, font specifications, margins, layout, graphical elements, and background and text colors. A PDF file does not always contain the full glyph specification of characters in the document, but at least includes the names of the fonts used and specifies the space all characters will occupy. PDF includes metadata on the document, specifying things such as page size, page numbering, and a structured table of contents. Add-ons, such as hyperlinks, forms, and even pieces of scripting code in JavaScript or Postscript that control dynamic displays or the user interface, can also be included.

Not all PDF documents encode the text of a document directly. It is possible to embed images of a page (or of anything else) in PDF, so that a picture of text is encoded but not the text itself. Some scanners, for instance, can output this "uninterpreted" form of PDF. Such uninterpreted PDF files tend to be much larger than PDF files that encode text directly as characters, and text-oriented operations such as searching and textual copying and pasting are not supported by these files. Still, some libraries have produced these large, uninterpreted PDF files for their users as a way of quickly and inexpensively distributing scans of print materials. For applications such as electronic reserves, where scanned copies are typically not kept beyond a semester or two, this form of PDF can be acceptable. Some programs also exist that will attempt to recognize whatever text they can in a scanned PDF document, and replace the images of this text with a briefer encoding of the characters and fonts. Such programs tend to produce "hybrid" PDF files where encoded text strings in appropriate fonts are positioned inside page images, and any unrecognized characters are kept on the page in the form of images. Although such PDF files can be smaller than those that consist only of uninterpreted page images, they also often have only partial, and choppy, encoding of their underlying text, because recognition programs typically cannot recognize 100% of the text in a typical page scan. Moreover, misrecognized characters may even introduce errors into the "hybrid" document.

PDF was originally designed for 8-bit character sets (which can support at most 256 characters). Some limited support for Unicode also exists, but the format has not yet taken full advantage of Unicode's capabilities. One consequence of this design is that some multilingual documents may be represented by switching between different, sometimes customized, 256-character sets (each with its own font). Alternatively, some "exotic" characters may be represented as small images, rather than as text.
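The practice of switching between 256-character sets can be illustrated with a short sketch. It is not taken from any PDF tool: the function names and the choice of three ISO 8859 code pages are assumptions made purely for illustration. The sketch splits a multilingual string into runs, each of which fits within a single 8-bit encoding, and marks characters that no listed encoding covers (the cases that might end up as small images).

```python
# Assumed, illustrative choice of 8-bit code pages: Western, Cyrillic, Greek.
CODE_PAGES = ["latin_1", "iso8859_5", "iso8859_7"]


def fits(ch, encoding):
    """Return True if the character is representable in the 8-bit encoding."""
    try:
        ch.encode(encoding)
        return True
    except UnicodeEncodeError:
        return False


def split_into_runs(text, code_pages=CODE_PAGES):
    """Group characters into (encoding, substring) runs.

    A character joins the current run while that run's encoding can
    represent it; otherwise the first listed encoding that can represent
    it starts a new run. Characters covered by no encoding get None.
    """
    runs = []  # each item is [encoding_or_None, substring]
    for ch in text:
        if runs and runs[-1][0] is not None and fits(ch, runs[-1][0]):
            runs[-1][1] += ch
            continue
        chosen = next((cp for cp in code_pages if fits(ch, cp)), None)
        if runs and runs[-1][0] == chosen:
            runs[-1][1] += ch
        else:
            runs.append([chosen, ch])
    return [(encoding, substring) for encoding, substring in runs]


for encoding, substring in split_into_runs("Café Москва"):
    print(encoding, repr(substring))
```

Each run would need its own font and encoding in the file, which is why text extracted from such documents can be hard to reassemble into a single character stream.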

Unlike XML, PDF was not originally designed for arbitrary structural annotations, although the last revision of PDF includes some facilities for user-defined structural markup. PDF is a less efficient format for text analysis than purely text-based formats such as XML or HTML, and readers have noted that searching for words within a PDF viewer can be noticeably slower than searching for words in an HTML viewer. The central role that appearance plays in PDF is both a strength and a weakness; it allows publishers to specify exactly how they want a document to look, but it also makes it difficult for users to reformat or repaginate the document if that would be better for them.

PDF includes facilities for encrypting content, or directing that a document not be printable. It is possible to use encryption so that a document cannot be decrypted without a password. However, once the document is decrypted, it is possible, in theory, to make an unlocked copy that can be freely copied, read, or printed. Even if it is technically possible to do so, though, legal and practical restrictions to doing so may still exist. (2)

The Future of PDF

As data formats go, PDF is particularly likely to be supported for a long time, and to spawn migration paths. This has been the case with Adobe's Postscript, which is now an industry standard, and for which PDF itself can be seen as the next step in migration. Adobe is highly invested in the success of PDF, but even if Adobe fails or abruptly changes course, a community of third-party tools for handling PDF has started to emerge. PDF is used widely by many well-funded bodies (including the U.S. government, which is now using PDF as its standard way of distributing government publications) so there should be widespread support for using and migrating PDF, should Adobe fail to provide adequate support for the format.

Even so, it is likely that PDF will one day be superseded by another format. It may be a successor format (as PDF is to Postscript), or it may be a completely different format that users prefer over PDF. Hence, it is necessary to have migration strategies planned for PDF.

Migrating PDF

Currently, there is no other format that can encompass all of the features of PDF in a form that standard tools can interpret. However, it is possible to easily migrate two important aspects of PDF documents: the page images and (for many types of PDF files) the text. These aspects may be sufficient for the types of preservation that libraries require.

Any program that displays or prints PDF files in effect converts PDF pages into static page images. Most page image formats are pixel-oriented, where pictures are encoded using a grid with each grid cell (known as a pixel) associated with a particular color (or with no added color). Some image formats, including PDF and Postscript, are stroke-oriented, encoded with instructions about lines to plot, regions to color, and text to place. They can also include instructions to embed pixel-oriented images at specified locations. Pixel-oriented formats have a well-understood mathematical representation, making them easy to migrate. Stroke-oriented image formats are not so standardized. They can encode information about the structure of a picture, such as the lines that are drawn in it, that can only be approximated to a certain resolution in purely pixel-oriented formats. On the other hand, since pixel-oriented formats can typically accommodate very fine resolutions, an image converted from a stroke-oriented format to a pixel-oriented format at fine enough resolution can be visually indistinguishable from its original.
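To make the resolution trade-off concrete, the following sketch computes the pixel dimensions and uncompressed size of a rasterized page. The function name is hypothetical, the page size (US Letter) and resolutions are assumed examples, and real image formats add headers, row padding, and compression that this arithmetic ignores.

```python
def raster_size(width_in, height_in, dpi, bits_per_pixel):
    """Return (width_px, height_px, uncompressed_bytes) for a rasterized page."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    total_bits = width_px * height_px * bits_per_pixel
    # Round up to whole bytes; row padding and file headers are ignored.
    return width_px, height_px, (total_bits + 7) // 8


# US Letter (8.5 x 11 inches), bitonal, at 300 dpi
print(raster_size(8.5, 11, 300, 1))   # (2550, 3300, 1051875)
# The same page in 24-bit color
print(raster_size(8.5, 11, 300, 24))  # (2550, 3300, 25245000)
```

Doubling the resolution quadruples the pixel count, so the resolution chosen for a migration has a direct effect on storage requirements.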

Third-party software exists to turn PDF both into Postscript and into pixel-oriented image formats. Converting from PDF to most image formats loses the direct encoding of the text (making searching or cutting-and-pasting infeasible unless the text is OCR'd). Structural information and other PDF extras would also be lost in such a conversion.

PDF can also be converted into text, at least when the text (rather than pictures of the text) has been directly encoded in PDF files. Formatting and layout information would be lost in a straight text conversion, and the text conversion of certain pages with complex layout may not always be read in "logical" reading order, without human intervention. For example, if a PDF encoding of a two-column page first renders the left column and then the right, a simple text extraction from the PDF will yield a readable text transcription. On the other hand, if the PDF encoding goes back and forth between the two columns (as is possible in PDF, where drawing operations on a page can be defined in any order), or renders the right column before the left column, programs may have a much more difficult time reconstructing the text in the order it was meant to be read. Different PDF-generating programs may be more or less accommodating about rendering PDF text in a logical reading order. A straight text conversion would also lose structural information and other PDF extras, as well as non-textual items such as pictures, diagrams, and possibly equations. However, it is possible to write conversions to HTML or XML that would include a "table of contents" header that incorporated the structure of the PDF document, and that would preserve certain types of PDF hyperlinks. Text in non-European languages may also be difficult to migrate correctly, because of the previously-described limitations of PDF's support for Unicode, unless a standard set of well-defined fonts, with widely available specifications, is used to represent non-European characters.
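The two-column problem can be sketched in a few lines. The example assumes that an extractor has already produced text fragments with page coordinates (PDF-style, with the origin at the bottom left), and that the page is a strict two-column layout with no full-width headings. Both the fragment structure and the function name are hypothetical, and real pages would need more robust column detection.

```python
def reading_order(fragments, page_width):
    """Reorder (x, y, text) fragments from a two-column page.

    Coordinates follow the PDF convention (origin at bottom left, y grows
    upward). Fragments are assigned to the left or right column by the page
    midpoint, then read top to bottom within each column.
    """
    midpoint = page_width / 2

    def sort_key(fragment):
        x, y, _text = fragment
        column = 0 if x < midpoint else 1
        return (column, -y, x)

    return [text for _x, _y, text in sorted(fragments, key=sort_key)]


# Fragments in the order a file might draw them: alternating between columns.
drawn = [
    (72, 700, "Left line 1"),
    (320, 700, "Right line 1"),
    (72, 680, "Left line 2"),
    (320, 680, "Right line 2"),
]
for line in reading_order(drawn, page_width=612):
    print(line)
```

A heuristic of this kind breaks down on pages with sidebars, footnotes, or headings that span both columns, which is one reason human review may still be needed.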

Although it is possible to migrate the appearance of PDF files, or the text, migrating both of them into a single document would be more difficult. The only other formats commonly used to specify both the text and the appearance of documents are word-processor formats, and those tend to be proprietary, system-dependent, and more ephemeral than PDF itself is likely to be. Converting to these formats may also lead to subtly different appearances, depending on the implementation of the word processing program. RTF (Rich Text Format) may be the best target for word-processor-oriented conversions at this time, though it is far from a perfect choice.

Even if it is infeasible to migrate both the text and the look of PDF into a single document, it may still be possible to migrate PDF documents into a presentation that allows one to switch between text-oriented and image-oriented views of the same document. (Some on-line book projects now support this type of view for their titles. For example, some documents from the Library of Congress' American Memory project include both page images and transcriptions, with hyperlinks placed between corresponding pages of each version.)

In theory, it is possible to convert all of the information in a PDF file into an XML format with appropriate tags and attributes. However, at present there is no standard XML format used for representing PDF-like documents. Unless such a format were standardized, and then supported with software, converting to XML might be largely an academic exercise, since no software currently exists to interpret such a format, and software developed especially to interpret the new XML format could probably just as easily interpret the information in its original PDF form. On the other hand, an XML-based form of PDF might be easier for existing XML-based search and analysis software to process.
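As a purely hypothetical illustration of what such an XML form might look like, the sketch below builds one page element holding positioned text runs. The element and attribute names are invented for this example and do not correspond to any existing or proposed standard.

```python
import xml.etree.ElementTree as ET


def page_to_xml(number, width, height, fragments):
    """Build a <page> element from (x, y, font, size, text) tuples.

    The tag and attribute names here are illustrative assumptions only.
    """
    page = ET.Element("page", number=str(number),
                      width=str(width), height=str(height))
    for x, y, font, size, text in fragments:
        run = ET.SubElement(page, "text", x=str(x), y=str(y),
                            font=font, size=str(size))
        run.text = text
    return page


page = page_to_xml(1, 612, 792, [
    (72, 720, "Times-Roman", 12, "Archiving and Preserving PDF Files"),
])
print(ET.tostring(page, encoding="unicode"))
```

Even a simple layout like this shows the trade-off noted above: generic XML tools could search the text runs, but no existing viewer would know how to render the page from them.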

Interactive features of PDF would be harder to migrate, and Postscript, JavaScript, or other scripting snippets may be impractical to migrate on a large scale, because it is much harder to migrate program code than data. Libraries may want to avoid committing to preserving these "extra" features of PDF files, except in carefully limited cases.

Archiving PDF Documents

Certain preservation techniques, such as integrity checks and backups, are necessary to preserve any type of digital information. Beyond those basic requirements, institutions need three things to reliably preserve PDF-format documents so that they can be reused in perpetuity:

1) A specification of exactly what an institution is committing to preserve.

2) Quality control over the PDF documents acquired, to ensure that the format specifications (e.g., type of encoding, use of embedded scripting and encryption, etc.) match the archiving institution's guidelines.

Institutions that create PDF files meant for archiving, or who receive them from publishers, need to employ policies that ensure that the PDF they acquire is easy to migrate. In particular:

In addition to establishing standards for acquired PDF files, institutions may also find it useful to have tools to automatically check incoming PDF files and warn of any unexpected PDF constructs appearing in them. Such automated checks could be used to automatically flag malformed PDF files, or files that failed to meet institutional encoding standards, while avoiding expensive manual checks on all incoming files. However, writing such tools, or buying suitable tools developed by third parties, may also be nontrivial. Institutions with a common interest in preserving PDF may wish to collaborate on the development of such tools.
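A very rough sketch of such an automated check follows. It is a naive byte scan, not a PDF parser: it will miss constructs hidden inside compressed data and may flag strings that merely resemble PDF keywords. The function name, the warning messages, and the list of accepted versions are assumptions standing in for an institution's own guidelines.

```python
import re


def check_pdf_bytes(data, allowed_versions=("1.0", "1.1", "1.2", "1.3")):
    """Return a list of warnings for a PDF file held in memory as bytes.

    The accepted versions and the constructs flagged here are example
    policy choices, not requirements of the PDF specification.
    """
    warnings = []
    header = re.match(rb"%PDF-(\d\.\d)", data)
    if header is None:
        warnings.append("missing %PDF header")
    else:
        version = header.group(1).decode("ascii")
        if version not in allowed_versions:
            warnings.append("unexpected PDF version " + version)
    # The end-of-file marker should appear near the end of the file.
    if b"%%EOF" not in data[-1024:]:
        warnings.append("missing %%EOF marker")
    if b"/Encrypt" in data:
        warnings.append("encryption dictionary present")
    if b"/JavaScript" in data:
        warnings.append("embedded JavaScript present")
    return warnings


sample = b"%PDF-1.4\n<< /Encrypt 5 0 R >>\n"
for warning in check_pdf_bytes(sample):
    print(warning)
```

Files that produce warnings could be routed to a person for review, so that manual effort is spent only on the exceptions.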

3) Tools and procedures for migrating PDF into other formats.

Some software tools exist for extracting text and images from PDF files, and can be used for migration. Especially useful are tools that can be invoked automatically without human interaction. Examples in the free software world include Ghostscript for image migration, and Pstotext or Prescript for text migration. Some commercial conversion tools exist as well. Further research and development may be necessary to ensure that these conversion tools will correctly migrate all of the PDF files of interest to institutions. At present, image-based conversion does not appear difficult to complete and verify, but automatic extraction of text, due to the nature of text layout, may not always be perfect. One possible technique for increasing the reliability of automatically extracted text would be similar to the "double-keying" principle for data entry: run two independently developed text extractors against the same PDF file, and flag points where the extractors yield different text, or the same text in a different order. It remains to be seen how useful this technique would be in practice.
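The "double-keying" comparison might be sketched as follows, using Python's standard difflib module to compare the word sequences produced by two extractors. The function name is hypothetical, and the two input strings stand in for the output of real extraction tools, which are not invoked here.

```python
import difflib


def flag_disagreements(text_a, text_b):
    """Compare two extractions word by word and report where they differ.

    Returns a list of (kind, words_from_a, words_from_b) tuples, where kind
    is 'replace', 'delete', or 'insert' as reported by difflib.
    """
    words_a = text_a.split()
    words_b = text_b.split()
    matcher = difflib.SequenceMatcher(a=words_a, b=words_b, autojunk=False)
    flags = []
    for tag, a0, a1, b0, b1 in matcher.get_opcodes():
        if tag != "equal":
            flags.append((tag, " ".join(words_a[a0:a1]), " ".join(words_b[b0:b1])))
    return flags


first = "PDF is a platform-independent document format"
second = "PDF is a platform independent document format"
for flag in flag_disagreements(first, second):
    print(flag)
```

Comparing by words rather than characters ignores differences in line breaks and spacing, which extractors commonly disagree on without any real loss of content. A reordered passage would show up as a deletion in one place and an insertion in another.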

It may be useful to run migration routines on new PDF files as soon as they are acquired, even if migrated files are not yet required. After the migration is attempted, checks could be run to see if any problems were detected in the process. Institutions do not necessarily have to keep migrated copies of the files, as long as they have verified that migration works, and they preserve the original PDF files and the programs that will perform the migration (along with any necessary operating environment for these programs).

If one were being especially careful, though, it may be advisable to save alternative forms immediately, not just in digital form, but also in print form. Although such practices would further decrease the probability of total loss of PDF documents, they also may substantially increase the per-volume cost of preserving these documents. It is probably sufficient to have trusted methods of backup, integrity checking, mirroring, and migrating.
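Integrity checking of the kind mentioned here is commonly done by recording a cryptographic digest of each file and recomputing it later. The sketch below uses SHA-256 from Python's standard library, which is an assumed choice rather than one prescribed by this article. The function names and the in-memory "files" are illustrative only.

```python
import hashlib
import io


def digest_stream(stream, chunk_size=65536):
    """Return the SHA-256 hex digest of a binary stream, read in chunks."""
    hasher = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        hasher.update(chunk)
    return hasher.hexdigest()


def changed_files(recorded, current):
    """Return names whose current digest is missing or differs from the record."""
    return sorted(name for name, digest in recorded.items()
                  if current.get(name) != digest)


# In-memory stand-ins for a stored file at acquisition time and at audit time.
recorded = {"article.pdf": digest_stream(io.BytesIO(b"%PDF-1.3 example bytes"))}
audited = {"article.pdf": digest_stream(io.BytesIO(b"%PDF-1.3 example bytes"))}
print(changed_files(recorded, audited))  # prints []
```

In practice the streams would be files opened in binary mode, and the recorded digests would be stored separately from the files they describe.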

In summary, it is reasonable, given careful techniques such as those described above, for institutions to collect documents in PDF format with the expectation that they can be archived and preserved indefinitely, even as computer technology and standards advance. Some further experimentation and development is necessary for establishing reliable techniques for migration. We plan to engage in such experimentation and development in connection with a Mellon Foundation funded journal archiving project at the University of Pennsylvania Library. We would be very interested in sharing experiences and expertise with partner institutions.

Footnotes

(1) The most recently published PDF specification at this writing, for PDF 1.3, can be found at http://partners.adobe.com/asn/developer/technotes/acrobatpdf.html

(2) It is technically possible to undo any purely software-based copy-protection scheme, given sufficient resources to reverse-engineer and write tools for unlocking the copy-protected format. Undoing copy-protection will not in itself make an encrypted file interpretable, but if a file can be decrypted in software for display, it can also be decrypted to bypass its original access restrictions. Such decryption is unlikely to be supported by Adobe's standard tools, and the legal restrictions imposed by the Digital Millennium Copyright Act, or the proposed Uniform Computer Information Transactions Act (UCITA), may legally proscribe such actions. At the same time, doing so may sometimes be the only way of keeping a PDF file readable if changes in the computing environment make it infeasible to read in its original form. Librarians concerned about this issue may need to work to ensure that the law, and their contracts with electronic data providers, do not prohibit them from preserving their electronic data in this way.


Highlighted Web Site NEDLIB


FAQ

I've read a lot about the metadata work being done in digital library projects, but I know the archives community has been active in this area as well. Can you point me to key efforts by archivists that may also be of interest to digital librarians?

In the past decade, archivists have been heavily involved in designing electronic recordkeeping systems, and have made progress toward standards for digital archives. As Colin Webb indicated in the Editors' Interview, digital libraries also recognize the need for effective recordkeeping systems to ensure long-term preservation and reliable access to digital collections. Thus, while the specific needs of librarians are somewhat different from those of archivists and records managers, librarians should be aware of the electronic recordkeeping tools that are beginning to emerge from the archives community. Discussed below are four influential research projects on archival metadata, which may suggest some points of convergence with the parallel development of digital libraries. (1) Also, to stay generally up-to-date with what is happening in the archives community, you may want to examine some of the projects and strategic planning efforts sponsored by the National Historical Publications and Records Commission (NHPRC). (2)

1. University of Pittsburgh: Functional Requirements for Evidence in Recordkeeping Project (1994-96)

This groundbreaking project on electronic recordkeeping, funded by NHPRC, produced a comprehensive framework for use in designing recordkeeping systems, which librarians may find helpful to study, both for its content and for the systematic approach used by the Pittsburgh researchers. The Framework for Business Acceptable Communications (BAC) established generic guidelines in four key areas. First was the identification of a literary warrant for electronic recordkeeping, based on existing legal rules, professional standards and organizational best practices. The Pittsburgh project did not compile a literary warrant for libraries, but the functional requirements could be used as a starting point for drafting one. Second, the BAC includes a general set of functional requirements for recordkeeping systems. Librarians may also be interested in examining how the basic functional requirements were translated into a detailed set of production rules (the third component of the framework), the enforcement of which could potentially be automated within an electronic recordkeeping system. Fourth, the project compiled a detailed specification for metadata to satisfy the functional requirements for electronic recordkeeping.

The Pittsburgh project has inspired a number of follow-on efforts to implement and test the BAC framework. Notable efforts include the Philadelphia Electronic Records Project, and the Indiana University Electronic Records Project, which produced its own modified set of functional requirements and metadata specifications for managing the university's electronic records.

2. University of British Columbia: The Preservation of the Integrity of Electronic Records Project (1994-97)

This project addressed the problem of managing active electronic records (still in use by the creators) in a way that can guarantee archival reliability. The Preservation of the Integrity of Electronic Records (commonly known as the UBC project) consciously sought to apply the traditional archival principles of diplomatics (defined by the UBC researchers as "the study of the genesis, inner constitution and transmission of archival documents, and of their relationship with the facts represented in them and with their creator") to the management of electronic records. The UBC researchers were concerned with defining the essential metadata an active electronic record must have to be considered reliable as an archival record. The results of this project may be of some interest to digital libraries, especially as they become more active in enforcing copyrights for electronic resources. (3) Also, the UBC project members worked with the U.S. Department of Defense to create an important standard for records management software applications, some features of which may be of interest to digital libraries considering purchasing library management applications.

3. International Research on Permanent Authentic Records in Electronic Systems (InterPARES), (1999-present)

Although the UBC project has been much talked about in the archives community, librarians concerned with digital preservation may be more interested in following the ongoing work of the InterPARES project, a large international effort—organized by UBC—that is specifically addressing challenges of long-term preservation and the management of inactive records held by an archival repository.

The InterPARES project represents the largest and most comprehensive effort to date to ensure the authenticity and long-term preservation of electronic records held by archives. (4) This work began with the assumption that preservation and authenticity would require more than a set of technical approaches for migrating data or emulating electronic systems. Like the UBC project, InterPARES has sought to apply diplomatics in an effort to define the scope of electronic records in a way that is consistent with the traditional contents of paper records. Thus, the project's research agenda has included work on:

Final results from InterPARES are expected in late 2001, and should be worthwhile for digital librarians to consider closely as they plan for their own digital preservation needs.

4. Monash University: Strategic Partnerships with Industry Research and Training (SPIRT) Recordkeeping Metadata Research Project (1998-1999)

In recent years, the archives community in Australia has made vital contributions to electronic archives by applying its "continuum" approach to the management of electronic records. The records continuum approach holds that the reliability and authenticity of records depends heavily on the ability of recordkeeping systems to capture contextual information as metadata. In this view, records management is considered a holistic activity, requiring archivists to maintain close links between the records and the business transactions in which they were created. For digital libraries, the records continuum is of interest because it emphasizes the multiple roles played by records, as sources of information as well as organizational accountability.

In this context, the SPIRT Recordkeeping Metadata Project, led by researchers at Monash University, has been at the forefront of efforts to integrate different but overlapping metadata sets. The main outcome of the project is the Australian Recordkeeping Metadata Schema (RKMS), which is a "framework standard" for creating metadata sets for use in domain-specific recordkeeping systems. The RKMS emphasizes the need to document business transactions by maintaining close links between the agents involved in a business process, the specific transactions they carry out, and the resulting records. The framework includes its own general set of recordkeeping metadata elements, which the project team mapped against a variety of metadata standards. (See the table below.) This work represents an important step toward more flexible and interoperable metadata systems, making it well worth further study by digital librarians.

SPIRT Project's Conceptual Mapping to other Metadata Schema

| Metadata Schema | Archival Function |
| --- | --- |
| Encoded Archival Description (EAD) | archival description—syntax standard |
| General International Standard Archival Description, ISAD(G) | archival description—content standard |
| International Standard Archival Authority Record for Corporate Bodies, Persons and Families, ISAAR(CPF) | archival description—content standard |
| Recordkeeping Metadata Standard for Commonwealth Agencies (NAARKS) | records management—active records |
| Pitt project: Business Acceptable Communications (BAC) | records management—active records |
| Victorian Electronic Records Strategy (VERS) metadata specification | records management—active records (related to the Pitt project) |
| UBC project: The Preservation of the Integrity of Electronic Records | records management—active records |
| U.S. Dept. of Defense: Standard for Electronic Records Management Software Applications | records management—active records (related to the UBC project) |
| Commonwealth Record Series (CRS) System | archives—inactive records (with contextual metadata) |

Source: Glenda Acland, Kate Cumming, and Sue McKemmish, "The End of the Beginning: The SPIRT Recordkeeping Metadata Project" (1999).

Footnotes

(1) On the issue of convergence between digital libraries and archives, see Anne J. Gilliland-Swetland, Enduring Paradigm, New Opportunities: The Value of the Archival Perspective in the Digital Environment, Council on Library and Information Resources (2000).

(2) For a current bibliography of NHPRC-sponsored projects on electronic records, see http://is.gseis.ucla.edu/us-interpares/NHPRCbib.htm. For information on NHPRC's strategic planning, see Research Issues in Electronic Records (1991); Electronic Records Research and Development: Final Report of the 1996 Ann Arbor Conference (1997), and the NHPRC's 1997 Strategic Plan.

(3) For an extended discussion of these issues, see Peter B. Hirtle, "Archival Authenticity in a Digital Age," in Authenticity in a Digital Environment, Council on Library and Information Resources (2000).

(4) See Anne J. Gilliland-Swetland and Philip B. Eppard, "Preserving the Authenticity of Contingent Digital Objects: The InterPARES Project" D-Lib Magazine vol. 6, no. 7/8 (July/August 2000) for a thorough introduction to the project.

—PKB

Calendar of Events

National Initiative for a Networked Cultural Heritage/College Art Association Copyright Town Meeting

March 3, 2001, Chicago, IL

This NINCH/CAA Copyright Town Meeting is devoted to intellectual property that has been licensed for educational and scholarly use. It will focus on the distribution of copyrighted and other materials created to meet the current and emerging needs of university artists, librarians, and art historians.

Museums and the Web 2001

March 14-17, 2001, Seattle, WA

In its fifth year, Museums and the Web 2001 will focus on the state of the Web in arts, culture, and heritage. Speakers will present their ideas in sessions and panels that explore theory and practice at both basic and advanced levels. Pre-conference workshops provide in-depth study of methods and issues. Exhibits will feature new services, tools, and techniques to assist in creating and maintaining your Web sites.

Issues in Digital Librarianship: Accessing the Future

April 2-3, 2001, London, England

Organized by OCLC, the British Library, and the National Preservation Office, this conference will bring together leaders of the major national and research libraries in digital librarianship: to identify and define key issues facing libraries as a result of technological innovation; to create a shared awareness of trends, activities, resources, and skills; and to identify gaps. For further information contact: The National Preservation Office, npo@bl.uk.

Announcements

Going Digital: Issues in Digitisation for Public Libraries

Written by Neil Beagrie, Joint Information Systems Committee (JISC), this paper explores some of the key issues involved in developing digitisation projects in public libraries. Topics include the initial planning and implementation phases of a digitisation project, the key stages, and sustainability.

Digital Production Centre Established at the University of Amsterdam

The Digital Production Centre (DPC) provides support for creating, making available, and archiving electronic publications and databases. The DPC works closely with authors, offers technical support to create electronic publications, and supports an infrastructure to make this material available.

Safeguarding Australia's Web Resources: Guidelines for Creators and Publishers

The National Library of Australia has produced a set of guidelines on creating, describing, naming and managing Web resources to facilitate their ongoing use. The recommended practices will make it easier to carry out future preservation actions and to maintain continued access to important resources.

Handbook for Digital Projects: a Management Tool for Preservation and Access

The Northeast Document Conservation Center (NEDCC) announces the online availability of a new Web resource. The handbook was published to meet the needs of museums and other collection-holding institutions for basic information about planning and managing digital projects. Topics covered include the rationale for digitization and preservation, vendor relations, and an overview of copyright issues.

Preservation Metadata for Digital Objects: A Review of the State of the Art

The OCLC/RLG Working Group on Preservation Metadata has made available a white paper, which aims to identify and support the best practices for the long-term retention of digital objects.

Changing Trains at Wigan: Digital Preservation and the Future of Scholarship

The National Preservation Office has published this article by Dr Seamus Ross, of the Humanities Advanced Technology and Information Institute, University of Glasgow. The paper examines the impact of the digital landscape on long-term access to material.

Spanish Version of Cornell's Digital Imaging Tutorial is Released

The Department of Preservation and Conservation of Cornell University Library announces the release of the Spanish version of its online digital imaging tutorial, Moving Theory into Practice. Although designed as an adjunct to the recently published book and workshop series known by the same name, the tutorial can also serve as a standalone introduction to the use of digital imaging to convert and make accessible cultural heritage materials. The Spanish translation of the tutorial was funded by the Council on Library and Information Resources (CLIR).

RLG News

Joint RLG and OCLC Long-Term Retention Initiatives Move Forward

As announced in a March 2000 press release, RLG and OCLC have begun to collaborate on two working documents to establish best practices for the long-term retention of digital resources. Two key areas of digital archiving infrastructure are being addressed: the attributes of a digital archive and the development of a preservation metadata framework applicable to a broad range of digital preservation activity.

Both areas are being tackled by working groups comprised of key players and recognized experts in the archiving arena. Starting with white papers documenting "the state of the art," working group members will review progress to date and identify common practices among the most experienced institutions and organizations.

The working group on Preservation Metadata for Long-Term Retention has been working to document approaches for descriptive and management metadata needed in the long-term retention of digital files. This working group is accomplishing its goals through a series of five phases, corresponding with the following activities:

To launch the work of this group, a white paper was written by Brian Lavoie, OCLC. The white paper, Preservation Metadata for Digital Objects: A Review of the State of the Art, describes the current practices and theories on the use of metadata to support digital preservation. Over the next nine months, the five phases of work will progress with an anticipated completion date of December 2001. Further reports and findings of the working group will be made available through the Web site.

Using the emerging international standard of the Reference Model for an Open Archival Information System (OAIS) as a foundation, the Working Group on the Attributes of a Digital Archive for Research Repositories has been tasked with creating a definitional document that describes the characteristics of a sustainable digital archive for large-scale, heterogeneous collections held by research repositories. A report is underway and will also identify tools, where they exist, that will support institutions in seeking or building archiving services, as well as identify tool sets in need of development. A draft of the document will be ready at the beginning of May 2001. A public comment period for the draft will be announced on relevant discussion lists, and input is highly encouraged. Following a six-week comment period, the working group will revise the document with a goal of issuing a final report at the beginning of September 2001.

For further information about the working groups and their progress, please see the web sites mentioned above or contact Robin Dale or Meg Bellinger.

Hotlinks Included in This Issue

Editors' Interview

Council of Australian State Libraries http://www.casl.org.au/

Council of Australian University Librarians http://www.anu.edu/caul/index.html

Digital Services Project http://www.nla.gov.au/dsp/

Library's Directions for 2000-2002 http://www.nla.gov.au/library/directions.html

Monash University-led Recordkeeping Metadata Project http://www.sims.monash.edu.au/rcrg/

The Moving Frontier: Archiving, Preservation and Tomorrow's Digital Heritage http://www.nla.gov.au/nla/staffpaper/hberthon2.html

National Archives of Australia's Recordkeeping Metadata Standard for Commonwealth Agencies 1999 http://www.naa.gov.au/recordkeeping/control/rkms/summary.htm

Open Archival Information System Reference Model http://ssdoo.gsfc.nasa.gov/nost/isoas/us/overview.html

PADI http://www.nla.gov.au/padi/

RLG DigiNews Editors' Interview http://www.rlg.org/preserv/diginews/diginews4-4.html#feature1

Safeguarding Australia's Web Resources: Guidelines for Creators and Publishers http://www.nla.gov.au/guidelines/2000/webresources.html

SPIRT Recordkeeping Metadata Project at Monash University http://www.sims.monash.edu.au/rcrg/

Victorian Electronic Records Strategy project http://www.prov.vic.gov.au/vers/welcome.htm

Technical Feature

Ghostscript http://www.ghostscript.com/

Jeff Rothenberg's approach http://www.clir.org/pubs/reports/rothenberg/contents.html

PDF 1.3 http://partners.adobe.com/asn/developer/technotes/acrobatpdf.html

Prescript http://www.nzdl.org/html/prescript.html

Pstotext http://www.research.compaq.com/SRC/virtualpaper/pstotext.html

Highlighted Web Site

Nedlib http://www.kb.nl/coop/nedlib/homeflash.html

FAQ

Ann Arbor Conference http://www.si.umich.edu/e-recs/Report/FR0.TOC.html

Archival Authenticity in a Digital Age http://www.clir.org/pubs/abstract/pub92abst.html

BAC framework http://www.sis.pitt.edu/~nhprc/IApps.html

Council on Library and Information Resources

"The End of the Beginning: The SPIRT Recordkeeping Metadata Project," http://www.clir.org/pubs/abstract/pub89abst.html

"Functional Requirements for Evidence in Recordkeeping" Project http://www.sis.pitt.edu/~nhprc

Indiana University Electronic Records Project http://www.indiana.edu/~libarche/index.html

InterPARES http://www.interpares.org/

Philadelphia Electronic Records Project http://www.phila.gov/departments/records/Divisions/RM_Division/RM_Unit/PERP/PERP.htm

"The Preservation of the Integrity of Electronic Records" http://www.interpares.org/UBCProject

Preserving the Authenticity of Contingent Digital Objects http://www.dlib.org/dlib/july00/eppard/07eppard.html

Research Issues in Electronic Records http://www.nara.gov/nhprc/eragenda.html

SPIRT http://www.sims.monash.edu.au/rcrg/publications/archiv01.htm

Strategic Plan http://www.nara.gov/nhprc/strategy.html

U.S. Department of Defense http://jitc.fhu.disa.mil/recmgt

Calendar of Events

Museums and the Web 2001 http://www.archimuse.com/mw2001/

National Initiative for a Networked Cultural Heritage/College Art Association http://www.pipeline.com/~rabaron/ctm/CTM.htm

Announcements

Changing Trains at Wigan: Digital Preservation and the Future of Scholarship http://www.bl.uk/services/preservation/occpaper.pdf

Digital Production Centre Established at the University of Amsterdam http://www.uba.uva.nl/en/dpc/

Going Digital: Issues in Digitisation for Public Libraries http://www.earl.org.uk/policy/issuepapers/index.html

Handbook for Digital Projects: a Management Tool for Preservation and Access http://www.nedcc.org/digital/dighome.htm

Preservation Metadata for Digital Objects: A Review of the State of the Art http://www.oclc.org/digitalpreservation/presmeta_wp.pdf

Spanish Version of Cornell's Digital Imaging Tutorial http://www.library.cornell.edu/preservation/tutorial/

RLG News

March 2000 RLG and OCLC joint press release http://www.rlg.org/pr/pr2000-oclc.html

Reference Model for an Open Archival Information System (OAIS) http://www.ccsds.org/RP9905/RP9905.html

Preservation Metadata for Digital Objects: A Review of the State of the Art http://www.oclc.org/digitalpreservation/presmeta_wp.pdf

Working Group on Preservation Metadata for Long-Term Retention http://www.oclc.org/digitalpreservation/wgmetadata.htm

Working Group on Attributes of a Digital Archive for Research Repositories http://www.rlg.org/longterm/attribswg.html

Publishing Information

RLG DigiNews (ISSN 1093-5371) is a newsletter conceived by the members of the Research Libraries Group's PRESERV community. Funded in part by the Council on Library and Information Resources (CLIR) from 1998-2000, it is available internationally via the RLG PRESERV Web site (http://www.rlg.org/preserv/). It will be published six times in 2001. Materials contained in RLG DigiNews are subject to copyright and other proprietary rights. Permission is hereby given for the material in RLG DigiNews to be used for research purposes or private study. RLG asks that you observe the following conditions: Please cite the individual author and RLG DigiNews (please cite URL of the article) when using the material; please contact Jennifer Hartzell at jlh@notes.rlg.org, RLG Corporate Communications, when citing RLG DigiNews.

Any use other than for research or private study of these materials requires prior written authorization from RLG, Inc. and/or the author of the article.

RLG DigiNews is produced for the Research Libraries Group, Inc. (RLG) by the staff of the Department of Preservation and Conservation, Cornell University Library. Co-Editors, Anne R. Kenney and Oya Y. Rieger; Production Editor, Barbara Berger Eden; Associate Editor, Robin Dale (RLG); Technical Researchers, Richard Entlich and Peter Botticelli; Technical Assistant, Carla DeMello.

All links in this issue were confirmed accurate as of February 13, 2001.

Please send your comments and questions to preservation@cornell.edu.
